Discover more about PhenoRob: The PhenoRob paper videos present the most important findings published in high-ranked journals.

Action- or results-based payments for ecosystem services in the era of smart weeding robots?

Anna Massfeller, PhD student at the Institute for Food and Resource Economics (ILR), University of Bonn, presents her publication on “Action- or results-based payments for ecosystem services in the era of smart weeding robots?”. The video is based on the following paper: Massfeller, A., Zingsheim, M., Ahmadi, A., Martinsson, E., Storm, H., 2025. Action- or results-based payments for ecosystem services in the era of smart weeding robots? Biological Conservation 302, 110998. https://doi.org/10.1016/j.biocon.2025.110998

Field observation and verbal exchange as peer effects in farmers’ technology adoption decisions

Anna Massfeller, PhD student at the Institute for Food and Resource Economics (ILR), University of Bonn, presents her publication on “Field observation and verbal exchange as different peer effects in farmers’ technology adoption decisions”. The video is based on the following paper: Massfeller, A., Storm, H., 2024. Field observation and verbal exchange as different peer effects in farmers’ technology adoption decisions. Agric. Econ. https://doi.org/10.1111/agec.12847

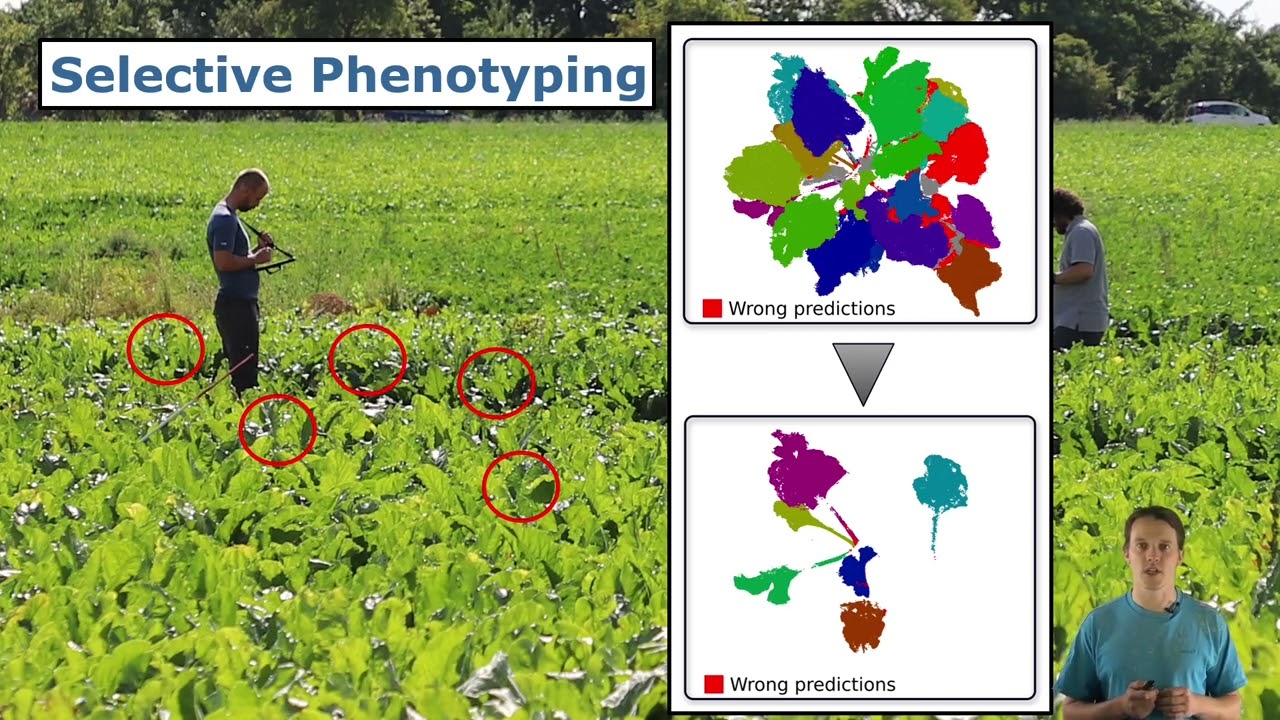

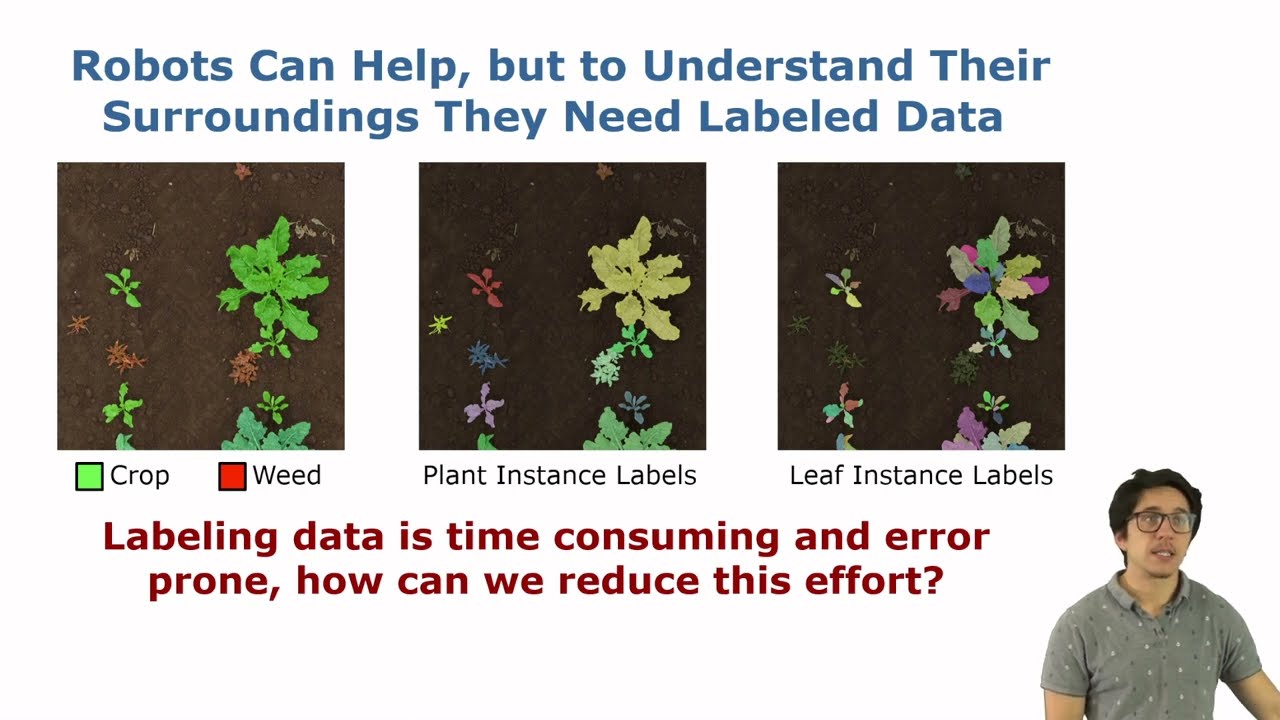

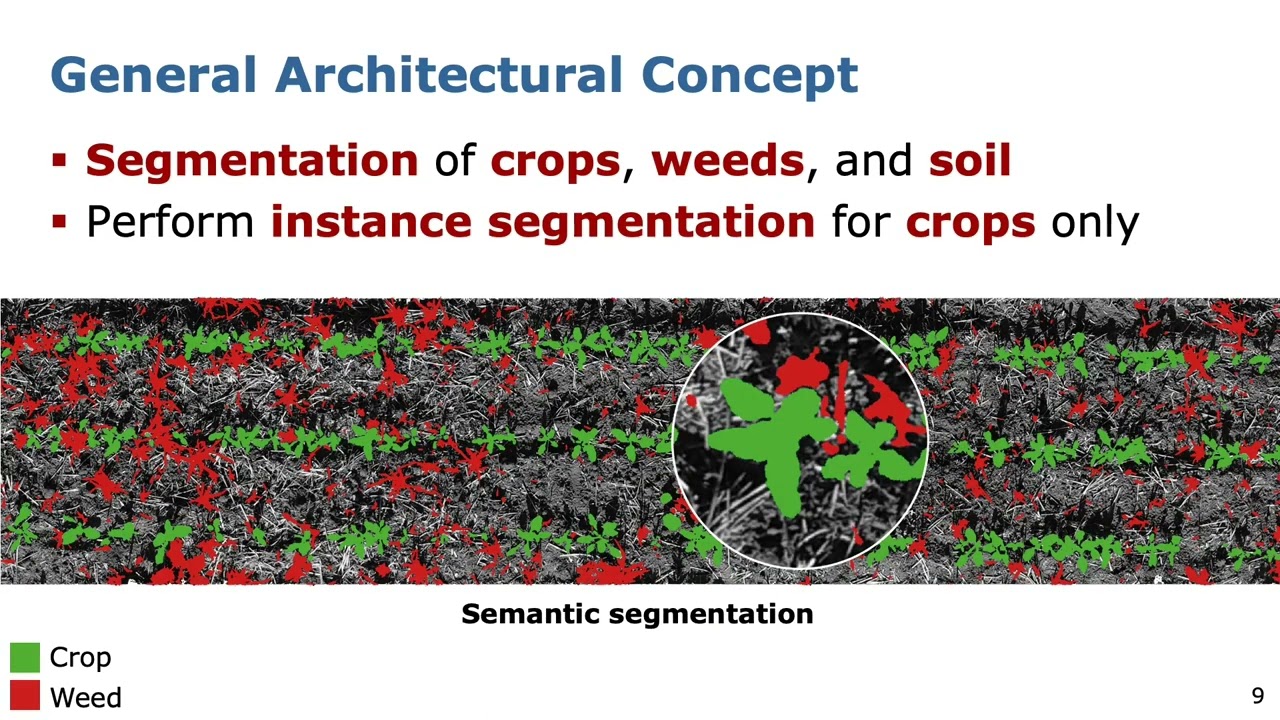

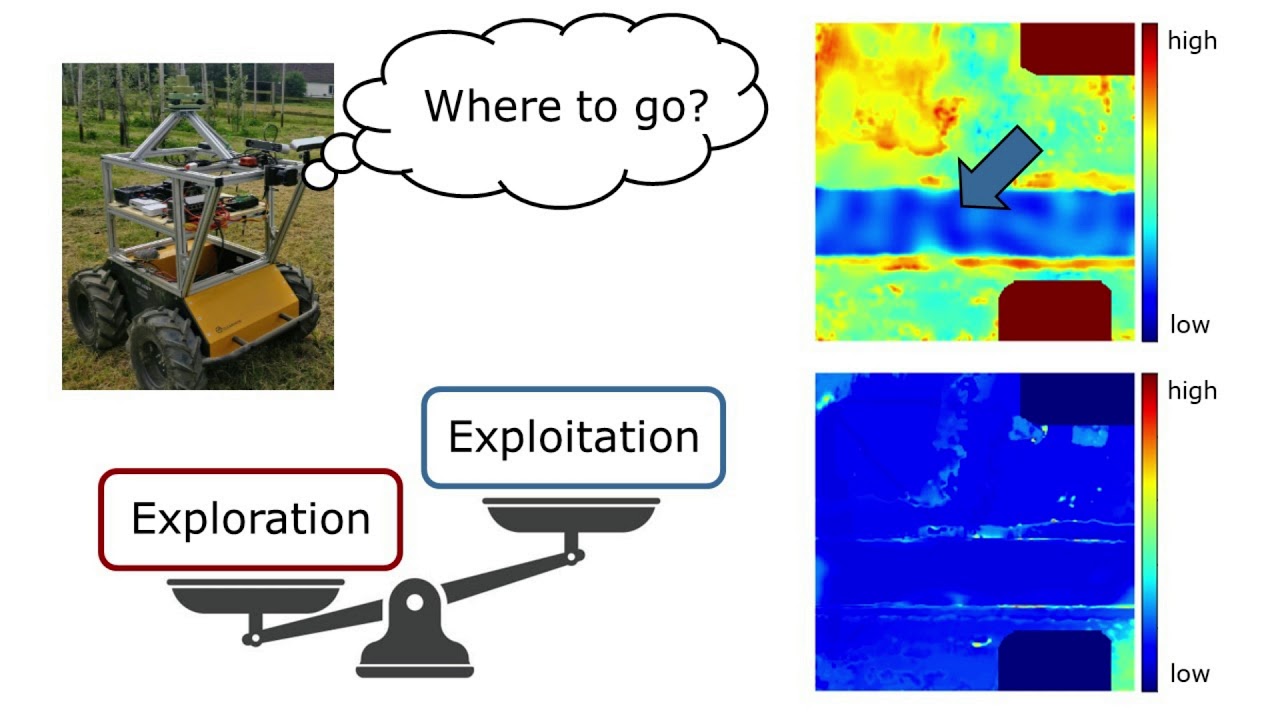

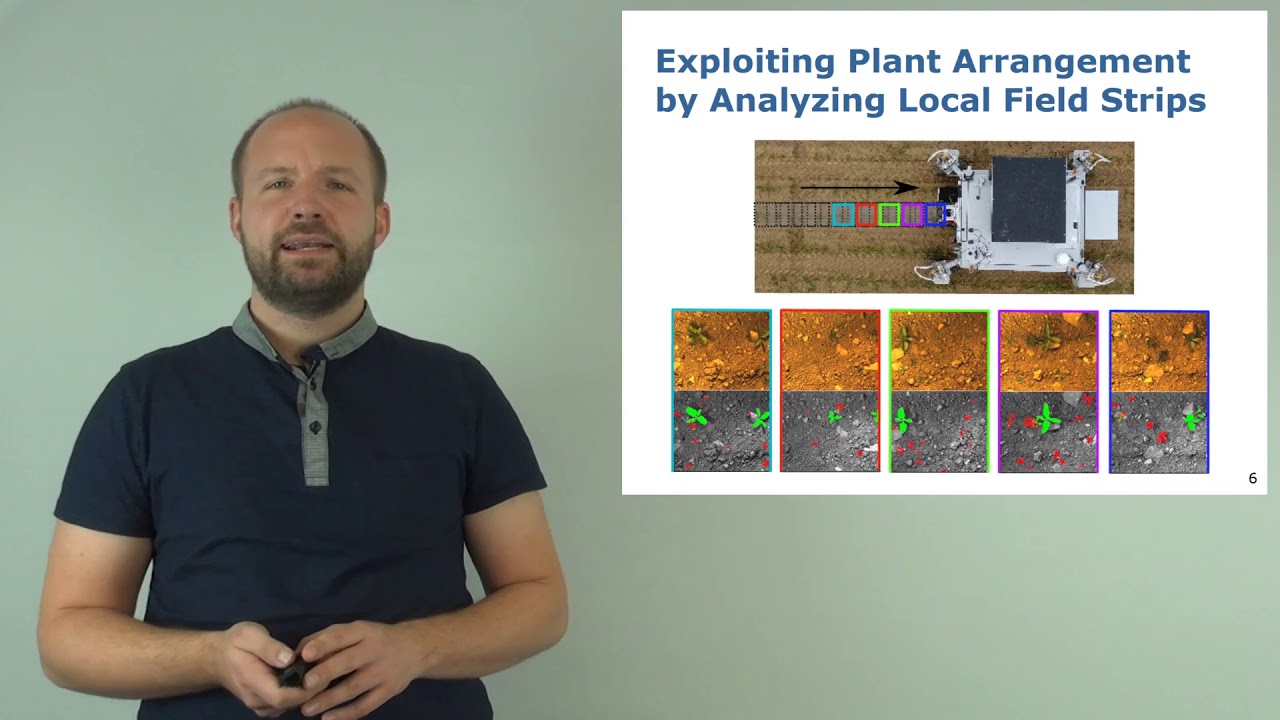

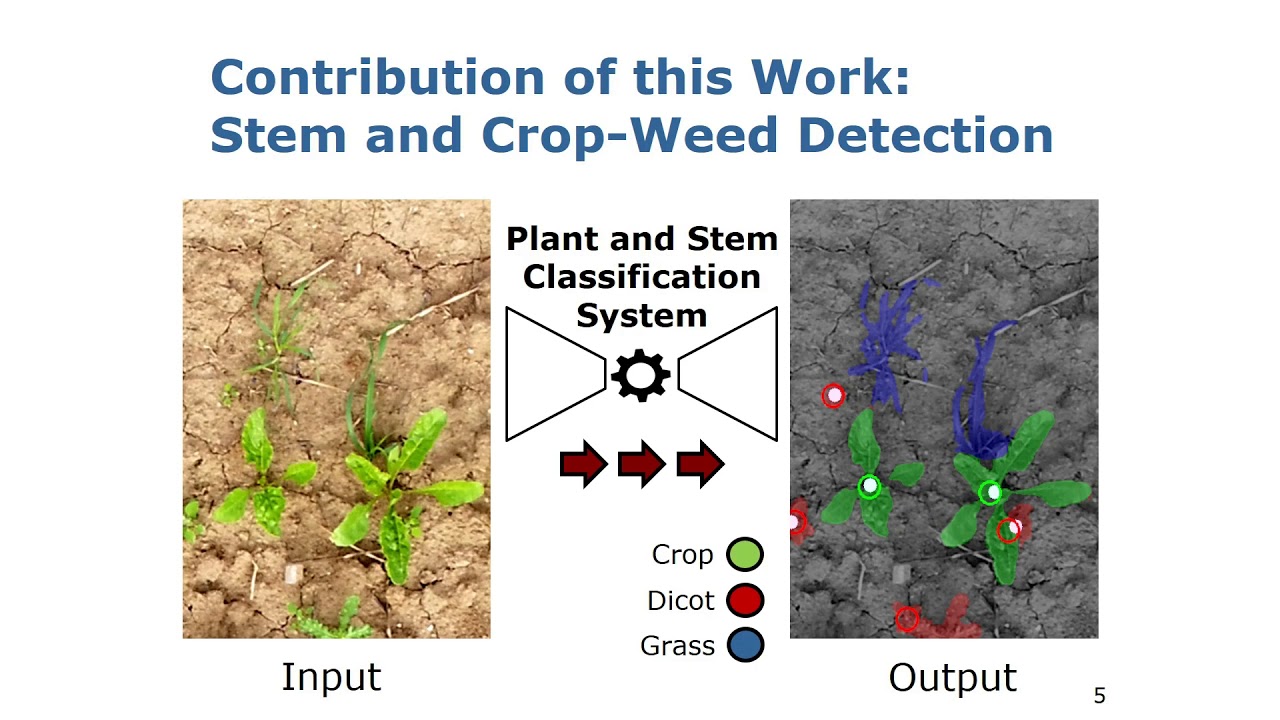

Unsupervised semantic label generation in agricultural fields

Gianmarco Roggiolani, PhD student at the Institute of Geodesy and Geoinformation (IGG), University of Bonn, presents his paper on “Unsupervised semantic label generation in agricultural fields”. The video is based on the following paper: G. Roggiolani, J. Rückin, M. Popović, J. Behley, and C. Stachniss, “Unsupervised Semantic Label Generation in Agricultural Fields,” Frontiers in Robotics and AI, 2025. PDF: https://lnkd.in/emtfSGSG CODE: https://lnkd.in/eW2_ZknG

Trailer: Knowledge Distillation for Efficient Panoptic Semantic Segmentation

Trailer for the paper: M. Li, M. Halstead and C. McCool, “Knowledge Distillation for Efficient Panoptic Semantic Segmentation: Applied to Agriculture,” 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 2023, pp. 4204-4211, doi: 10.1109/IROS55552.2023.10342527.

PhenoRob: Research Priorities to Leverage Smart Digital Technologies for Sustainable Crop Production

Agriculture today faces significant challenges that require new ways of thinking, such as smart digital technologies that enable innovative approaches. However, research gaps limit their potential to improve agriculture. In our PhenoRob paper “Research Priorities to Leverage Smart Digital Technologies for Sustainable Crop Production”, Sabine Seidel, Hugo Storm and Lasse Klingbeil outline an interdisciplinary agenda to address the key research gaps and advance sustainability in agriculture. They identify four critical areas:

1. Monitoring to detect weeds and the status of surrounding crops
2. Modelling to predict the yield impact and ecological impacts
3. Decision making by weighing the yield loss against the ecological impact
4. Model uptake, for example policy support to compensate farmers for ecological benefits

Closing these gaps requires strong interdisciplinary collaboration. In PhenoRob, this is achieved through five core experiments, seminar and lecture series, and interdisciplinary undergraduate and graduate teaching activities.

The paper is available at: H. Storm, S. J. Seidel, L. Klingbeil, F. Ewert, H. Vereecken, W. Amelung, S. Behnke, M. Bennewitz, J. Börner, T. Döring, J. Gall, A.-K. Mahlein, C. McCool, U. Rascher, S. Wrobel, A. Schnepf, C. Stachniss, and H. Kuhlmann, “Research Priorities to Leverage Smart Digital Technologies for Sustainable Crop Production,” European Journal of Agronomy, vol. 156, p. 127178, 2024. doi:10.1016/j.eja.2024.127178 https://www.sciencedirect.com/science/article/pii/S1161030124000996?via%3Dihub

ECCV’24: An Adaptive Screen-Space Meshing Approach for Normal Integration

Project Page: https://moritzheep.github.io/adaptive-screen-meshing/

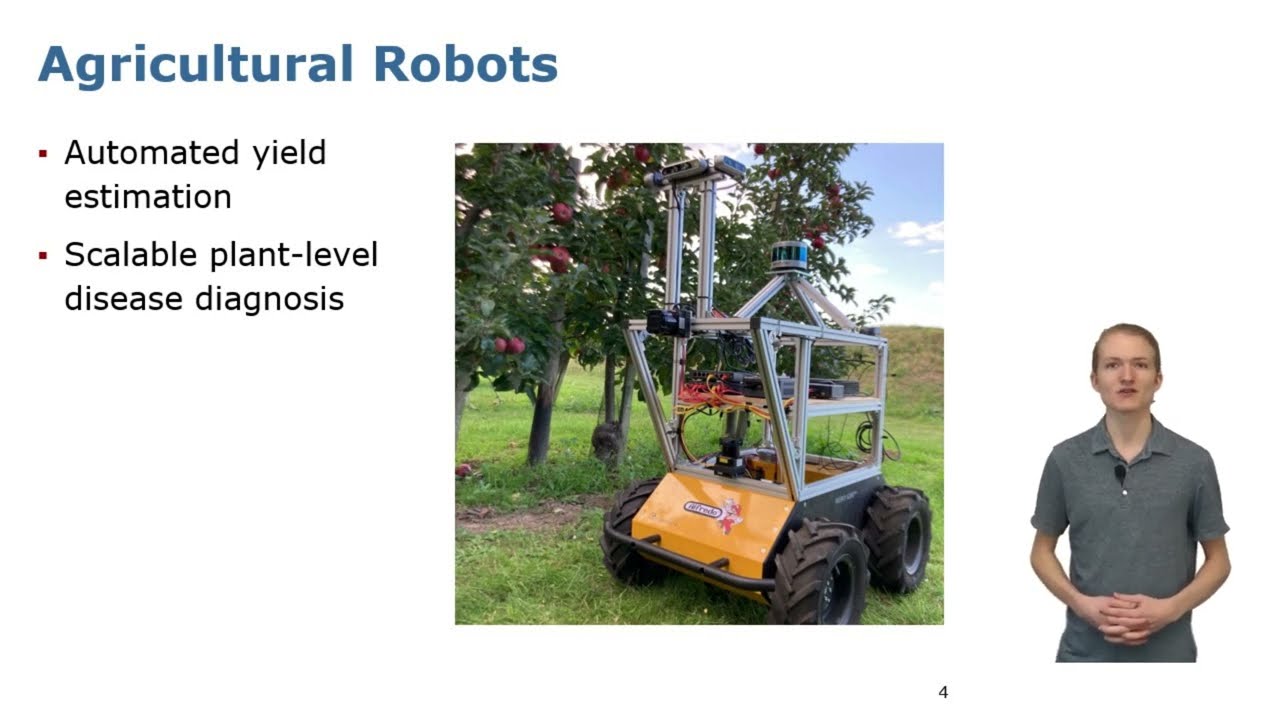

Positive public attitudes towards agricultural robots

Hendrik Zeddies, PhD student at the Center for Development Research, University of Bonn, presents his publication on the “Positive public attitudes towards agricultural robots”. The video is based on the following paper: Zeddies, H.H., Busch, G. & Qaim, M. Positive public attitudes towards agricultural robots. Sci Rep 14, 15607 (2024). https://doi.org/10.1038/s41598-024-66198-4

Effect of Different Postharvest Methods on Essential Oil Content and Composition of Mentha Genotypes

Charlotte Hubert-Schöler, PhD student at the Institute of Crop Science and Resource Conservation (INRES), University of Bonn, presents her paper on the “Effect of Different Postharvest Methods on Essential Oil Content and Composition of Three Mentha Genotypes” as part of the “Quality Assessment of Aromatic Plants by Hyperspectral and Mass spectrometric methods (QuAAP)” project. Hubert, C.; Tsiaparas, S.; Kahlert, L.; Luhmer, K.; Moll, M.D.; Passon, M.; Wüst, M.; Schieber, A.; Pude, R. Effect of Different Postharvest Methods on Essential Oil Content and Composition of Three Mentha Genotypes. Horticulturae 2023, 9, 960. https://doi.org/10.3390/horticulturae9090960

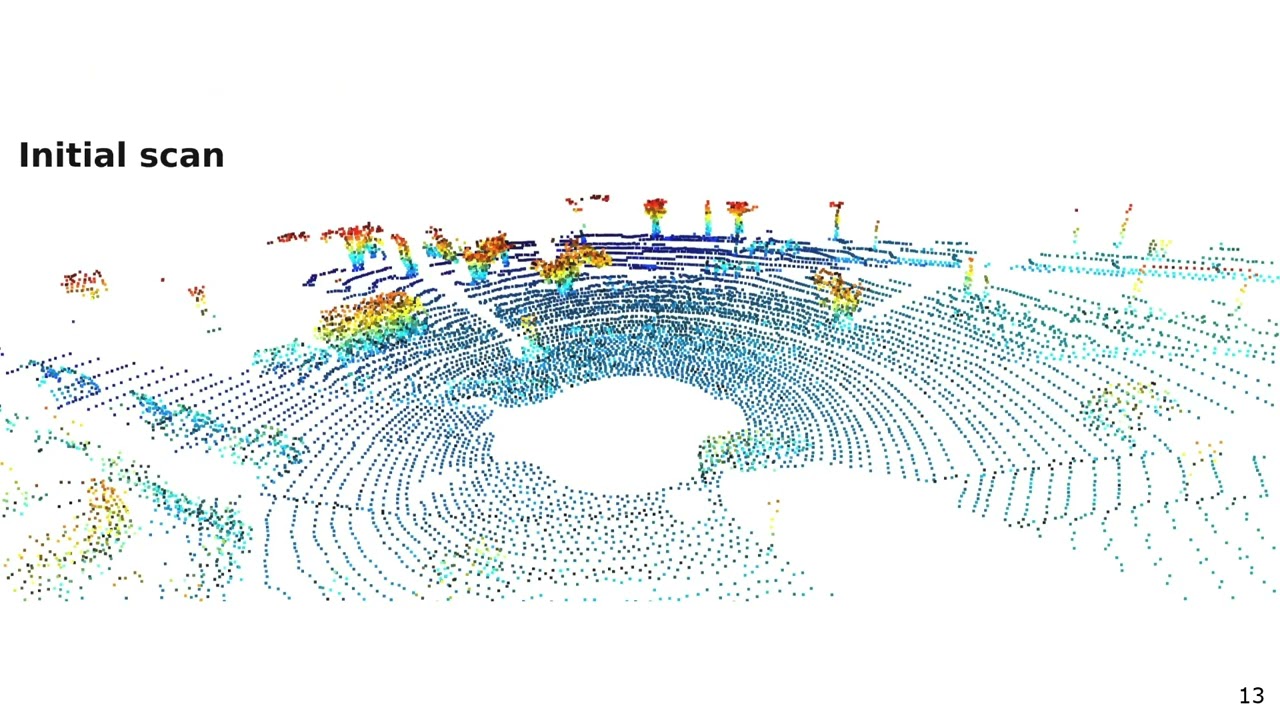

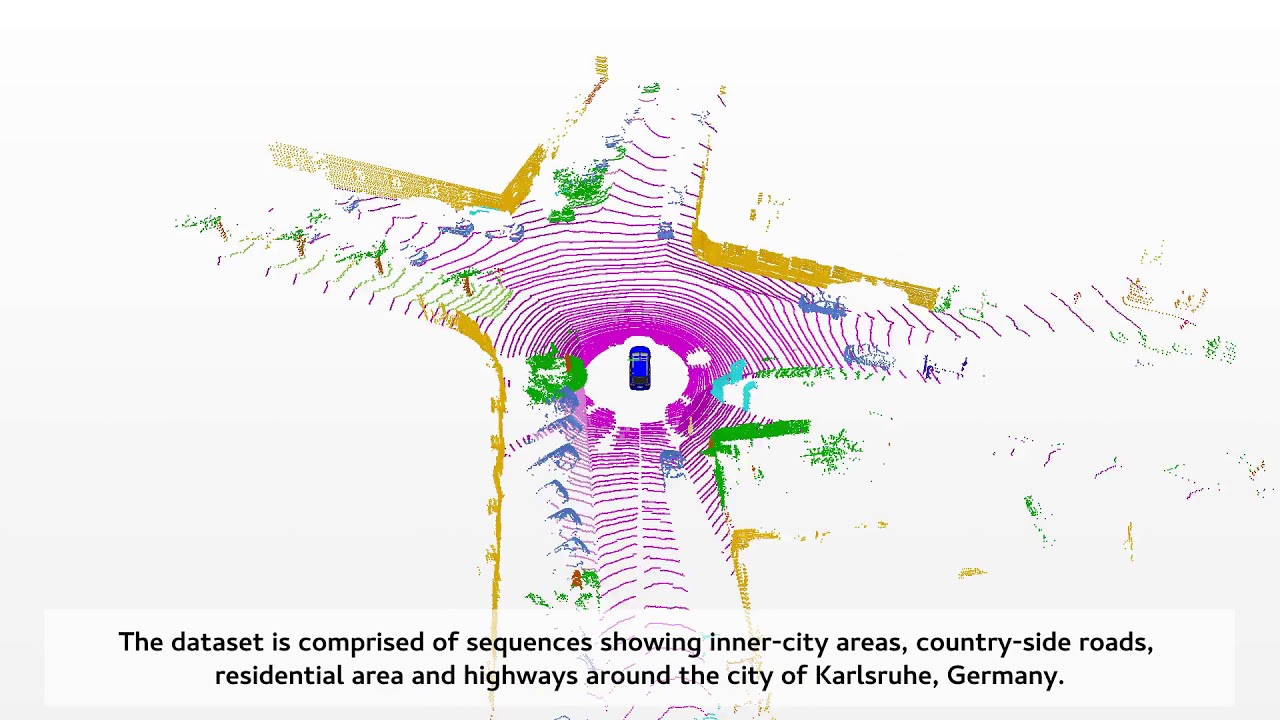

Trailer: Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion (CVPR’24)

Trailer for the paper: L. Nunes, R. Marcuzzi, B. Mersch, J. Behley, and C. Stachniss, “Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024.

Talk by L. Nunes: Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion (CVPR’24)

CVPR 2024 Talk by Lucas Nunes about the paper: L. Nunes, R. Marcuzzi, B. Mersch, J. Behley, and C. Stachniss, “Scaling Diffusion Models to Real-World 3D LiDAR Scene Completion,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/nunes2024cvpr.pdf CODE: https://github.com/PRBonn/LiDiff

Talk by M. Sodano: Open-World Semantic Segmentation Including Class Similarity (CVPR’24)

CVPR 2024 Talk by Matteo Sodano about the paper: M. Sodano, F. Magistri, L. Nunes, J. Behley, and C. Stachniss, “Open-World Semantic Segmentation Including Class Similarity,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2024. PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sodano2024cvpr.pdf CODE: https://github.com/PRBonn/ContMAV

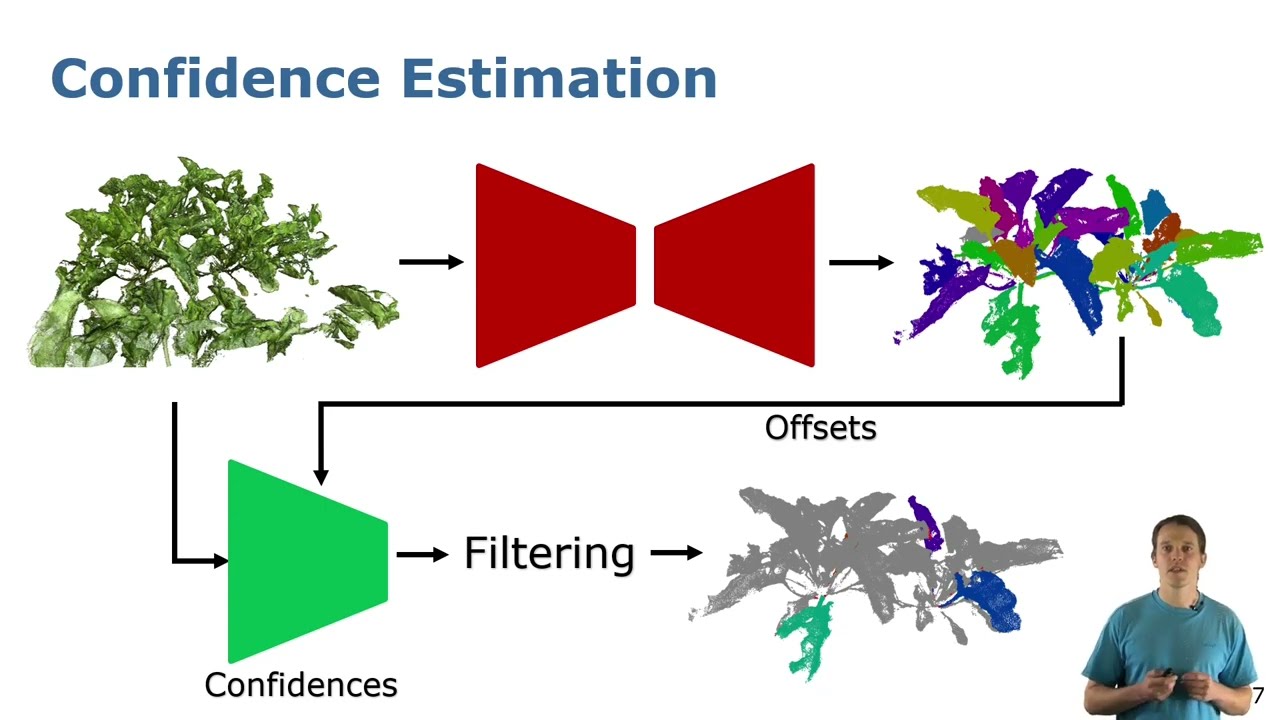

Trailer: High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real Field…

Trailer for the paper: E. Marks, M. Sodano, F. Magistri, L. Wiesmann, D. Desai, R. Marcuzzi, J. Behley, and C. Stachniss, “High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real Field Conditions,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, pp. 4791-4798, 2023. doi:10.1109/LRA.2023.3288383 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marks2023ral.pdf CODE: https://github.com/PRBonn/plant_pcd_segmenter VIDEO: https://youtu.be/dvA1SvQ4iEY TALK: https://youtu.be/_k3vpYl-UW0

Talk by E. Marks: High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real…

Talk about the paper: E. Marks, M. Sodano, F. Magistri, L. Wiesmann, D. Desai, R. Marcuzzi, J. Behley, and C. Stachniss, “High Precision Leaf Instance Segmentation in Point Clouds Obtained Under Real Field Conditions,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, pp. 4791-4798, 2023. doi:10.1109/LRA.2023.3288383 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marks2023ral.pdf CODE: https://github.com/PRBonn/plant_pcd_segmenter VIDEO: https://youtu.be/dvA1SvQ4iEY TALK: https://youtu.be/_k3vpYl-UW0

Trailer: Effectively Detecting Loop Closures using Point Cloud Density Maps

Paper trailer for the work: S. Gupta, T. Guadagnino, B. Mersch, I. Vizzo, and C. Stachniss, “Effectively Detecting Loop Closures using Point Cloud Density Maps,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/gupta2024icra.pdf CODE: https://github.com/PRBonn/MapClosures

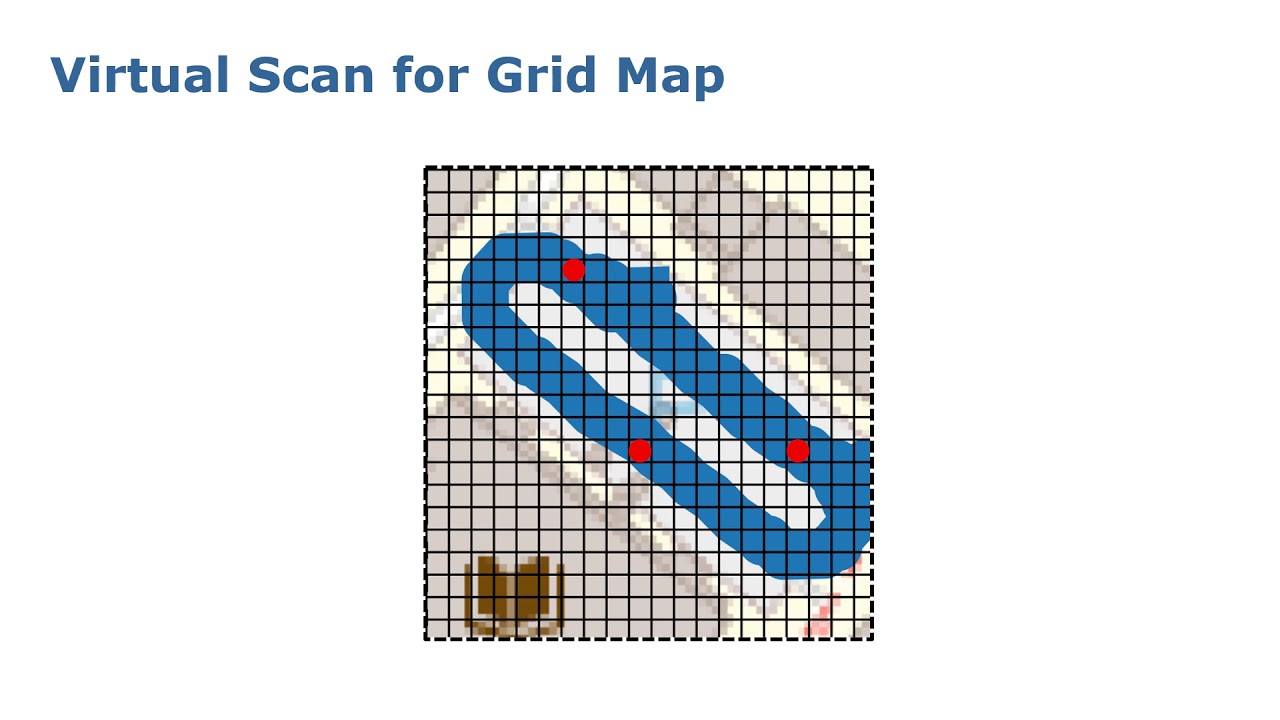

Talk by S. Gupta: Effectively Detecting Loop Closures using Point Cloud Density Maps (ICRA’2024)

Talk at ICRA’2024 about the paper: S. Gupta, T. Guadagnino, B. Mersch, I. Vizzo, and C. Stachniss, “Effectively Detecting Loop Closures using Point Cloud Density Maps,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/gupta2024icra.pdf CODE: https://github.com/PRBonn/MapClosures

Trailer: Unsupervised Pre-Training for 3D Leaf Instance Segmentation (RAL’2023)

Paper trailer about the work: G. Roggiolani, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Unsupervised Pre-Training for 3D Leaf Instance Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 8, pp. 7448-7455, 2023. doi:10.1109/LRA.2023.3320018 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/roggiolani2023ral.pdf CODE: https://github.com/PRBonn/Unsupervised-Pre-Training-for-3D-Leaf-Instance-Segmentation

Talk by G. Roggiolani: Unsupervised Pre-Training for 3D Leaf Instance Segmentation (RAL-ICRA’24)

Talk about the paper: G. Roggiolani, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Unsupervised Pre-Training for 3D Leaf Instance Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 8, pp. 7448-7455, 2023. doi:10.1109/LRA.2023.3320018 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/roggiolani2023ral.pdf CODE: https://github.com/PRBonn/Unsupervised-Pre-Training-for-3D-Leaf-Instance-Segmentation

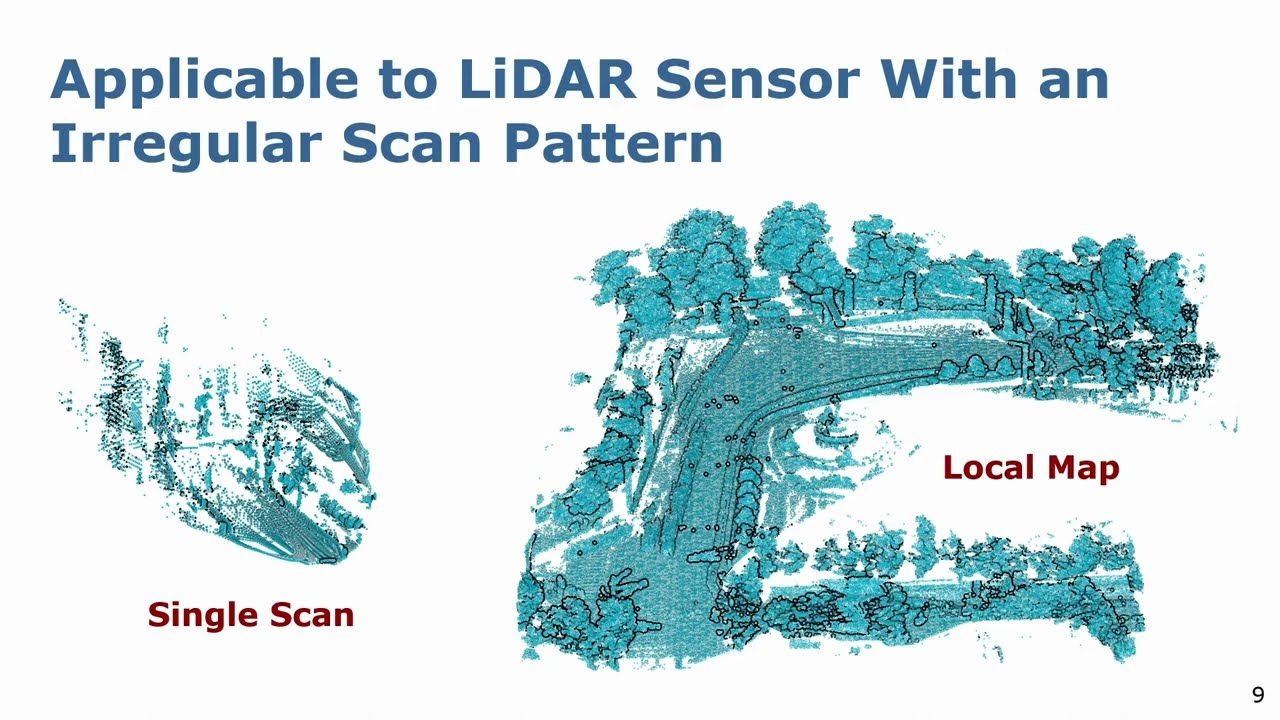

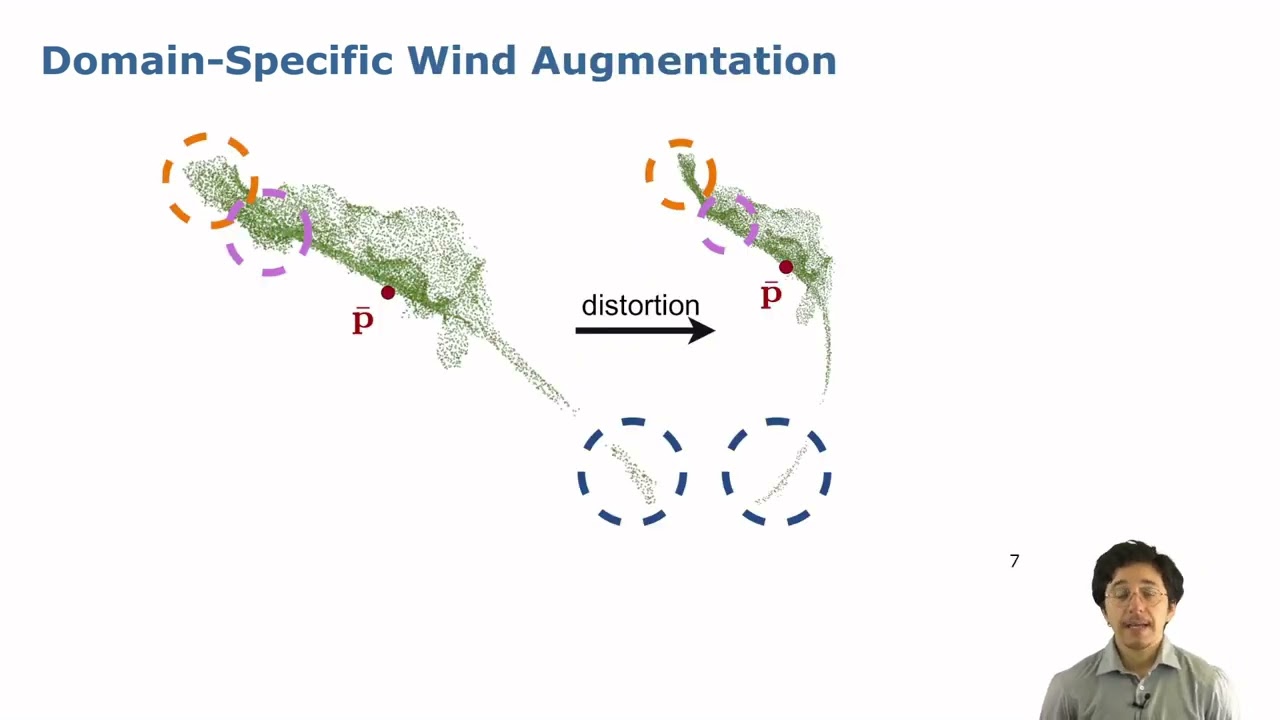

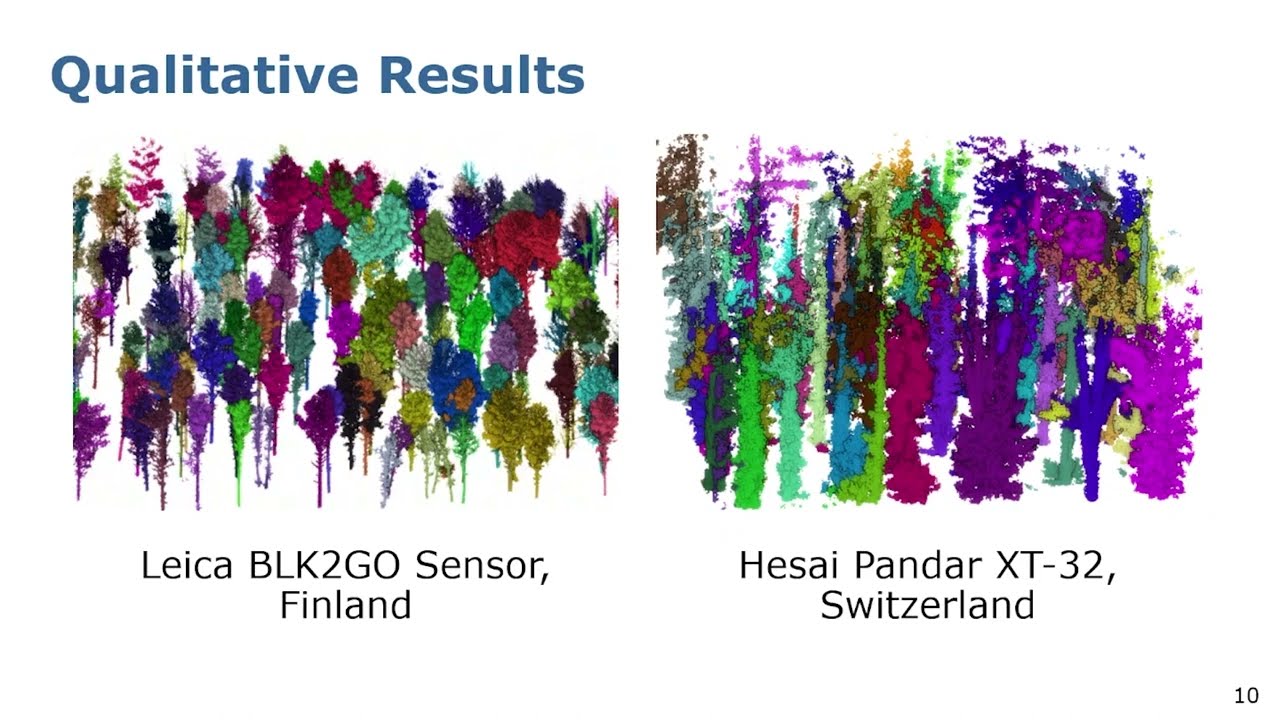

Trailer: Tree Instance Segmentation and Traits Estimation for Forestry Environments… (ICRA’24)

Paper Trailer for the work: M. V. R. Malladi, T. Guadagnino, L. Lobefaro, M. Mattamala, H. Griess, J. Schweier, N. Chebrolu, M. Fallon, J. Behley, and C. Stachniss, “Tree Instance Segmentation and Traits Estimation for Forestry Environments Exploiting LiDAR Data,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/malladi2024icra.pdf

Talk by M. Malladi: Tree Instance Segmentation and Traits Estimation for Forestry Environments…

ICRA’2024 Talk by Meher Malladi about the paper: M. V. R. Malladi, T. Guadagnino, L. Lobefaro, M. Mattamala, H. Griess, J. Schweier, N. Chebrolu, M. Fallon, J. Behley, and C. Stachniss, “Tree Instance Segmentation and Traits Estimation for Forestry Environments Exploiting LiDAR Data,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/malladi2024icra.pdf

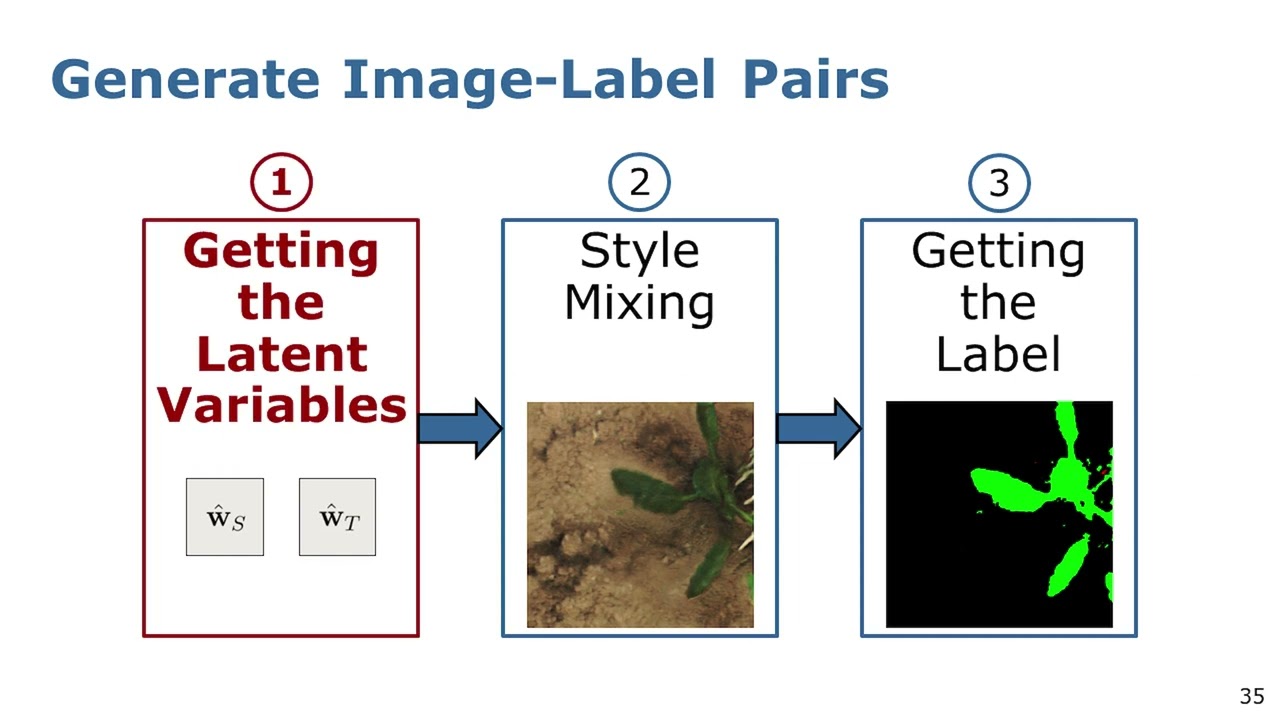

Talk by L. Chong: Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation…

Talk about the paper: Y. L. Chong, J. Weyler, P. Lottes, J. Behley, and C. Stachniss, “Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation in New Fields and on Different Robotic Platforms,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, pp. 5259–5266, 2023. doi:10.1109/LRA.2023.3293356 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chong2023ral.pdf CODE: https://github.com/PRBonn/StyleGenForLabels

Trailer: Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation in New …

Paper trailer for the paper: Y. L. Chong, J. Weyler, P. Lottes, J. Behley, and C. Stachniss, “Unsupervised Generation of Labeled Training Images for Crop-Weed Segmentation in New Fields and on Different Robotic Platforms,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 8, pp. 5259–5266, 2023. doi:10.1109/LRA.2023.3293356 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chong2023ral.pdf CODE: https://github.com/PRBonn/StyleGenForLabels

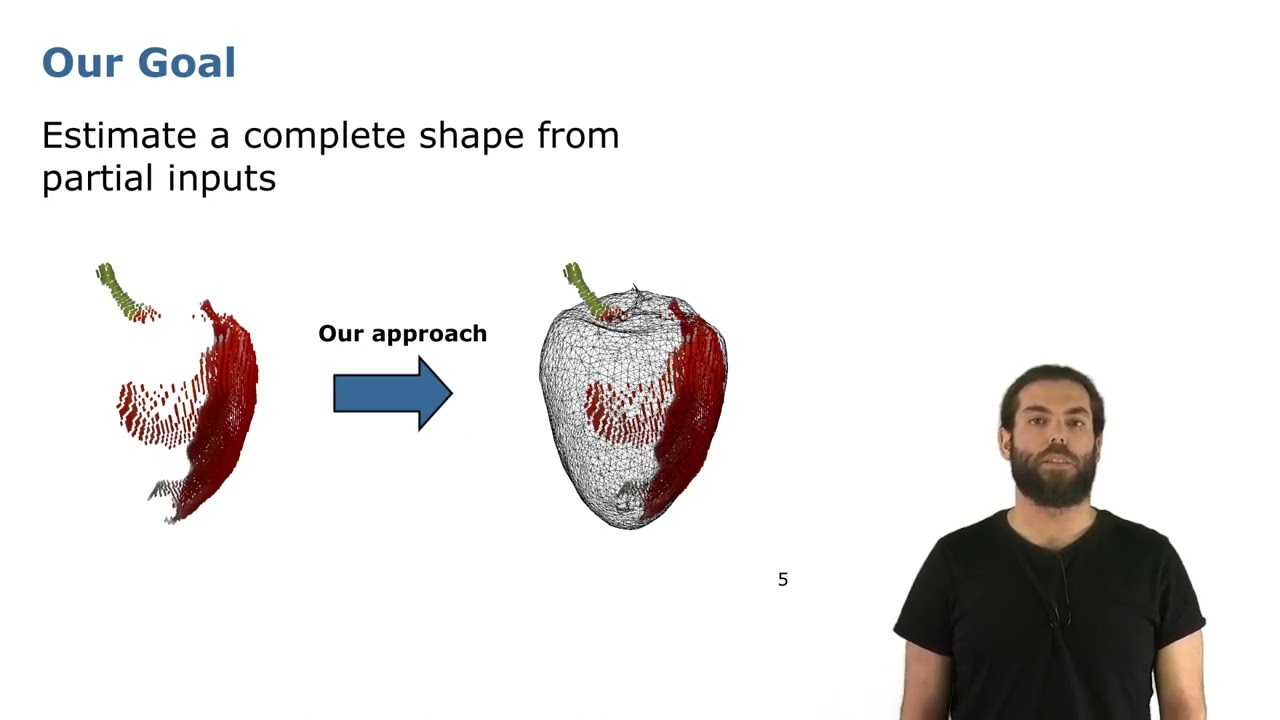

Talk by F. Magistri: Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction

ICRA’24 Talk by F. Magistri about the paper: F. Magistri, R. Marcuzzi, E. A. Marks, M. Sodano, J. Behley, and C. Stachniss, “Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction of Fruits for Agricultural Robots,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. Paper Trailer: https://youtu.be/U1xxnUGrVL4 ICRA’24 Talk: https://youtu.be/JKJMEC6zfHE PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2024icra.pdf Code (to be released soon): https://github.com/PRBonn/TCoRe

Trailer: Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction of Fruits..

Paper Trailer for: F. Magistri, R. Marcuzzi, E. A. Marks, M. Sodano, J. Behley, and C. Stachniss, “Efficient and Accurate Transformer-Based 3D Shape Completion and Reconstruction of Fruits for Agricultural Robots,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2024. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2024icra.pdf Code (to be released soon): https://github.com/PRBonn/TCoRe

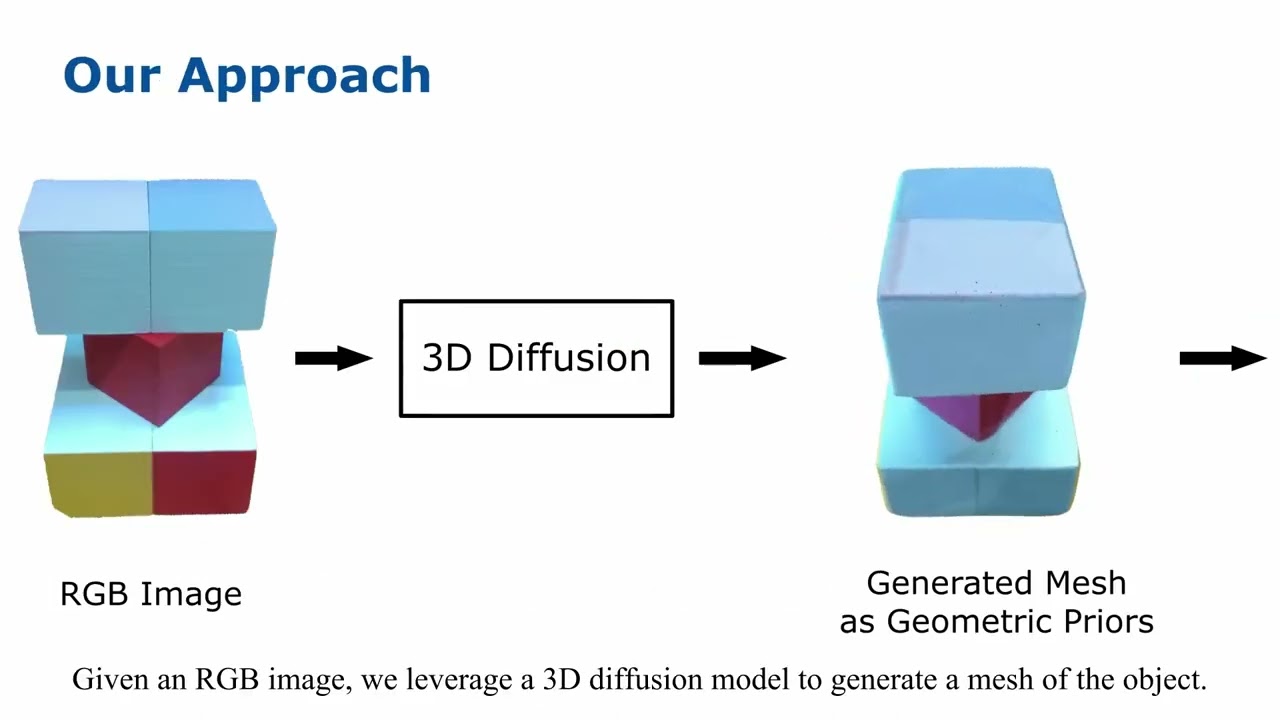

Exploiting Priors from 3D Diffusion Models for RGB-Based One-Shot View Planning

This video demonstrates the work presented in our paper “Exploiting Priors from 3D Diffusion Models for RGB-Based One-Shot View Planning” by S. Pan*, L. Jin*, X. Huang, C. Stachniss, M. Popović, and M. Bennewitz, submitted to IROS 2024 (*equal contribution). Paper link: To reconstruct an initially unknown object, one-shot view planning enables efficient data collection by predicting view configurations and planning the globally shortest path connecting all views at once. However, geometric priors about the object are required to conduct one-shot view planning. In this video, we propose a novel one-shot view planning approach that utilizes the powerful 3D generation capabilities of diffusion models as priors. By incorporating such geometric priors into our pipeline, we achieve effective one-shot view planning starting with only a single RGB image of the object to be reconstructed. The real-world experiments in this video support the claim that our approach balances well between object reconstruction quality and movement cost. code: github.com/psc0628/DM-OSVP
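The path-planning step described above, connecting all predicted views with the globally shortest path, can be illustrated with a small sketch. This is not the authors' implementation: the function name `shortest_view_path`, the use of straight-line Euclidean distance as movement cost, and the brute-force search are assumptions made for illustration, and the search is only practical for a handful of views.

```python
from itertools import permutations
from math import dist


def shortest_view_path(start, views):
    """Return (order, length) of the shortest open path that starts at
    `start` and visits every view position exactly once.

    Hypothetical helper: exhaustive search over all visiting orders,
    with Euclidean distance standing in for the robot's movement cost.
    """
    best_order, best_len = (), float("inf")
    for order in permutations(views):
        length = 0.0
        prev = start
        for view in order:
            length += dist(prev, view)
            prev = view
        if length < best_len:
            best_order, best_len = order, length
    return list(best_order), best_len


if __name__ == "__main__":
    start = (0.0, 0.0, 0.0)
    views = [(2.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
    order, length = shortest_view_path(start, views)
    print(order, length)
```

Because all views are known up front, the visiting order can be optimized once for the whole set, which is what distinguishes one-shot planning from moving greedily to a single next-best view.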

How Many Views Are Needed to Reconstruct an Unknown Object Using NeRF?

This video demonstrates the work presented in our paper “How Many Views Are Needed to Reconstruct an Unknown Object Using NeRF?” by S. Pan*, L. Jin*, H. Hu, M. Popović, and M. Bennewitz, submitted to ICRA 2024 (*equal contribution). Paper link: TBD Neural Radiance Fields (NeRFs) are gaining significant interest for online active object reconstruction due to their exceptional memory efficiency and requirement for only posed RGB inputs. Previous NeRF-based view planning methods exhibit computational inefficiency since they rely on an iterative paradigm, consisting of (1) retraining the NeRF when new images arrive; and (2) planning a path to the next best view only. To address these limitations, in this video, we propose a non-iterative pipeline based on the Prediction of the Required number of Views (PRV). The key idea behind our approach is that the required number of views to reconstruct an object depends on its complexity. The real-world experiments in this video support the claim that our method predicts a suitable required number of views and achieves sufficient NeRF reconstruction quality with a short global path. code: github.com/psc0628/NeRF-PRV

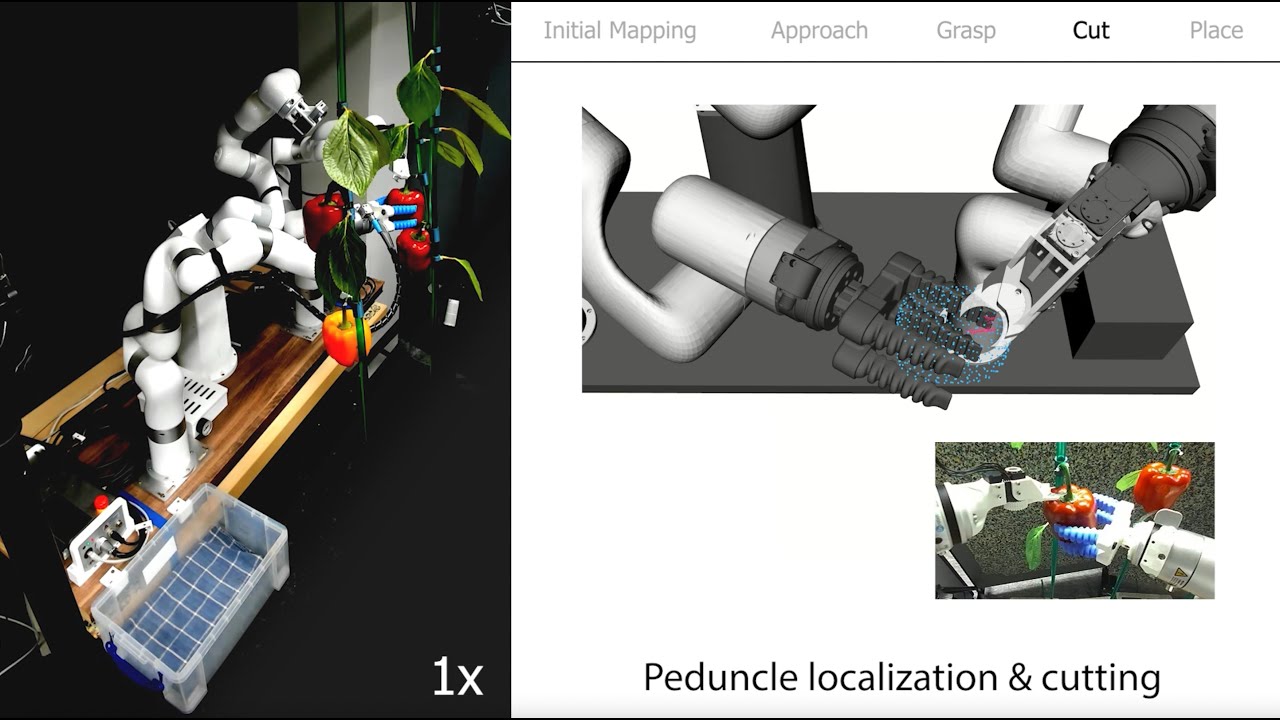

HortiBot: An Adaptive Multi-Arm System for Robotic Horticulture of Sweet Peppers, IROS’24 Submission

Paper Trailer for “HortiBot: An Adaptive Multi-Arm System for Robotic Horticulture of Sweet Peppers” by Christian Lenz, Rohit Menon, Michael Schreiber, Melvin Paul Jacob, Sven Behnke, and Maren Bennewitz submitted to IROS 2024

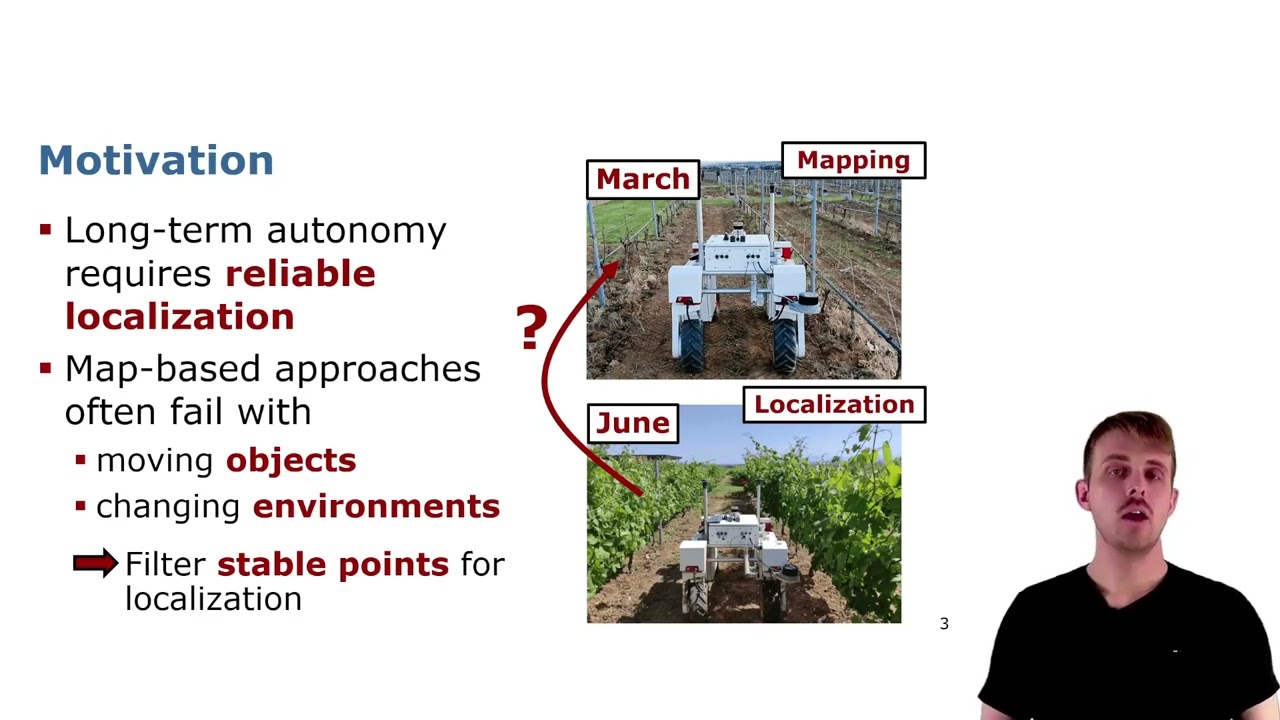

Trailer: Generalizable Stable Points Segmentation for 3D LiDAR Long-Term Localization (RAL’24)

Short Trailer Video for the RAL Paper to be presented at ICRA’2024: I. Hroob, B. Mersch, C. Stachniss, and M. Hanheide, “Generalizable Stable Points Segmentation for 3D LiDAR Scan-to-Map Long-Term Localization,” IEEE Robotics and Automation Letters (RA-L), vol. 9, iss. 4, pp. 3546-3553, 2024. doi:10.1109/LRA.2024.3368236 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/hroob2024ral.pdf

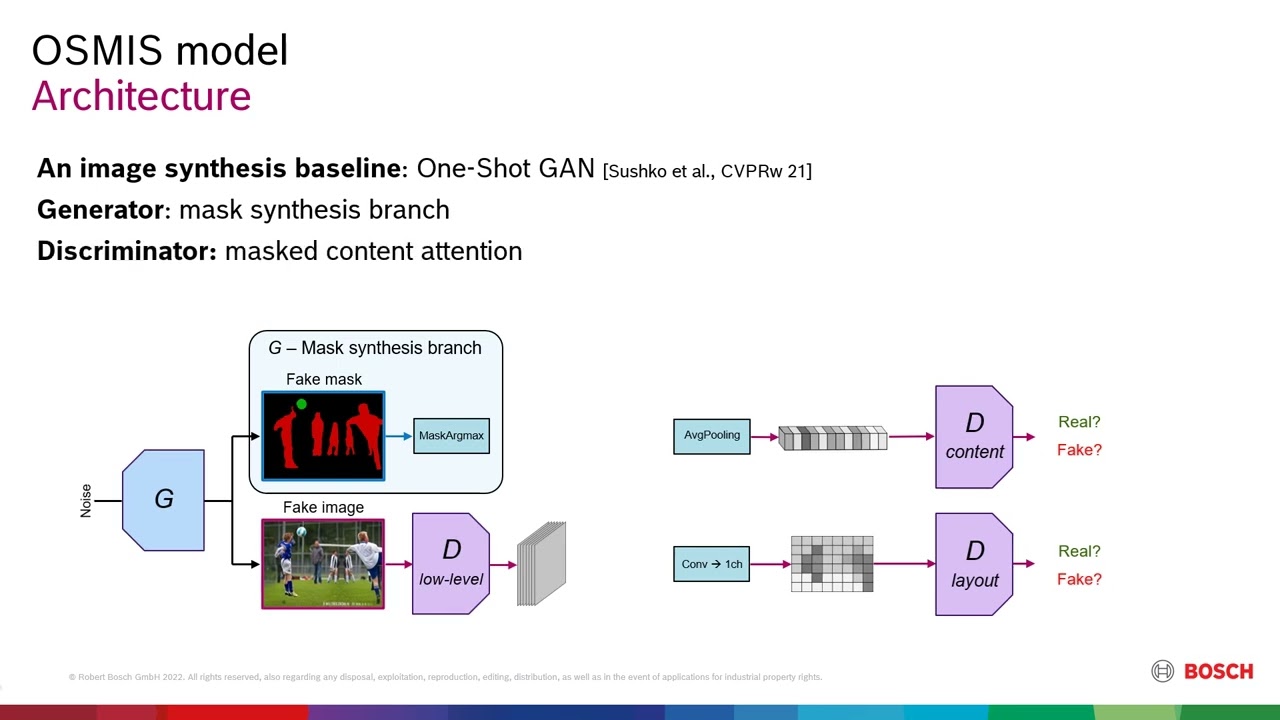

One-Shot Synthesis of Images and Segmentation Masks

Authors: Sushko, Vadim*; Zhang, Dan; Gall, Jürgen; Khoreva, Anna Description: Joint synthesis of images and segmentation masks with generative adversarial networks (GANs) is promising to reduce the effort needed for collecting image data with pixel-wise annotations. However, to learn high-fidelity image-mask synthesis, existing GAN approaches first need a pre-training phase requiring large amounts of image data, which limits their utilization in restricted image domains. In this work, we take a step to reduce this limitation, introducing the task of one-shot image-mask synthesis. We aim to generate diverse images and their segmentation masks given only a single labelled example, and assuming, contrary to previous models, no access to any pre-training data. To this end, inspired by the recent architectural developments of single-image GANs, we introduce our OSMIS model which enables the synthesis of segmentation masks that are precisely aligned to the generated images in the one-shot regime. Besides achieving the high fidelity of generated masks, OSMIS outperforms state-of-the-art single-image GAN models in image synthesis quality and diversity. In addition, despite not using any additional data, OSMIS demonstrates an impressive ability to serve as a source of useful data augmentation for one-shot segmentation applications, providing performance gains that are complementary to standard data augmentation techniques. Code is available at https://github.com/boschresearch/one-shot-synthesis.

Evaluation of multiple spring wheat cultivars in diverse intercropping systems

Madhuri Paul is a PhD student at the Institute Agroecology and Organic Farming, University of Bonn. M. R. Paul, D. T. Demie, S. J. Seidel, and T. F. Döring, “Evaluation of multiple spring wheat cultivars in diverse intercropping systems,” European Journal of Agronomy, vol. 152, p. 127024, 2024. [doi:https://doi.org/10.1016/j.eja.2023.127024]

Effects of spring wheat / faba bean mixtures on early crop development

Madhuri Paul is a PhD student at the Institute Agroecology and Organic Farming, University of Bonn. M. R. Paul, D. T. Demie, S. J. Seidel, and T. F. Döring, “Effects of spring wheat / faba bean mixtures on early crop development,” Plant and Soil, 2023. [doi:10.1007/s11104-023-06111-6]

Image-Coupled Volume Propagation for Stereo Matching by Oh-Hun Kwon and Eduard Zell

This trailer video is based on the following publication: O. Kwon and E. Zell, “Image-Coupled Volume Propagation for Stereo Matching,” in 2023 IEEE International Conference on Image Processing (ICIP), 2023, pp. 2510-2514. doi:10.1109/ICIP49359.2023.10222247

DawnIK: Decentralized Collision-Aware Inverse Kinematics Solver for Heterogeneous Multi-Arm Systems

This video demonstrates the work presented in our paper “DawnIK: Decentralized Collision-Aware Inverse Kinematics Solver for Heterogeneous Multi-Arm Systems” by S. Marangoz, R. Menon, N. Dengler, and M. Bennewitz, submitted to IEEE-RAS International Conference on Humanoid Robots (Humanoids), 2023. With collaborative service robots gaining traction, different robotic systems have to work in close proximity. This means that current inverse kinematics approaches must avoid not only collisions with themselves but also collisions with other robot arms. Therefore, we present a novel approach to compute inverse kinematics for serial manipulators that takes into account different constraints while trying to reach a desired end-effector pose that avoids collisions with themselves and other arms. We formulate different constraints as weighted cost functions to be optimized by a non-linear optimization solver. Our approach is superior to a state-of-the-art inverse kinematics solver in terms of collision avoidance in the presence of multiple arms in confined spaces, with no collisions occurring in any of the experimental scenarios. When the probability of collision is low, our approach shows better performance at trajectory tracking as well. Additionally, our approach is capable of simultaneous yet decentralized control of multiple arms for trajectory tracking in intersecting workspaces without any collisions. Paper link: https://arxiv.org/abs/2307.12750
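The idea of expressing constraints as weighted cost terms and handing their sum to a non-linear optimizer can be sketched on a toy problem. The example below is an illustrative assumption, not DawnIK itself: it uses a planar two-link arm, a single point obstacle, hypothetical weights `w_goal` and `w_col`, and a simple finite-difference gradient descent with step halving in place of a real solver.

```python
import math


def forward_kinematics(q, l1=1.0, l2=1.0):
    """Elbow and end-effector positions of a planar two-link arm."""
    elbow = (l1 * math.cos(q[0]), l1 * math.sin(q[0]))
    ee = (elbow[0] + l2 * math.cos(q[0] + q[1]),
          elbow[1] + l2 * math.sin(q[0] + q[1]))
    return elbow, ee


def make_cost(target, obstacle, w_goal=1.0, w_col=10.0, safe=0.3):
    """Weighted sum of a goal term and a collision penalty (all hypothetical)."""
    def cost(q):
        elbow, ee = forward_kinematics(q)
        goal_term = (ee[0] - target[0]) ** 2 + (ee[1] - target[1]) ** 2
        col_term = 0.0
        for point in (elbow, ee):
            # Penalize only points closer to the obstacle than the safety margin.
            col_term += max(0.0, safe - math.dist(point, obstacle)) ** 2
        return w_goal * goal_term + w_col * col_term
    return cost


def minimize(cost, q0, iters=300, step=0.5, eps=1e-6):
    """Gradient descent with forward differences; a step is only accepted
    if it lowers the cost, so the returned cost never exceeds cost(q0)."""
    q = list(q0)
    c = cost(q)
    for _ in range(iters):
        grad = []
        for i in range(len(q)):
            shifted = list(q)
            shifted[i] += eps
            grad.append((cost(shifted) - c) / eps)
        s = step
        while s > 1e-8:
            cand = [qi - s * gi for qi, gi in zip(q, grad)]
            cand_cost = cost(cand)
            if cand_cost < c:
                q, c = cand, cand_cost
                break
            s *= 0.5
        else:
            break  # no improving step found; stop early
    return q, c


if __name__ == "__main__":
    cost = make_cost(target=(1.0, 1.0), obstacle=(1.0, 0.2))
    q, c = minimize(cost, [0.3, 0.3])
    print(q, c)
```

Changing the weights shifts the trade-off between reaching the target and keeping clear of the obstacle, which is the design lever a weighted-cost formulation provides.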

Graph-Based View Motion Planning for Fruit Detection – IROS23 Paper Presentation

Paper presentation by Tobias Zaenker given at IROS 2023. For more details, have a glance at the paper! Title: “Graph-Based View Motion Planning for Fruit Detection” Full paper link: https://arxiv.org/pdf/2303.03048.pdf website: https://www.hrl.uni-bonn.de/Members/tzaenker/tobias-zaenker

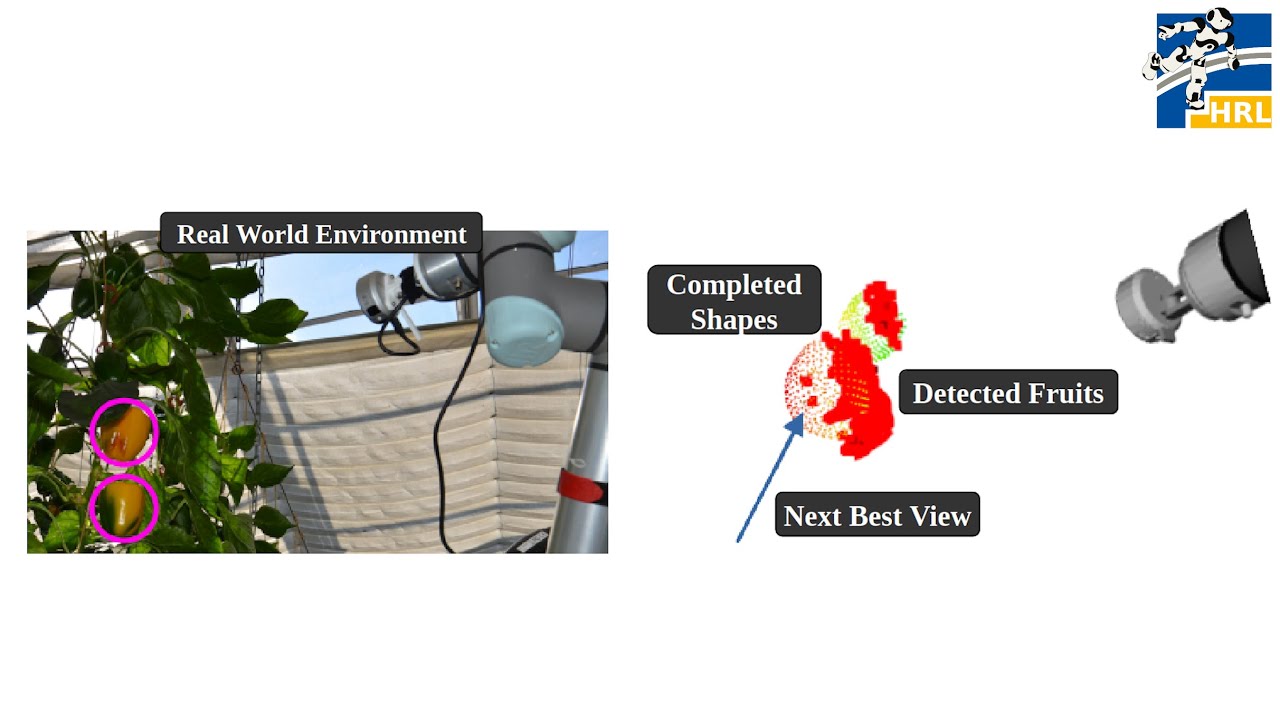

NBV-SC: Next Best View Planning based on Shape Completion – IROS23 Paper Presentation

Paper presentation by Rohit Menon given at IROS 2023. For more details, have a glance at the paper! Title: “NBV-SC: Next Best View Planning based on Shape Completion for Fruit Mapping and Reconstruction” Full paper link: https://arxiv.org/pdf/2209.15376.pdf Institute website: https://www.hrl.uni-bonn.de/Members/menon/rohit-menon

Active Implicit Reconstruction using One-Shot View Planning

This video demonstrates the work presented in our paper “Active Implicit Reconstruction using One-Shot View Planning” by H. Hu*, S. Pan*, L. Jin, M. Popović, and M. Bennewitz, submitted to ICRA 2024 (*equal contribution). Paper link: TBD Active object reconstruction using autonomous robots is gaining great interest. A primary goal in this task is to maximize the information of the object to be reconstructed, given limited on-board resources. Previous view planning methods exhibit inefficiency since they rely on an iterative paradigm based on explicit representations, consisting of (1) planning a path to the next-best view only; and (2) requiring a considerable number of less-gain views in terms of surface coverage. To address these limitations, in this video, we integrated implicit representations into One-Shot View Planning (OSVP), which directly predicts a set of views. The key idea behind our approach is to use implicit representations to obtain the small missing surface areas instead of observing them with extra views. The real-world comparative experiments in this video support the claim that our method achieves sufficient reconstruction quality with lower movement cost under the same view budget compared to an explicit NBV method. code: github.com/psc0628/AIR-OSVP

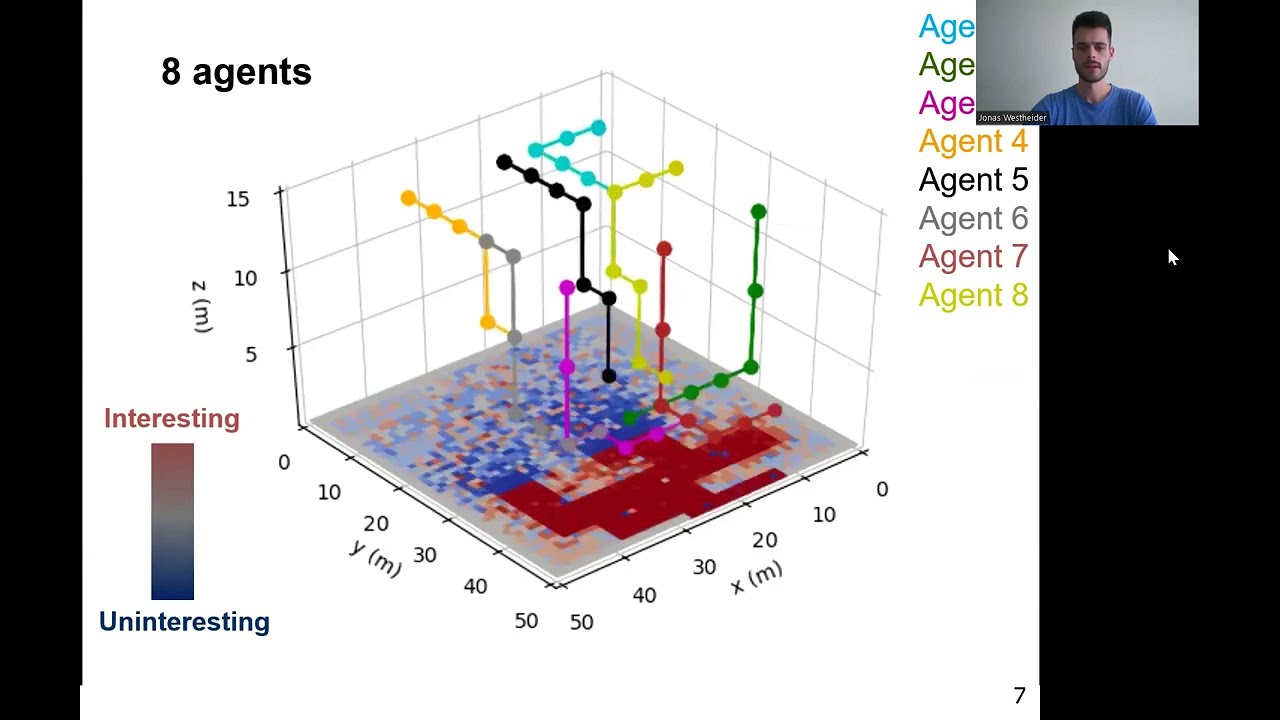

Multi-UAV Adaptive Path Planning Using Deep Reinforcement Learning

Jonas Westheider is a PhD Student at the Institute of Geodesy and Geoinformation (IGG), University of Bonn. Westheider, J., Rückin, J., & Popović, M., “Multi-UAV Adaptive Path Planning Using Deep Reinforcement Learning”. arXiv preprint arXiv:2303.01150. doi: https://doi.org/10.1016/j.robot.2022.104288

Talk by Y. Pan: Panoptic Mapping with Fruit Completion and Pose Estimation … (IROS’23)

IROS’23 Talk for the paper: Y. Pan, F. Magistri, T. Läbe, E. Marks, C. Smitt, C. S. McCool, J. Behley, and C. Stachniss, “Panoptic Mapping with Fruit Completion and Pose Estimation for Horticultural Robots,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/pan2023iros.pdf CODE: https://github.com/PRBonn/HortiMapping

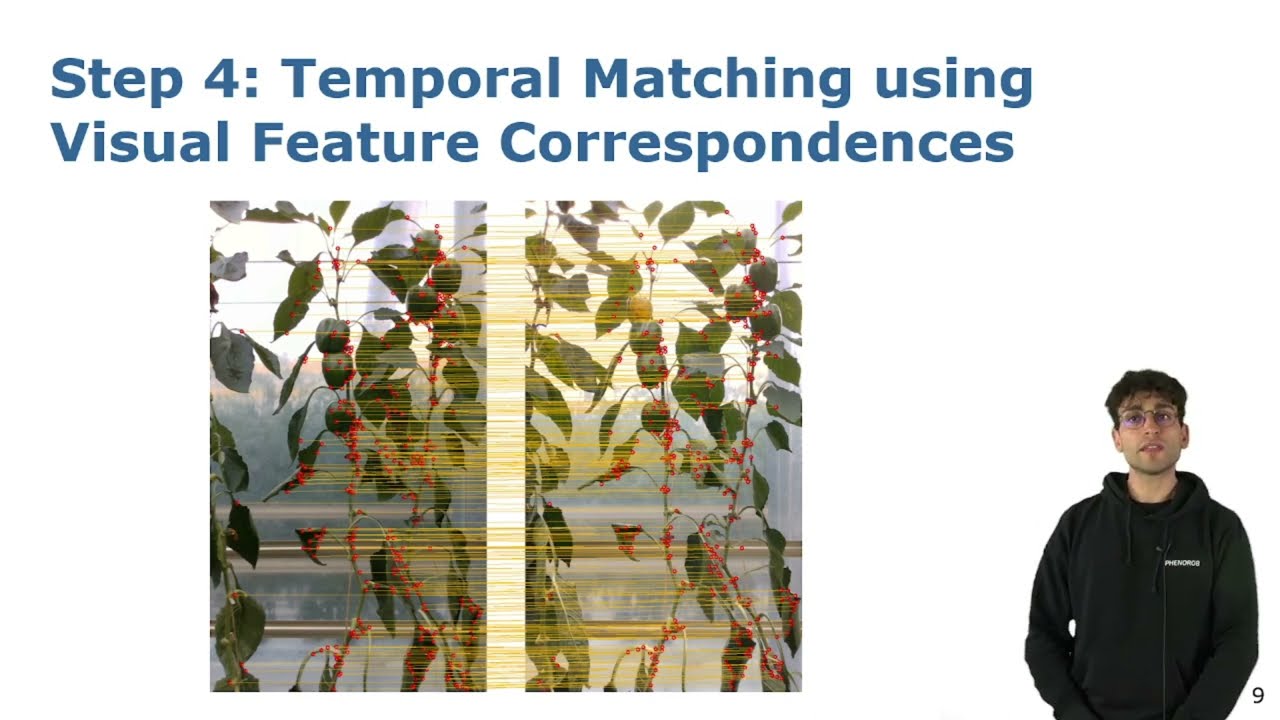

Talk by L. Lobefaro: Estimating 4D Data Associations Towards Spatial-Temporal Mapping … (IROS’23)

IROS’23 Talk for the paper: L. Lobefaro, M. V. R. Malladi, O. Vysotska, T. Guadagnino, and C. Stachniss, “Estimating 4D Data Associations Towards Spatial-Temporal Mapping of Growing Plants for Agricultural Robots,” in Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/lobefaro2023iros.pdf CODE: https://github.com/PRBonn/plants_temporal_matcher

Talk by I. Vizzo: KISS-ICP: In Defense of Point-to-Point ICP (RAL-IROS’23)

RAL-IROS’23 Talk for the paper: I. Vizzo, T. Guadagnino, B. Mersch, L. Wiesmann, J. Behley, and C. Stachniss, “KISS-ICP: In Defense of Point-to-Point ICP – Simple, Accurate, and Robust Registration If Done the Right Way,” IEEE Robotics and Automation Letters (RA-L), vol. 8, iss. 2, pp. 1-8, 2023. doi:10.1109/LRA.2023.3236571 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2023ral.pdf CODE: https://github.com/PRBonn/kiss-icp

Adoption and diffusion of digital farming technologies

Dr. Sebastian Rasch is a Postdoctoral Researcher at the Institute for Food and Resource Economics (ILR), University of Bonn. Shang, L., Heckelei, T., Gerullis, M. K., Börner, J., & Rasch, S. 2021. Adoption and diffusion of digital farming technologies – integrating farm-level evidence and system interaction. Agricultural Systems, 190, 103074. doi: https://doi.org/10.1016/j.agsy.2021.103074

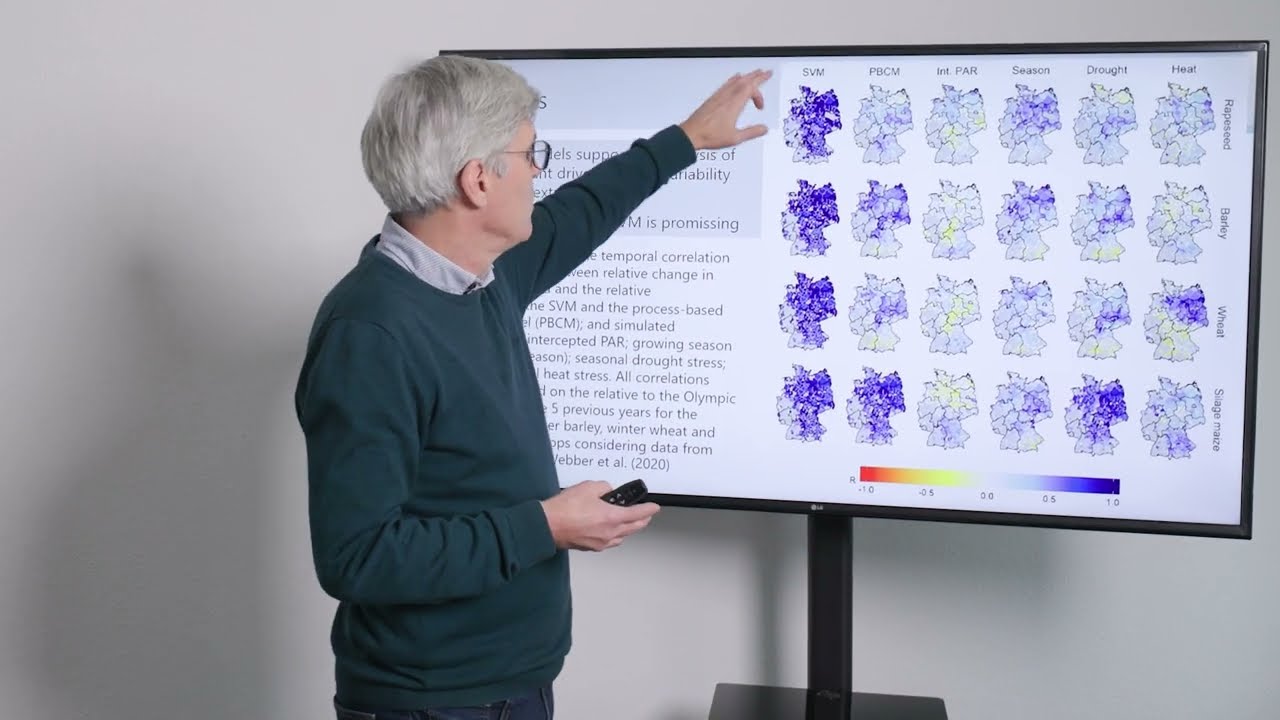

Estimating yield responses to climate variability and extreme events

Prof. Dr. Frank Ewert is Professor and head of the Crop Science Group, Institute of Crop Science and Resource Conservation (INRES), University of Bonn, and Scientific Director of the Leibniz Centre for Agricultural Landscape Research (ZALF). Webber H, Lischeid G, Sommer M, Finger R, Nendel C, Gaiser T, Ewert F. 2020. No perfect storm for crop yield failure in Germany. Environmental Research Letters 15 (10): 104012. doi: https://iopscience.iop.org/article/10.1088/1748-9326/aba2a4 Lischeid G, Webber H, Sommer M, Nendel C, Ewert F. 2022. Machine learning in crop yield modelling: A powerful tool, but no surrogate for science. Agricultural and Forest Meteorology 312, 108698. doi: https://doi.org/10.1016/j.agrformet.2021.108698

PermutoSDF: Fast Multi-View Reconstruction with Implicit Surfaces using Permutohedral Lattices

Neural radiance-density field methods have become increasingly popular for the task of novel-view rendering. Their recent extension to hash-based positional encoding ensures fast training and inference with visually pleasing results. However, density-based methods struggle with recovering accurate surface geometry. Hybrid methods alleviate this issue by optimizing the density based on an underlying SDF. However, current SDF methods are overly smooth and miss fine geometric details. In this work, we combine the strengths of these two lines of work in a novel hash-based implicit surface representation. We propose improvements to the two areas by replacing the voxel hash encoding with a permutohedral lattice which optimizes faster, especially for higher dimensions. We additionally propose a regularization scheme which is crucial for recovering high-frequency geometric detail. We evaluate our method on multiple datasets and show that we can recover geometric detail at the level of pores and wrinkles while using only RGB images for supervision. Furthermore, using sphere tracing we can render novel views at 30 fps on an RTX 3090. The paper, animations, and code are available at https://radualexandru.github.io/permuto_sdf
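Sphere tracing, mentioned above as the rendering method, marches a ray forward by the signed distance at each sample, since that distance is a safe step that cannot overshoot the surface. The sketch below shows the generic algorithm on an analytic unit sphere; it is not the PermutoSDF renderer, and the names `sphere_sdf` and `sphere_trace` as well as the step and tolerance parameters are assumptions for illustration.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=128, hit_eps=1e-4, max_dist=100.0):
    """Return the ray parameter t of the first surface hit, or None on a miss."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * u for o, u in zip(origin, unit)]
        distance = sdf(p)
        if distance < hit_eps:
            return t  # close enough to the surface
        t += distance  # safe step: no surface is closer than `distance`
        if t > max_dist:
            break
    return None


if __name__ == "__main__":
    print(sphere_trace((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf))
    print(sphere_trace((0.0, 0.0, -3.0), (0.0, 1.0, 0.0), sphere_sdf))
```

In a learned setting the analytic `sphere_sdf` would be replaced by a network query, which is why the number of steps per ray matters for frame rate.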

Explicitly Incorporating Spatial Information to Recurrent Networks for Agriculture

Claus Smitt is a PhD student at the Agricultural Robotics & Engineering department of the University of Bonn. Smitt, C., Halstead, M., Ahmadi, A., and McCool, C., “Explicitly incorporating spatial information to recurrent networks for agriculture”. IEEE Robotics and Automation Letters, 7(4), 10017-10024. doi: 10.48550/arXiv.2206.13406

NBV-SC: Next Best View Planning based on Shape Completion for Fruit Mapping and Reconstruction

This video demonstrates the work presented in our paper “NBV-SC: Next Best View Planning based on Shape Completion for Fruit Mapping and Reconstruction” by R. Menon, T. Zaenker, N. Dengler and M. Bennewitz, submitted to the International Conference on Intelligent Robots and Systems (IROS), 2023. State-of-the-art viewpoint planning approaches utilize computationally expensive ray casting operations to find the next best viewpoint. In our paper, we present a novel viewpoint planning approach that explicitly uses information about the predicted fruit shapes to compute targeted viewpoints that observe as yet unobserved parts of the fruits. Furthermore, we formulate the concept of viewpoint dissimilarity to reduce the sampling space for more efficient selection of useful, dissimilar viewpoints. In comparative experiments with a state-of-the-art viewpoint planner, we demonstrate improvement not only in the estimation of the fruit sizes, but also in their reconstruction, while significantly reducing the planning time. Finally, we show the viability of our approach for mapping sweet pepper plants with a real robotic system in a commercial glasshouse. Paper link: https://arxiv.org/abs/2209.15376

Talk by A. Riccardi: Fruit Tracking Over Time Using High-Precision Point Clouds (ICRA’23)

ICRA’23 Talk about the paper: A. Riccardi, S. Kelly, E. Marks, F. Magistri, T. Guadagnino, J. Behley, M. Bennewitz, and C. Stachniss, “Fruit Tracking Over Time Using High-Precision Point Clouds,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/riccardi2023icra.pdf

Talk by S. Li: Multi-scale Interaction for Real-time LiDAR Data Segmentation … (RAL-ICRA’23)

ICRA’23 Talk about the paper: S. Li, X. Chen, Y. Liu, D. Dai, C. Stachniss, and J. Gall, “Multi-scale Interaction for Real-time LiDAR Data Segmentation on an Embedded Platform,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 2, pp. 738-745, 2022. doi:10.1109/LRA.2021.3132059 PAPER: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/li2022ral.pdf CODE: https://github.com/sj-li/MINet

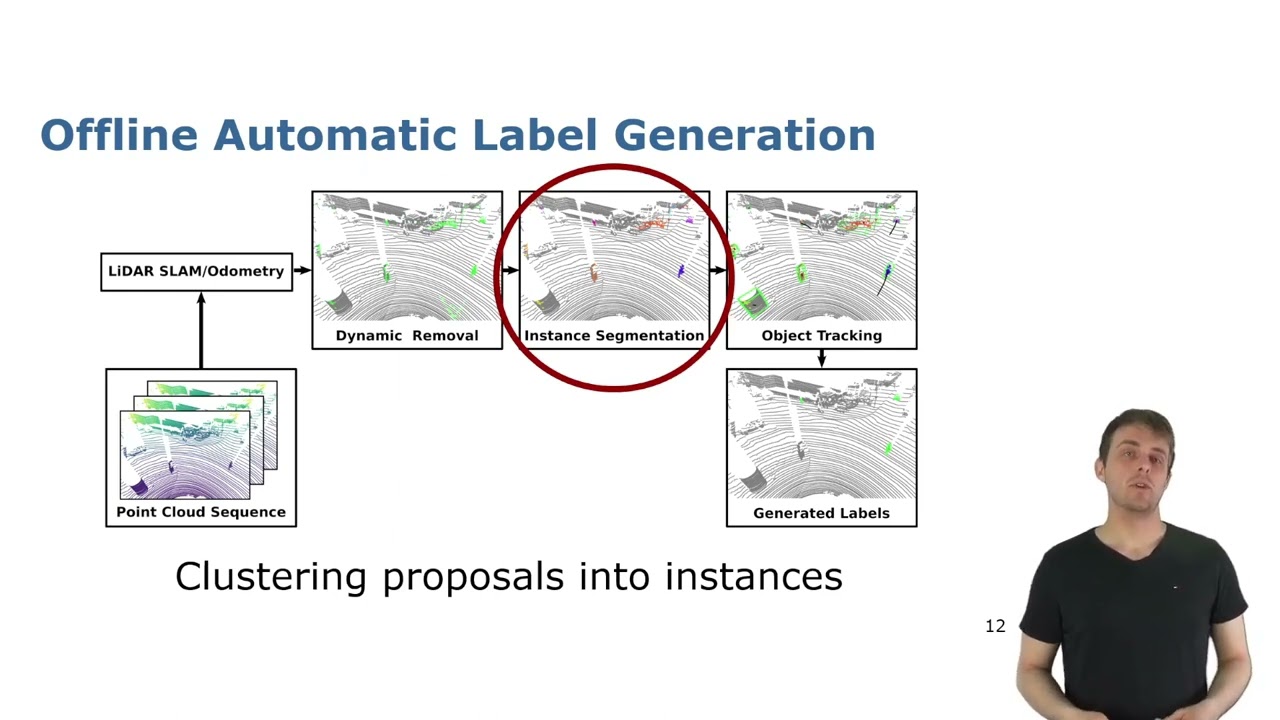

Talk by B. Mersch: Automatic Labeling to Generate Training Data for Online LiDAR MOS (RAL-ICRA’23)

ICRA 2023 Talk for the paper: X. Chen, B. Mersch, L. Nunes, R. Marcuzzi, I. Vizzo, J. Behley, and C. Stachniss, “Automatic Labeling to Generate Training Data for Online LiDAR-Based Moving Object Segmentation,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 6107-6114, 2022. doi:10.1109/LRA.2022.3166544 PDF: http://arxiv.org/pdf/2201.04501 CODE: https://github.com/PRBonn/auto-mos

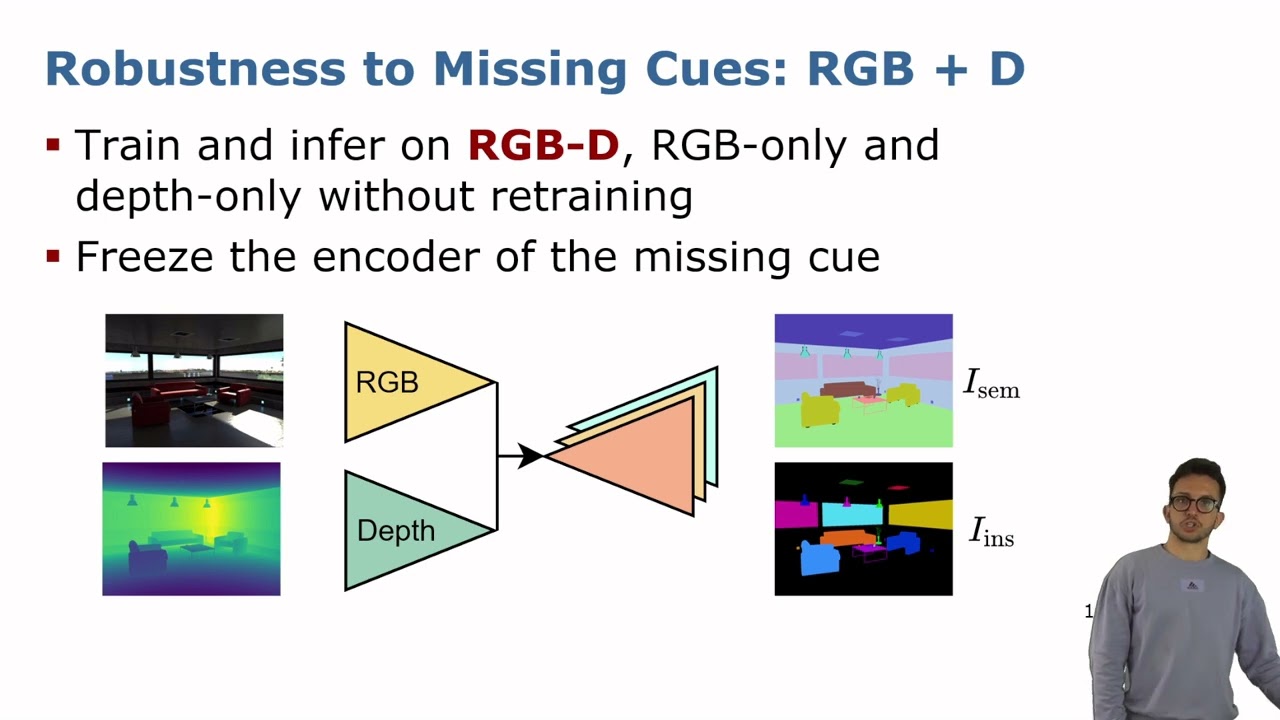

Talk by M. Sodano: Robust Double-Encoder Network for RGB-D Panoptic Segmentation, (ICRA’23)

ICRA’23 Talk about the paper: M. Sodano, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Robust Double-Encoder Network for RGB-D Panoptic Segmentation,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/sodano2023icra.pdf CODE: https://github.com/PRBonn/PS-res-excite

Talk by S. Kelly: TAIM – Target-Aware Implicit Mapping for Agricultural Crop Inspection (ICRA’23)

ICRA 2023 Talk by Shane Kelly for the paper: S. Kelly, A. Riccardi, E. Marks, F. Magistri, T. Guadagnino, M. Chli, and C. Stachniss, “Target-Aware Implicit Mapping for Agricultural Crop Inspection,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2023.

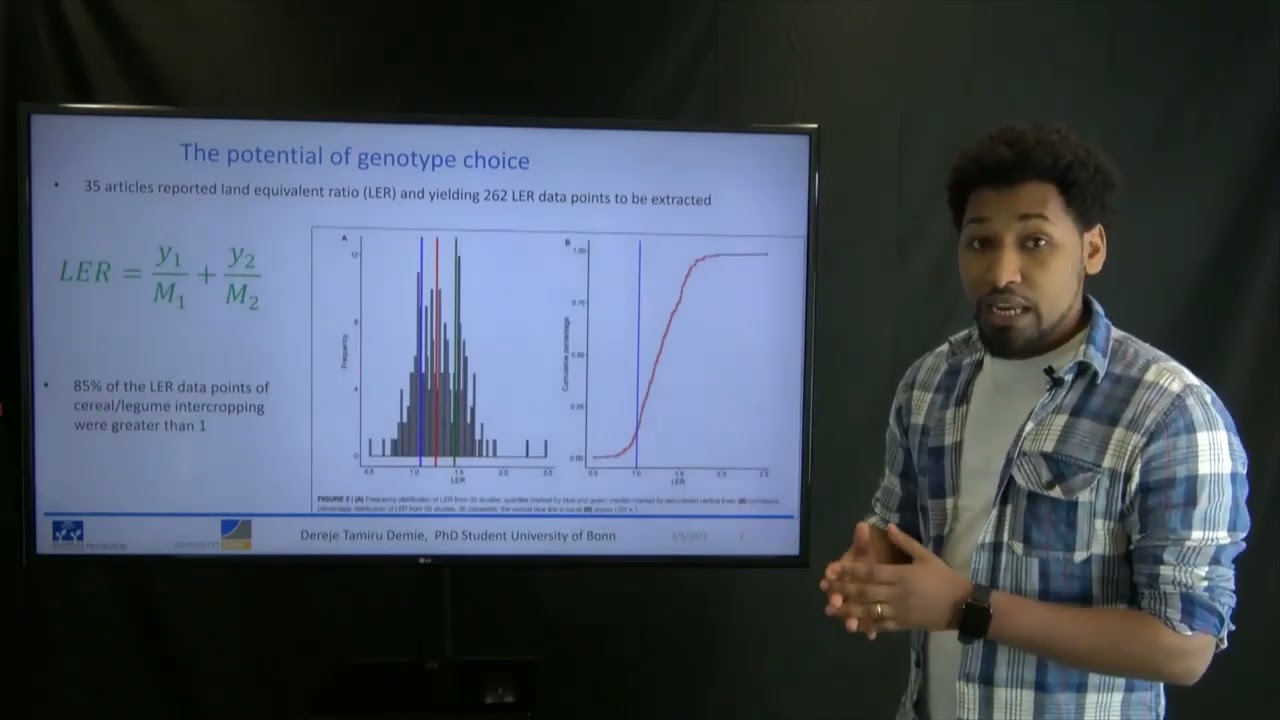

Mixture X Genotype Effects in Cereal/Legume Intercropping by Demie et al.

This short trailer is based on the following publication: D. Demie, T. Döring, M. Finckh, W. van der Werf, J. Enjalbert, and S. Seidel, “Mixture X Genotype Effects in Cereal/Legume Intercropping,” Frontiers in Plant Science, vol. 13, 2022. doi:10.3389/fpls.2022.846720 Full text available here: https://www.frontiersin.org/articles/10.3389/fpls.2022.846720/full

On Domain-Specific Pre-Training for Effective Semantic Perception in Agricult. Robotics (Roggiolani)

This short trailer is based on the following publication: G. Roggiolani, F. Magistri, T. Guadagnino, G. Grisetti, C. Stachniss, and J. Behley, “On Domain-Specific Pre-Training for Effective Semantic Perception in Agricultural Robotics,” Proceedings of the IEEE International Conference on Robotics & Automation (ICRA), 2023.

Hierarchical Approach for Joint Semantic, Plant & Leaf Instance Segmentation in the Agricult. Domain

This short trailer is based on the following publication: G. Roggiolani, M. Sodano, F. Magistri, T. Guadagnino, J. Behley, and C. Stachniss, “Hierarchical Approach for Joint Semantic, Plant Instance, and Leaf Instance Segmentation in the Agricultural Domain,” in Proceedings of the IEEE International Conference on Robotics & Automation (ICRA), 2023.

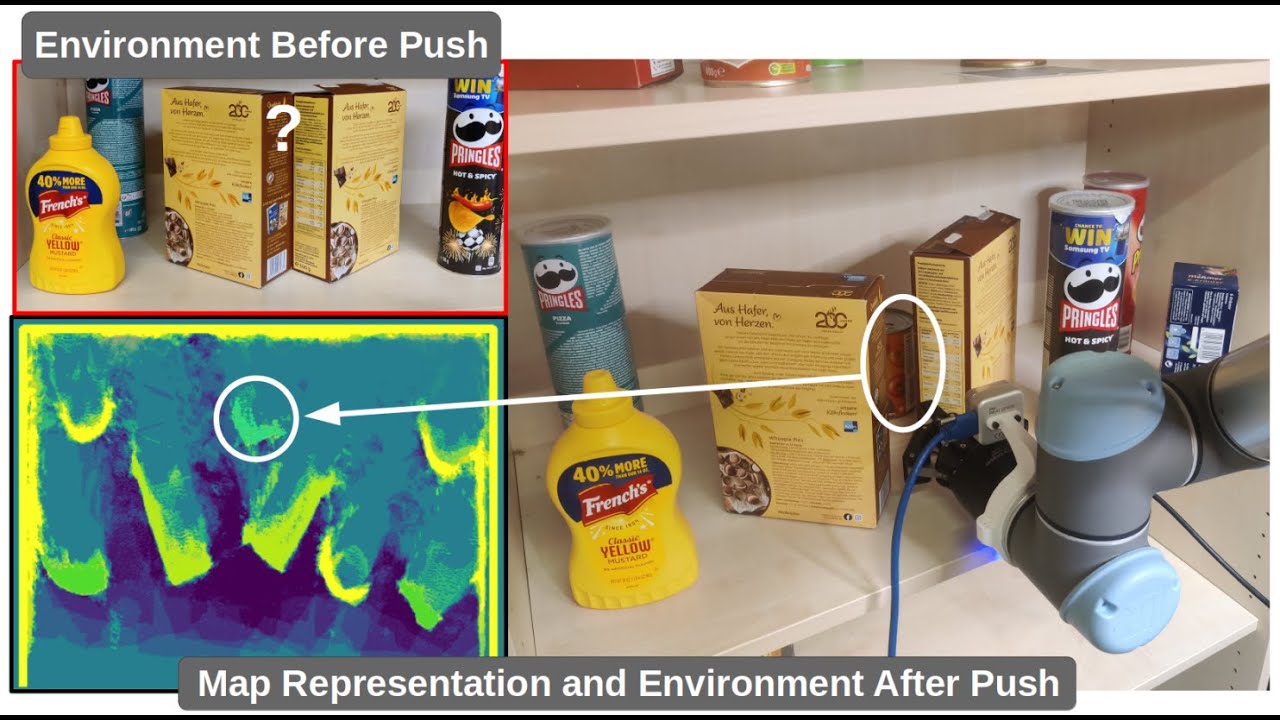

Viewpoint Push Planning for Mapping of Unknown Confined Spaces

This video demonstrates the work presented in our paper “Viewpoint Push Planning for Mapping of Unknown Confined Spaces” by N. Dengler, S. Pan, V. Kalagaturu, R. Menon, M. Dawood and Maren Bennewitz, submitted to the International Conference on Intelligent Robots and Systems (IROS), 2023. Paper link: https://arxiv.org/pdf/2303.03126.pdf The mapping of confined spaces such as shelves is an especially challenging task in the domain of viewpoint planning, since objects occlude each other and the scene can only be observed from the front, thus with limited possible viewpoints. In this video, we show our deep reinforcement learning framework that generates promising views aiming at reducing the map entropy. Additionally, the pipeline extends standard viewpoint planning by predicting adequate minimally invasive push actions to uncover occluded objects and increase the visible space. Using a 2.5D occupancy height map as state representation that can be efficiently updated, our system decides whether to plan a new viewpoint or perform a push. As the real-world experimental results with a robotic arm show, our system is able to significantly increase the mapped space compared to different baselines, while the executed push actions highly benefit the viewpoint planner with only minor changes to the object configuration. Code: https://github.com/NilsDengler/view-point-pushing
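The description above says the generated views aim at reducing the map entropy. As a rough illustration of that quantity only (not the learned policy from the paper), the sketch below computes the Shannon entropy of a small occupancy grid. The function names, the grid values, and the choice of bits as the unit are assumptions made for this example.

```python
import math


def cell_entropy(p: float) -> float:
    """Binary Shannon entropy (in bits) of one cell's occupancy probability."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully known cell carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def map_entropy(grid: list[list[float]]) -> float:
    """Sum of per-cell entropies; lower means the map is better known."""
    return sum(cell_entropy(p) for row in grid for p in row)


# Hypothetical 2x2 map: every cell unknown (p = 0.5) before any observation.
unknown = [[0.5, 0.5], [0.5, 0.5]]
# The same map after a view that resolved the top row (assumed values).
observed = [[0.0, 1.0], [0.5, 0.5]]

print(map_entropy(unknown))   # 4.0
print(map_entropy(observed))  # 2.0
```

A view or push that uncovers occluded cells moves their probabilities away from 0.5, which lowers this sum.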

Graph-based View Motion Planning for Fruit Detection

This video demonstrates the work presented in our paper “Graph-based View Motion Planning for Fruit Detection” by T. Zaenker, J. Rückin, R. Menon, M. Popović, and M. Bennewitz, submitted to the International Conference on Intelligent Robots and Systems (IROS), 2023. Paper link: https://arxiv.org/abs/2303.03048 The view motion planner generates view pose candidates from targets to find new and cover partially detected fruits and connects them to create a graph of efficiently reachable and information-rich poses. That graph is searched to obtain the path with the highest estimated information gain and updated with the collected observations to adaptively target new fruit clusters. Therefore, it can explore segments in a structured way to optimize fruit coverage with a limited time budget. The video shows the planner applied in a commercial glasshouse environment and in a simulation designed to mimic our real-world setup, which we used to evaluate the performance. Code: https://github.com/Eruvae/view_motion_planner

Crop Agnostic Monitoring Using Deep Learning

Prof. Dr. Chris McCool is Professor of Applied Computer Vision and Robotic Vision, head of the Agricultural Robotics and Engineering department at the University of Bonn. M. Halstead, A. Ahmadi, C. Smitt, O. Schmittmann, C. McCool, “Crop Agnostic Monitoring Driven by Deep Learning”, in Front. Plant Sci. 12:786702. doi: 10.3389/fpls.2021.786702

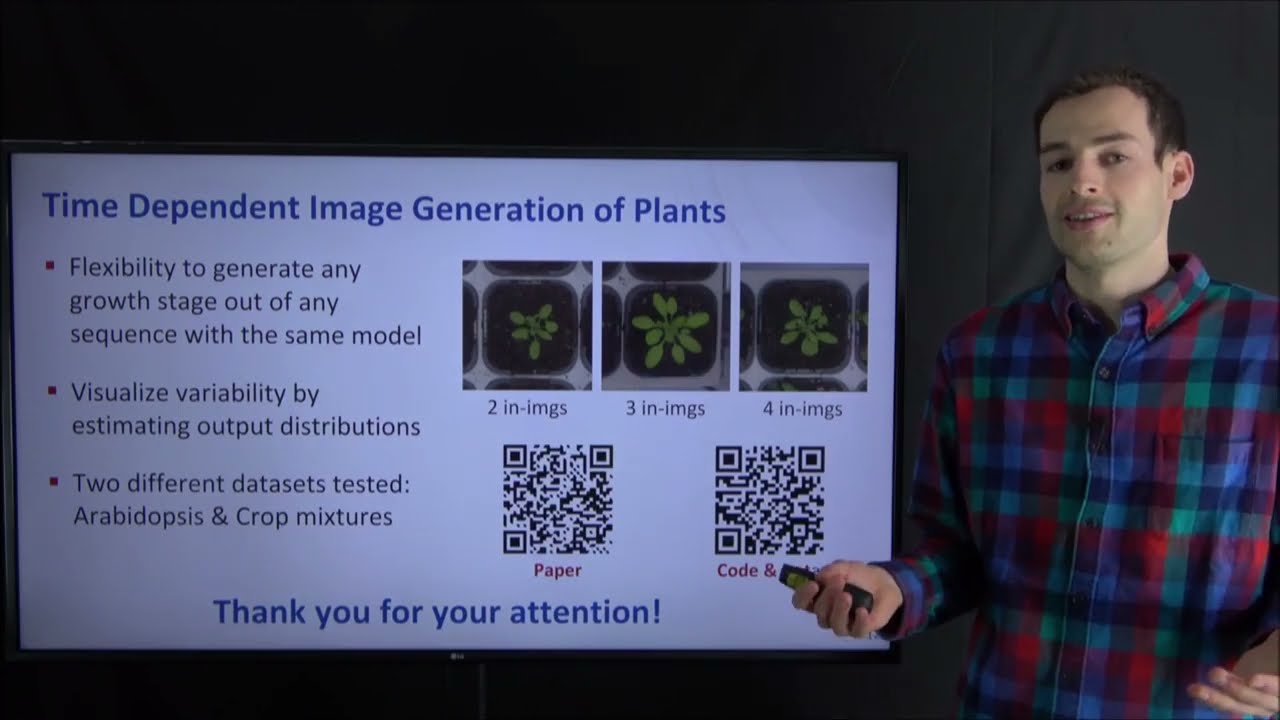

Time Dependent Image Generation of Plants from Incomplete Sequences with CNN-Transformer by L. Drees

This short trailer is based on the following publication: L. Drees, I. Weber, M. Russwurm, and R. Roscher, “Time Dependent Image Generation of Plants from Incomplete Sequences with CNN-Transformer,” in DAGM German Conference on Pattern Recognition, 2022, pp. 495-510. doi:10.1007/978-3-031-16788-1_30 Full text available here: https://link.springer.com/chapter/10.1007/978-3-031-16788-1_30

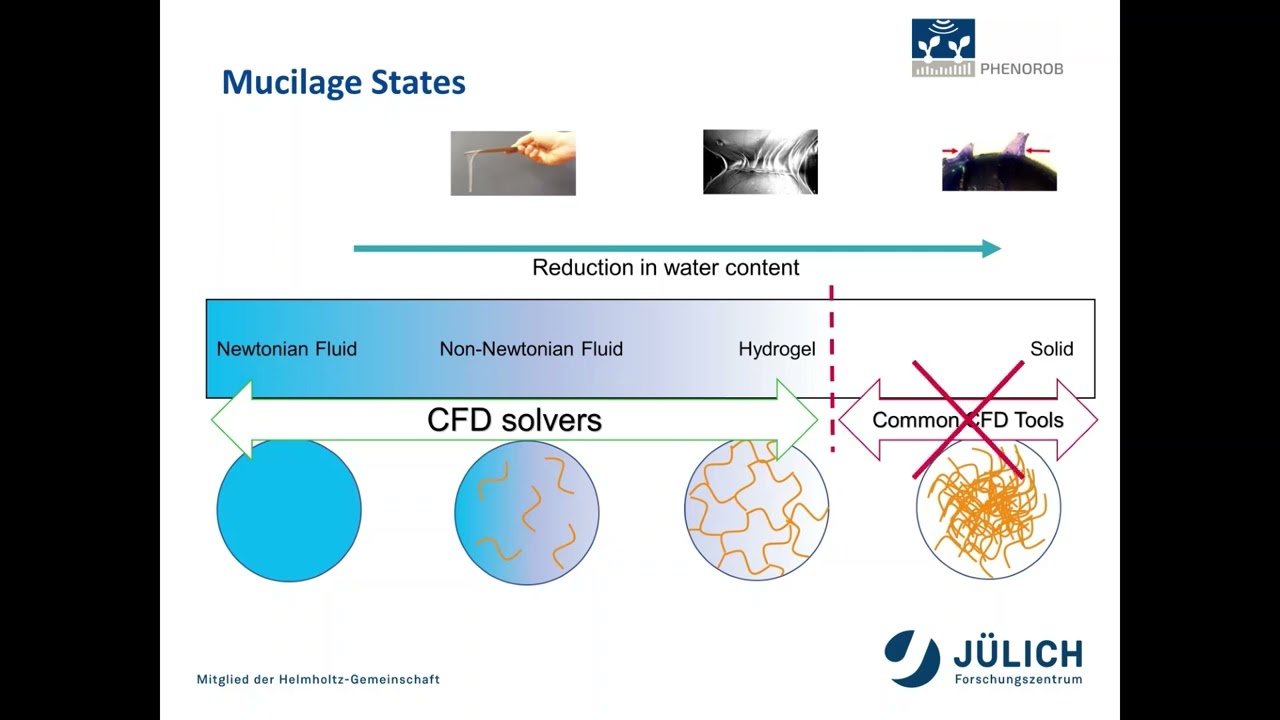

Pore-scale simulation of mucilage drainage by Omid Esmaeelipoor Jahromi et al.

This short trailer video is based on the following publication: O. Esmaeelipoor Jahromi, M. Knott, R. K. Janakiram, R. Rahim, and E. Kroener, “Pore-scale simulation of mucilage drainage,” Vadose Zone Journal, vol. e20218, pp. 1-13, 2022. doi:10.1002/vzj2.20218

Controlled Multi-modal Image Generation for Plant Growth Modeling

Prof. Dr. Ribana Roscher is Professor of Data Science for Crop Systems, Institute of Bio- and Geosciences (IBG-2) at Forschungszentrum Jülich and Institute of Geodesy and Geoinformation (IGG), University of Bonn. M. Miranda, L. Drees and R. Roscher, “Controlled Multi-modal Image Generation for Plant Growth Modeling,” in 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 2022, pp. 5118-5124. doi: 10.1109/ICPR56361.2022.9956115

Large-eddy Simulation of Soil Moisture Heterogeneity Induced Secondary Circulation with Ambient Wind

This video is based on the following publication: L. Zhang, S. Poll, and S. Kollet, “Large-eddy Simulation of Soil Moisture Heterogeneity Induced Secondary Circulation with Ambient Winds,” Quarterly Journal of the Royal Meteorological Society, 2022. doi:10.5194/egusphere-egu22-5533

Hypermap Mapping Framework and its Application to Autonomous Semantic Exploration by T Zaenker et al

This paper trailer video is based on the following publication: T. Zaenker, F. Verdoja, and V. Kyrki, “Hypermap Mapping Framework and its Application to Autonomous Semantic Exploration,” in 2020 IEEE Conference on Multisensor Fusion and Integration, 2020. To learn more, check out the full publication here: https://acris.aalto.fi/ws/portalfiles/portal/53865812/ELEC_Zaenker_etal_Hypermap_Mapping_Framework_MFI2020_acceptedauthormanuscript.pdf

Innovation context & technology traits explain heterogeneity across studies of agri. tech. adoption

This PhenoRob paper trailer is based on the following publication: Schulz, Dario & Börner, Jan, “Innovation context and technology traits explain heterogeneity across studies of agricultural technology adoption: A meta-analysis,” Journal of Agricultural Economics, 2022. DOI: 10.1111/1477-9552.12521.

Explicitly Incorporating Spatial Information to Recurrent Networks for Agriculture

IROS 2022 Best Paper Award on Agri-Robotics – Sponsored by YANMAR “Explicitly Incorporating Spatial Information to Recurrent Networks for Agriculture,” by Claus Smitt, Michael Allan Halstead, Alireza Ahmadi, and Christopher Steven McCool from University of Bonn. Read more: https://events.infovaya.com/presentation?id=86839 More robot videos from IROS: https://spectrum.ieee.org/robot-videos-iros-award-winners #ieee #engineering #robotics

[ECCV 2022 Oral] Adaptive Token Sampling for Efficient Vision Transformers

This is the 5-minute video for our ECCV 2022 paper: “Adaptive Token Sampling for Efficient Vision Transformers” Project Page: https://adaptivetokensampling.github.io/ Paper: https://arxiv.org/pdf/2111.15667.pdf Poster: https://github.com/adaptivetokensampling/adaptivetokensampling.github.io/raw/main/assets/ATS_ECCV_Poster.pdf Code: https://github.com/adaptivetokensampling/ATS Authors: Mohsen Fayyaz*, Soroush Abbasi Koohpayegani*, Farnoush Rezaei-Jafari*, Sunando Sengupta, Hamid-Reza Vaezi-Joze, Eric Sommerlade, Hamed Pirsiavash, Juergen Gall, (* equal contributions). Microsoft, UC Davis, Technical University of Berlin, BIFOLD, University of Bonn Abstract: While state-of-the-art vision transformer models achieve promising results in image classification, they are computationally expensive and require many GFLOPs. Although the GFLOPs of a vision transformer can be decreased by reducing the number of tokens in the network, there is no setting that is optimal for all input images. In this work, we, therefore, introduce a differentiable parameter-free Adaptive Token Sampler (ATS) module, which can be plugged into any existing vision transformer architecture. ATS empowers vision transformers by scoring and adaptively sampling significant tokens. As a result, the number of tokens is not constant anymore and varies for each input image. By integrating ATS as an additional layer within the current transformer blocks, we can convert them into much more efficient vision transformers with an adaptive number of tokens. Since ATS is a parameter-free module, it can be added to the off-the-shelf pre-trained vision transformers as a plug-and-play module, thus reducing their GFLOPs without any additional training. Moreover, due to its differentiable design, one can also train a vision transformer equipped with ATS. We evaluate the efficiency of our module in both image and video classification tasks by adding it to multiple SOTA vision transformers. 
Our proposed module improves the SOTA by reducing their computational costs (GFLOPs) by 2 times, while preserving their accuracy on the ImageNet, Kinetics-400, and Kinetics-600 datasets.
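The abstract describes scoring tokens and adaptively sampling the significant ones, so that the number of kept tokens varies per input. The sketch below illustrates that idea in plain Python. It is not the authors' implementation: the scores are arbitrary non-negative numbers supplied by the caller, and the use of inverse-transform sampling at evenly spaced points is an assumption of this example. See the linked code repository for the actual module.

```python
import bisect


def adaptive_token_sample(scores: list[float], max_tokens: int) -> list[int]:
    """Return indices of the tokens to keep (sketch, assumed scheme).

    Builds a cumulative distribution over the token scores, reads it at
    max_tokens evenly spaced points, and keeps each token that is hit at
    least once. Tokens with large scores absorb several points, so fewer
    than max_tokens tokens may survive.
    """
    total = sum(scores)
    if total <= 0:
        raise ValueError("scores must contain a positive value")

    cdf = []
    running = 0.0
    for s in scores:
        running += s / total
        cdf.append(running)

    kept = set()
    for k in range(max_tokens):
        u = (2 * k + 1) / (2 * max_tokens)  # evenly spaced in (0, 1)
        idx = min(bisect.bisect_left(cdf, u), len(scores) - 1)
        kept.add(idx)
    return sorted(kept)


# One dominant token: only two of the four tokens are kept.
print(adaptive_token_sample([7, 1, 1, 1], max_tokens=4))  # [0, 2]
# Uniform scores: all four tokens are kept.
print(adaptive_token_sample([1, 1, 1, 1], max_tokens=4))  # [0, 1, 2, 3]
```

The two calls show the property the abstract emphasizes: the same budget yields a different token count depending on the input.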

Djamei: Many ways to TOPLESS – manipulation of plant auxin signaling by a cluster of fungal effectors

Prof. Dr. Armin Djamei is Professor of Plant Pathology, Institute of Crop Science and Resource Conservation (INRES) at the University of Bonn. Bindics, J., Khan, M., Uhse, S., Kogelmann, B., Baggely, L., Reumann, D., Ingole, K.D., Stirnberg, A., Rybecky, A., Darino, M., Navarrete, F., Doehlemann, G. and Djamei, A. (2022), Many ways to TOPLESS – manipulation of plant auxin signalling by a cluster of fungal effectors. New Phytologist. https://doi.org/10.1111/nph.18315

Farmers’ acceptance of results-based agri-environmental schemes: A German perspective

This PhenoRob paper trailer is based on the following publication: A. Massfeller, M. Meraner, S. Huettel, and R. Uehleke, “Farmers’ acceptance of results-based agri-environmental schemes: A German perspective,” Land Use Policy, vol. 120, 2022. doi:10.1016/j.landusepol.2022.106281

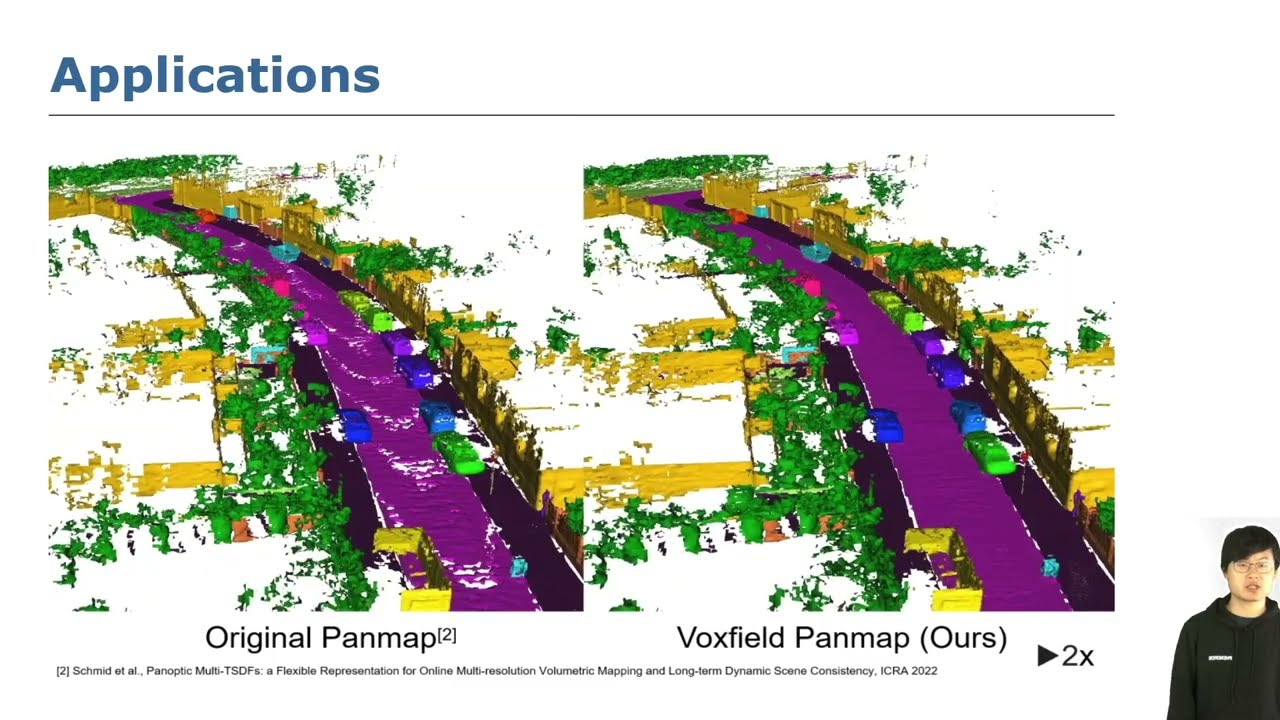

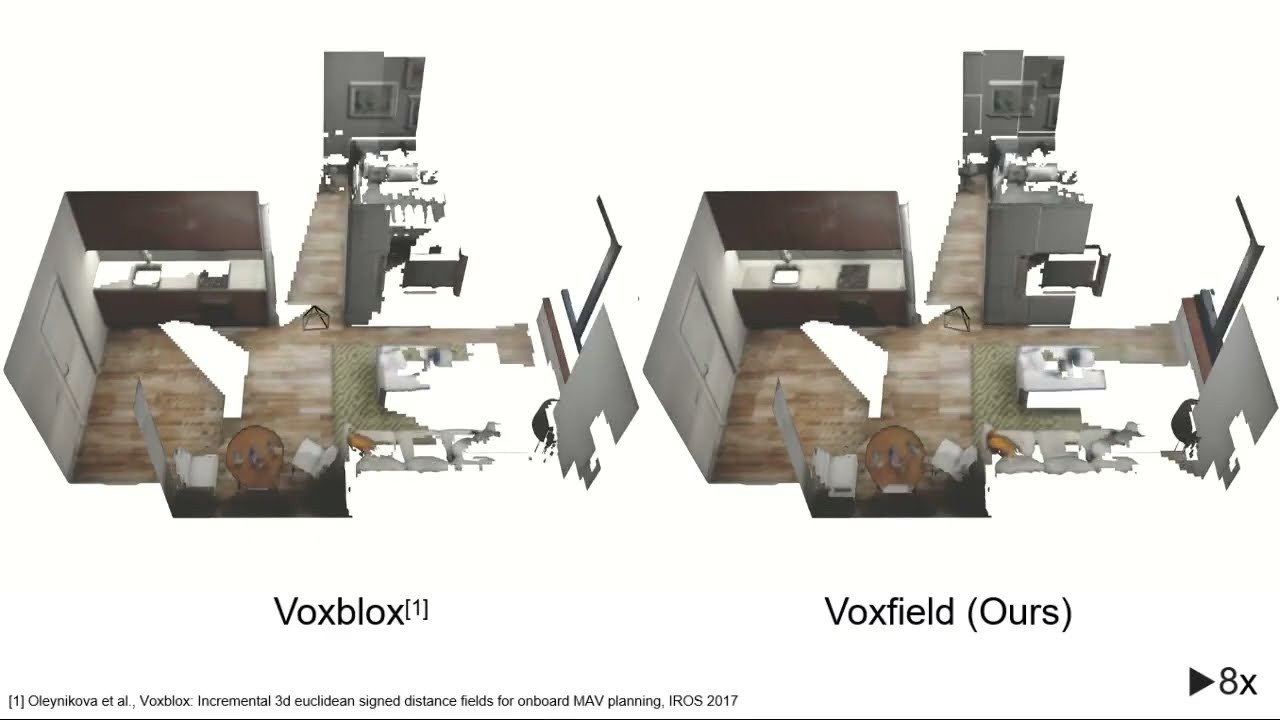

IROS 2022- Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction

Video of the presentation at IROS 2022 of the paper “Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction” by Yue Pan, Yves Kompis, Luca Bartolomei, Ruben Mascaro, Cyrill Stachniss and Margarita Chli Paper Link – https://www.research-collection.ethz.ch/handle/20.500.11850/560719 Code Link – https://github.com/VIS4ROB-lab/voxfield Abstract – Creating accurate maps of complex, unknown environments is of utmost importance for truly autonomous navigation robot. However, building these maps online is far from trivial, especially when dealing with large amounts of raw sensor readings on a computation and energy constrained mobile system, such as a small drone. While numerous approaches tackling this problem have emerged in recent years, the mapping accuracy is often sacrificed as systematic approximation errors are tolerated for efficiency’s sake. Motivated by these challenges, we propose Voxfield, a mapping framework that can generate maps online with higher accuracy and lower computational burden than the state of the art. Built upon the novel formulation of non-projective truncated signed distance fields (TSDFs), our approach produces more accurate and complete maps, suitable for surface reconstruction. Additionally, it enables efficient generation of Euclidean signed distance fields (ESDFs), useful e.g., for path planning, that does not suffer from typical approximation errors. Through a series of experiments with public datasets, both real-world and synthetic, we demonstrate that our method beats the state of the art in map coverage, accuracy and computational time. Moreover, we show that Voxfield can be utilized as a back-end in recent multi-resolution mapping frameworks, producing high quality maps even in large-scale experiments. Finally, we validate our method by running it onboard a quadrotor, showing it can generate accurate ESDF maps usable for real-time path planning and obstacle avoidance.

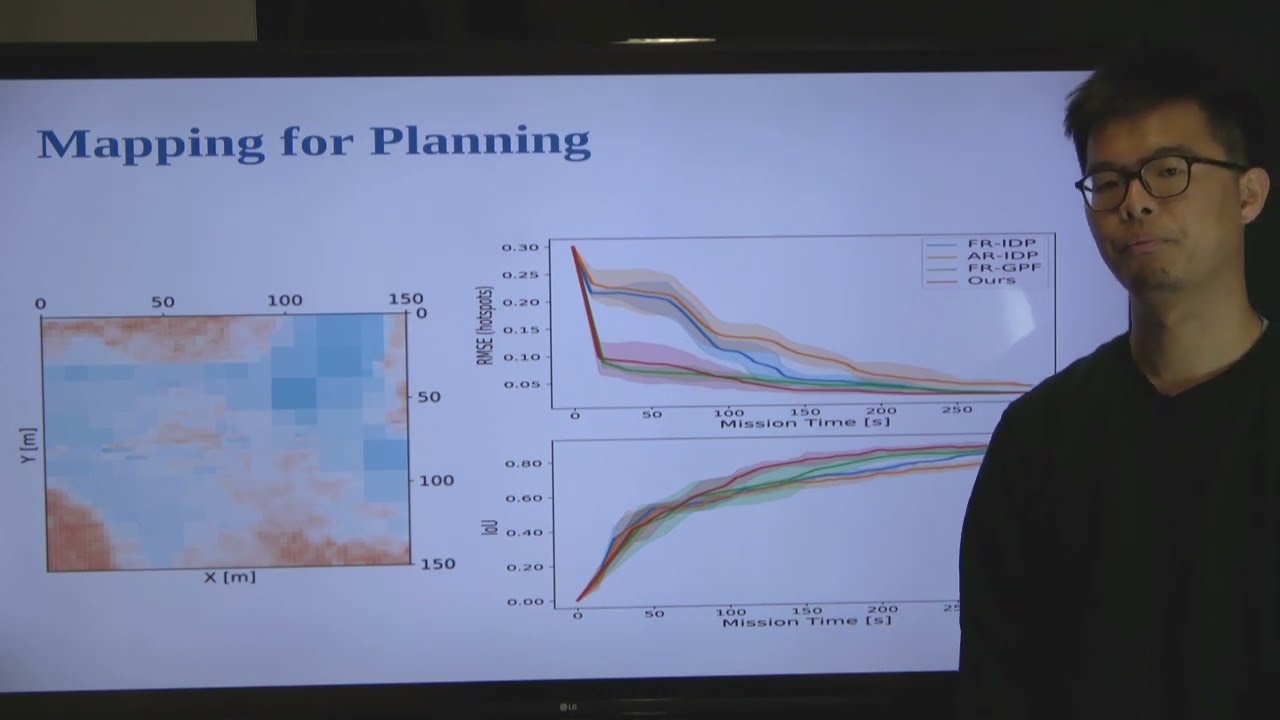

Adaptive-Resolution Field Mapping Using Gaussian Process Fusion With Integral Kernels by L.Jin et al

This short paper trailer is based on the following publication: L. Jin, J. Rückin, S. H. Kiss, T. Vidal-Calleja, and M. Popović, “Adaptive-Resolution Field Mapping Using Gaussian Process Fusion With Integral Kernels,” IEEE Robotics and Automation Letters, vol. 7, pp. 7471-7478, 2022. doi:10.1109/LRA.2022.3183797

RAL-IROS22: Adaptive-Resolution Field Mapping Using GP Fusion with Integral Kernels, Jin et al.

Jin, L., Rückin, J., Kiss, S. H., Vidal-Calleja, T., and Popović, M., “Adaptive-Resolution Field Mapping Using GP Fusion with Integral Kernels,” IEEE Robotics and Automation Letters (RA-L), vol. 7, pp. 7471-7478, 2022. doi: 10.1109/LRA.2022.3183797 PDF: https://arxiv.org/abs/2109.14257

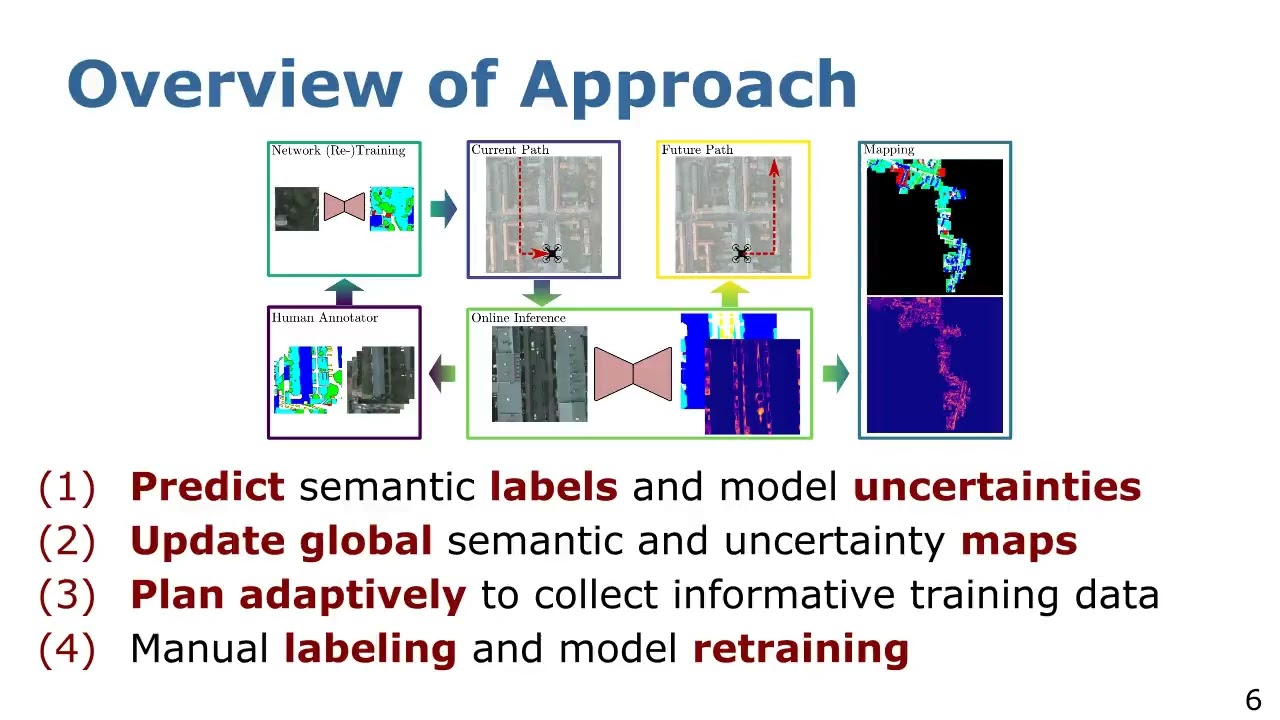

IROS22: Informative Path Planning for Active Learning in Aerial Semantic Mapping

Rückin, J., Jin, L., Magistri, F., Stachniss, C., and Popović, M., “Informative Path Planning for Active Learning in Aerial Semantic Mapping,” in Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2022. PDF: https://arxiv.org/abs/2203.01652 Code: https://github.com/dmar-bonn/ipp-al

Talk by J. Rückin: Informative Path Planning for Active Learning in Aerial Semantic Map… (IROS’22)

IROS 2022 talk by Julius Rückin about the paper J. Rückin, L. Jin, F. Magistri, C. Stachniss, and M. Popović, “Informative Path Planning for Active Learning in Aerial Semantic Mapping,” in Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2022. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/rueckin2022iros.pdf

ICRA22: Adaptive Informative Path Planning Using Deep RL for UAV-based Active Sensing, Rückin et al.

Rückin, J., Jin, L., and Popović, M., “Adaptive Informative Path Planning Using Deep RL for UAV-based Active Sensing,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2022. doi: 10.1109/ICRA46639.2022.9812025 PDF: https://arxiv.org/abs/2109.13570 Code: https://github.com/dmar-bonn/ipp-rl

Talk by F. Magistri: 3D Shape Completion and Reconstruction for Agricultural Robots (RAL-IROS’22)

IROS 2022 Talk by Federico Magistri on F. Magistri, E. Marks, S. Nagulavancha, I. Vizzo, T. Läbe, J. Behley, M. Halstead, C. McCool, and C. Stachniss, “Contrastive 3D Shape Completion and Reconstruction for Agricultural Robots using RGB-D Frames,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 4, pp. 10120-10127, 2022. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2022ral-iros.pdf

Talk by Y. Pan: Voxfield: Non-Projective Signed Distance Fields (IROS’22)

IROS 2022 Talk by Yue Pan about the paper: Y. Pan, Y. Kompis, L. Bartolomei, R. Mascaro, C. Stachniss, and M. Chli, “Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction,” in Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2022. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/pan2022iros.pdf Code: https://github.com/VIS4ROB-lab/voxfield

Talk by Ignacio Vizzo: Make it Dense – Dense Maps from Sparse Point Clouds (RAL-IROS’22)

IROS 2022 Talk by Ignacio Vizzo: “Make it Dense: Self-Supervised Geometric Scan Completion of Sparse 3D LiDAR Scans in Large Outdoor Environments” I. Vizzo, B. Mersch, R. Marcuzzi, L. Wiesmann, J. Behley, and C. Stachniss, “Make it Dense: Self-Supervised Geometric Scan Completion of Sparse 3D LiDAR Scans in Large Outdoor Environments,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 8534-8541, 2022. doi:10.1109/LRA.2022.3187255 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2022ral-iros.pdf Code: https://github.com/PRBonn/make_it_dense

Talk by L. Wiesmann: DCPCR – Deep Compressed Point Cloud Registration in Large Env… (RAL-IROS’22)

IROS’2022 Talk by Louis Wiesmann about the RAL-IROS’2022 paper: L. Wiesmann, T. Guadagnino, I. Vizzo, G. Grisetti, J. Behley, and C. Stachniss, “DCPCR: Deep Compressed Point Cloud Registration in Large-Scale Outdoor Environments,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 3, pp. 6327-6334, 2022. doi:10.1109/LRA.2022.3171068 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2022ral-iros.pdf Code: https://github.com/PRBonn/DCPCR

Sugar Beet Shoot and Root Phenotypic Plasticity by S. Hadir et al.

This short paper trailer video is based on the following publication: S. Hadir, T. Gaiser, H. Hüging, M. Athmann, D. Pfarr, R. Kemper, F. Ewert, and S. Seidel, “Sugar Beet Shoot and Root Phenotypic Plasticity to Nitrogen, Phosphorus, Potassium and Lime Omission,” Agriculture, vol. 11, iss. 1, 2021. Find more info here: https://www.mdpi.com/2077-0472/11/1/21

Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction

Video of the paper “Voxfield: Non-Projective Signed Distance Fields for Online Planning and 3D Reconstruction”, by Yue Pan, Yves Kompis, Luca Bartolomei, Ruben Mascaro, Cyrill Stachniss and Margarita Chli, IROS 2022. Abstract – Creating accurate maps of complex, unknown environments is of utmost importance for truly autonomous navigation robot. However, building these maps online is far from trivial, especially when dealing with large amounts of raw sensor readings on a computation and energy constrained mobile system, such as a small drone. While numerous approaches tackling this problem have emerged in recent years, the mapping accuracy is often sacrificed as systematic approximation errors are tolerated for efficiency’s sake. Motivated by these challenges, we propose Voxfield, a mapping framework that can generate maps online with higher accuracy and lower computational burden than the state of the art. Built upon the novel formulation of non-projective truncated signed distance fields (TSDFs), our approach produces more accurate and complete maps, suitable for surface reconstruction. Additionally, it enables efficient generation of Euclidean signed distance fields (ESDFs), useful e.g., for path planning, that does not suffer from typical approximation errors. Through a series of experiments with public datasets, both real-world and synthetic, we demonstrate that our method beats the state of the art in map coverage, accuracy and computational time. Moreover, we show that Voxfield can be utilized as a back-end in recent multi-resolution mapping frameworks, producing high quality maps even in large-scale experiments. Finally, we validate our method by running it onboard a quadrotor, showing it can generate accurate ESDF maps usable for real-time path planning and obstacle avoidance. Paper – https://www.research-collection.ethz.ch/handle/20.500.11850/560719 Code – https://github.com/VIS4ROB-lab/voxfield

Shortcut Hulls: Vertex-restricted Outer Simplifications of Polygons by A. Bonerath et al.

This short paper trailer video is based on the following publication: A. Bonerath, J. Haunert, J. S. B. Mitchell, and B. Niedermann, “Shortcut Hulls: Vertex-restricted Outer Simplifications of Polygons,” in Proceedings of the 33rd Canadian Conference on Computational Geometry, 2021, pp. 12-23.

LatticeNet: fast spatio-temporal point cloud segmentation using permutohedral lattices (Rosu et al.)

This short paper trailer video is based on the following publication: R. A. Rosu, P. Schütt, J. Quenzel, and S. Behnke, “LatticeNet: fast spatio-temporal point cloud segmentation using permutohedral lattices,” Autonomous Robots, pp. 1-16, 2021.

Cercospora leaf spot modeling in sugar beet by Ispizua, Barreto, Günder, Bauckhage & Mahlein

This short paper trailer video is based on the following publication: F. R. Ispizua Yamati, A. Barreto, A. Günder, C. Bauckhage, and A. -K. Mahlein, “Sensing the occurrence and dynamics of Cercospora leaf spot disease using UAV-supported image data and deep learning,” Sugar Industry, vol. 147, iss. 2, pp. 79-86, 2022. Find more info here: https://sugarindustry.info/paper/28345/ https://www.researchgate.net/publication/358243320_Sensing_the_occurrence_and_dynamics_of_Cercospora_leaf_spot_disease_using_UAV-supported_image_data_and_deep_learning

ICRA’22: Retriever: Point Cloud Retrieval in Compressed 3D Maps by Wiesmann et al.

L. Wiesmann, R. Marcuzzi, C. Stachniss, and J. Behley, “Retriever: Point Cloud Retrieval in Compressed 3D Maps,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2022. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2022icra.pdf #UniBonn #StachnissLab #robotics

RAL-ICRA’22: Joint Plant and Leaf Instance Segmentation on Field-Scale UAV Imagery by Weyler et al.

J. Weyler, J. Quakernack, P. Lottes, J. Behley, and C. Stachniss, “Joint Plant and Leaf Instance Segmentation on Field-Scale UAV Imagery,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 2, pp. 3787-3794, 2022. doi:10.1109/LRA.2022.3147462 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/weyler2022ral.pdf #UniBonn #StachnissLab #robotics

WACV’22: In-Field Phenotyping Based on Crop Leaf and Plant Instance Segmentation by Weyler et al.

J. Weyler, F. Magistri, P. Seitz, J. Behley, and C. Stachniss, “In-Field Phenotyping Based on Crop Leaf and Plant Instance Segmentation,” in Proc. of the Winter Conf. on Applications of Computer Vision (WACV), 2022. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/weyler2022wacv.pdf #UniBonn #StachnissLab #robotics

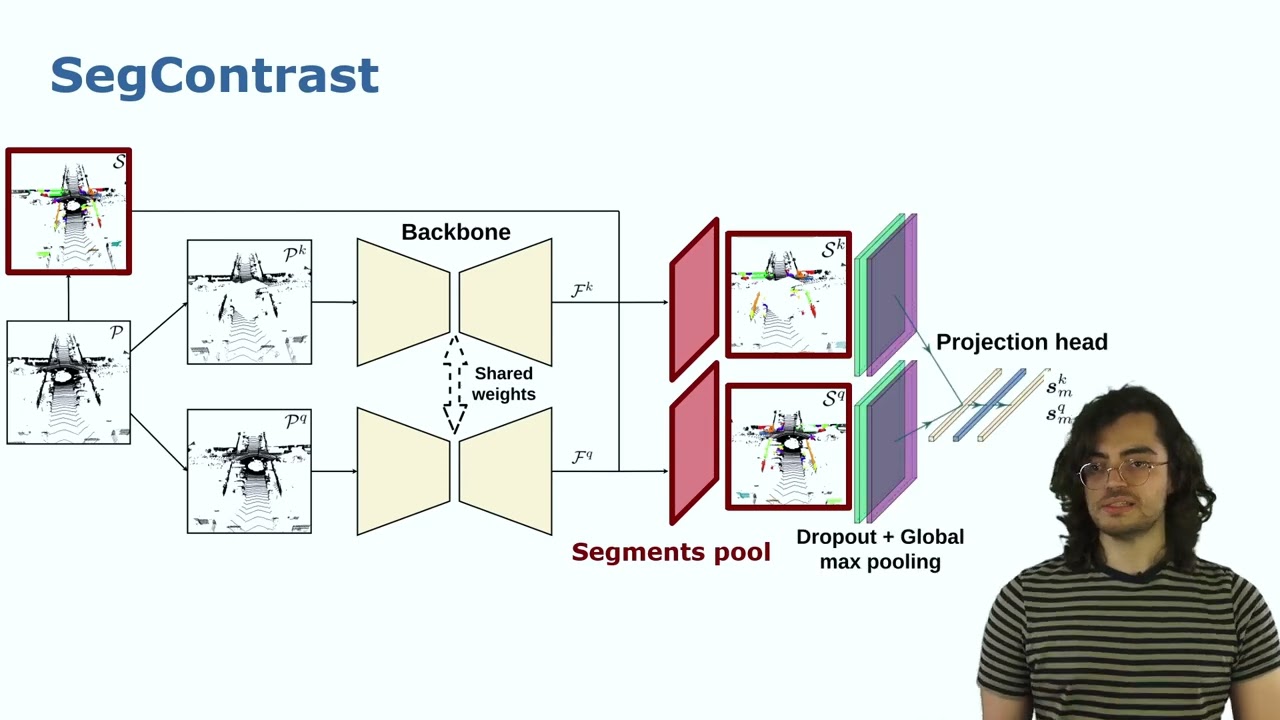

RAL-ICRA’22: SegContrast: 3D Point Cloud Feature Representation Learning … by Nunes et al.

L. Nunes, R. Marcuzzi, X. Chen, J. Behley, and C. Stachniss, “SegContrast: 3D Point Cloud Feature Representation Learning through Self-supervised Segment Discrimination,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 2, pp. 2116-2123, 2022. doi:10.1109/LRA.2022.3142440 PDF: http://www.ipb.uni-bonn.de/pdfs/nunes2022ral-icra.pdf CODE: https://github.com/PRBonn/segcontrast #UniBonn #StachnissLab #robotics

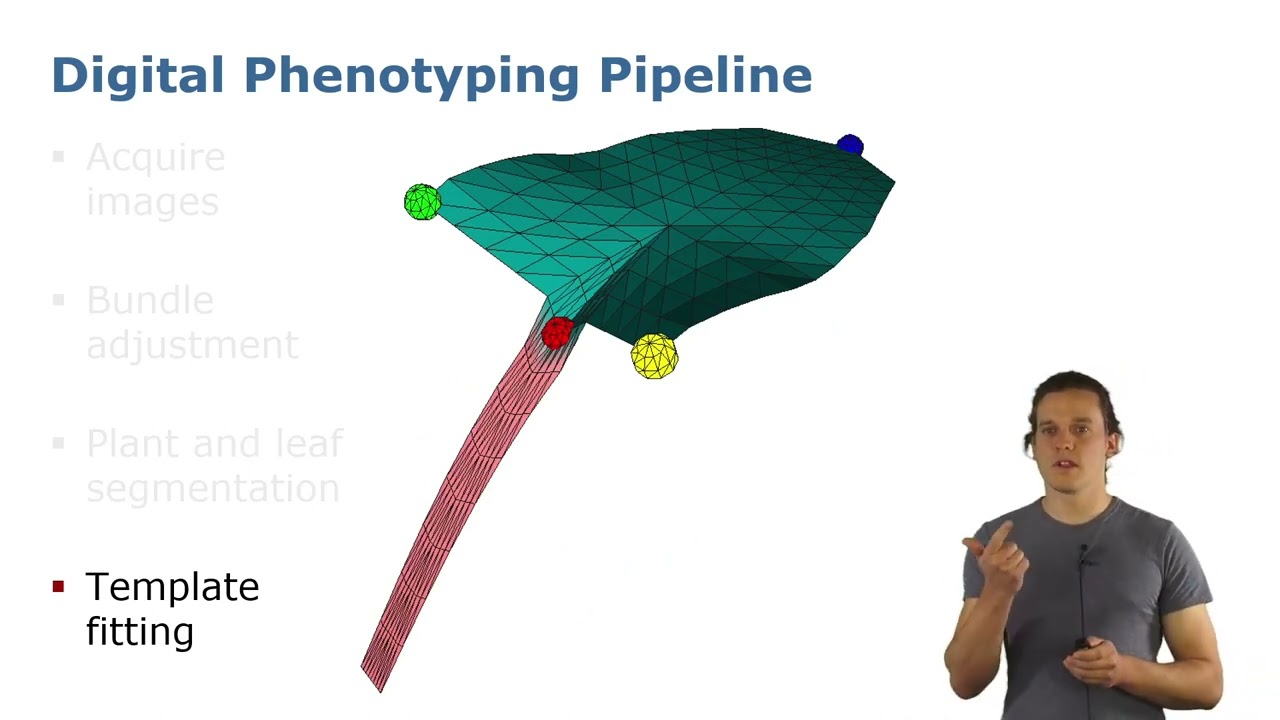

ICRA’22: Precise 3D Reconstruction of Plants from UAV Imagery … by Marks et al.

E. Marks, F. Magistri, and C. Stachniss, “Precise 3D Reconstruction of Plants from UAV Imagery Combining Bundle Adjustment and Template Matching,” in Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2022. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marks2022icra.pdf #UniBonn #StachnissLab #robotics

RAL-ICRA’22: Contrastive Instance Association for 4D Panoptic Segmentation… by Marcuzzi et al.

R. Marcuzzi, L. Nunes, L. Wiesmann, I. Vizzo, J. Behley, and C. Stachniss, “Contrastive Instance Association for 4D Panoptic Segmentation using Sequences of 3D LiDAR Scans,” IEEE Robotics and Automation Letters (RA-L), vol. 7, iss. 2, pp. 1550-1557, 2022. doi:10.1109/LRA.2022.3140439 PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/marcuzzi2022ral.pdf #UniBonn #StachnissLab #robotics

Jana Kierdorf – GrowliFlower: A time series dataset for GROWth analysis of cauLIFLOWER

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2022) • March 28-30, 2022 • http://digicrop.de/

Towards Autonomous Visual Navigation in Arable Fields

Our paper can be found on arXiv: [Towards Autonomous Crop-Agnostic Visual Navigation in Arable Fields](https://arxiv.org/abs/2109.11936). The implementation is available at [visual-multi-crop-row-navigation](https://github.com/Agricultural-Robotics-Bonn/visual-multi-crop-row-navigation). More details about our project BonnBot-I and PhenoRob: https://www.phenorob.de/ http://agrobotics.uni-bonn.de/

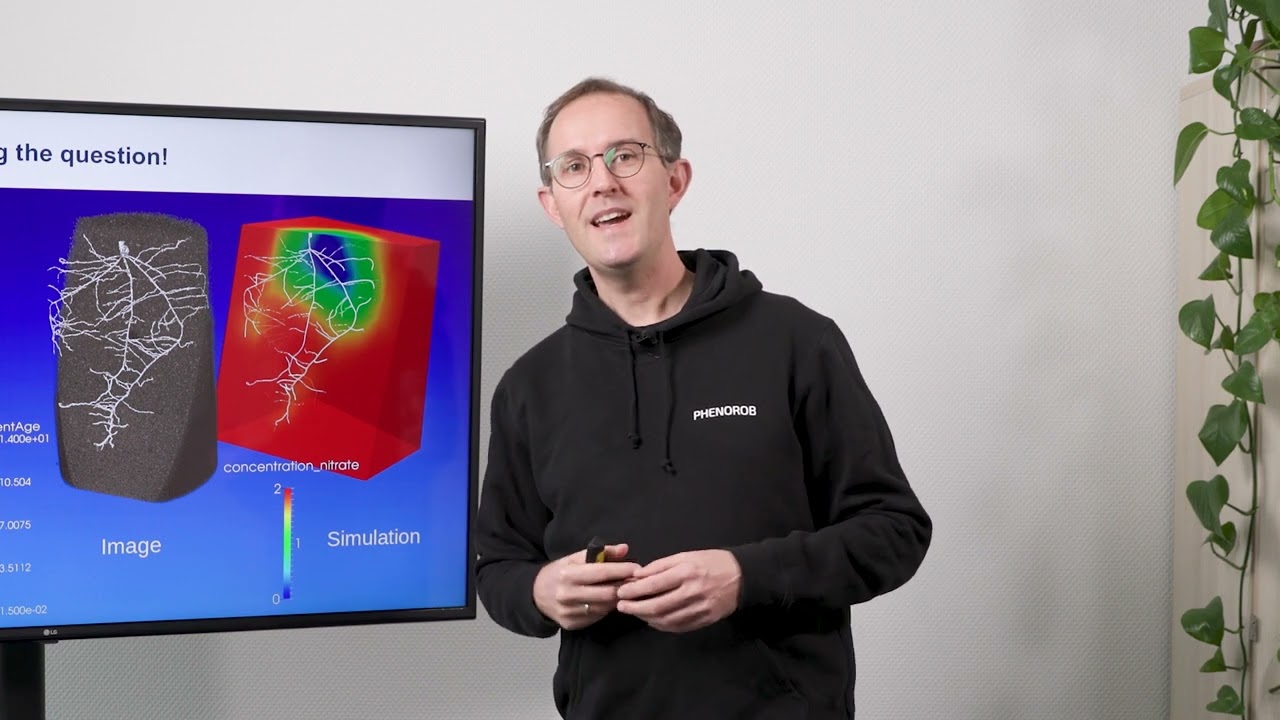

Johannes Postma: Crop Improvement From Phenotyping Roots – Highlights Reveal Expanding Opportunities

Johannes A. Postma is a PhenoRob Member and Researcher at the Institute of Bio- and Geosciences (IBG-2), Forschungszentrum Jülich. Saoirse R. Tracy, Kerstin A. Nagel, Johannes A. Postma, Heike Fassbender, Anton Wasson, Michelle Watt (2020), Crop Improvement from Phenotyping Roots: Highlights Reveal Expanding Opportunities, Trends in Plant Science, Volume 25, Issue 1, Pages 105-118. https://doi.org/10.1016/j.tplants.2019.10.015

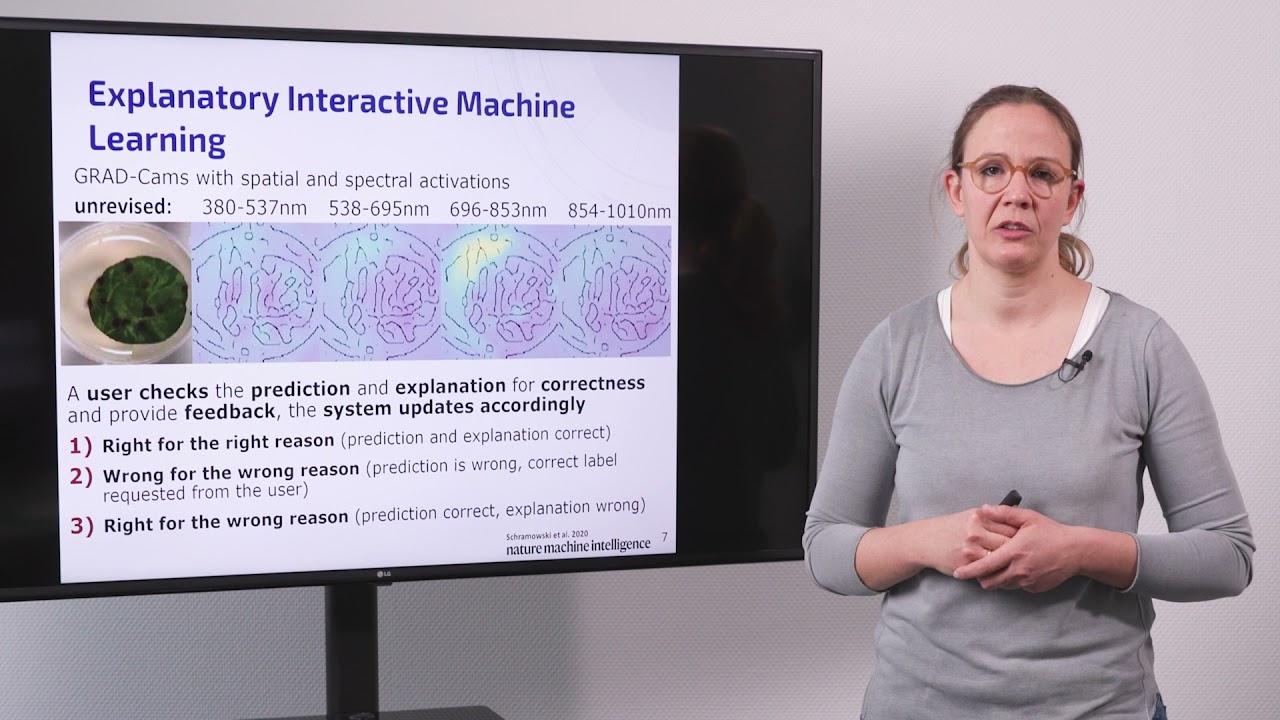

A.-K. Mahlein: Making Deep Neural Networks Right for the Right Scientific Reason…

Prof. Dr. Anne-Katrin Mahlein is Principal Investigator at PhenoRob and Director of the Institute of Sugarbeet Research (IfZ) at the University of Göttingen. Making deep neural networks right for the right scientific reasons by interacting with their explanations. Schramowski, P., Stammer, W., Teso, S. et al. Nat Mach Intell 2, 476–486 (2020). https://doi.org/10.1038/s42256-020-0212-3

Lasse Klingbeil: Pheno4D: A spatio-temporal dataset of maize and tomato plant point clouds…

Dr. Lasse Klingbeil is Postdoc at the Institute of Geodesy and Geoinformation (IGG), University of Bonn and PhenoRob Member. D. Schunck, F. Magistri, R. A. Rosu, A. Cornelißen, N. Chebrolu, S. Paulus, J. Léon, S. Behnke, C. Stachniss, H. Kuhlmann, and L. Klingbeil, “Pheno4D: A spatio-temporal dataset of maize and tomato plant point clouds for phenotyping and advanced plant analysis,” PLOS ONE, vol. 16, iss. 8, pp. 1-18, 2021. Paper: https://doi.org/10.1371/journal.pone.0256340 Data: https://www.ipb.uni-bonn.de/data/pheno4d/

Uwe Rascher: Measuring and understanding the dynamics of plant photosynthesis across scales…

Measuring and understanding the dynamics of plant photosynthesis across scales – from single plants to satellites. Prof. Dr. Uwe Rascher is Principal Investigator at PhenoRob and Professor of Quantitative Physiology of Crops, Institute of Bio- and Geosciences (IBG-2), Forschungszentrum Jülich and Institute of Crop Science and Resource Conservation (INRES), University of Bonn. Rascher et al. (2015) Sun-induced fluorescence – a new probe of photosynthesis: First maps from the imaging spectrometer HyPlant. Global Change Biology, 21, 4673-4684. https://doi.org/10.1111/gcb.13017 Siegmann et al. (2019) The High-Performance Airborne Imaging Spectrometer HyPlant—From Raw Images to Top-of-Canopy Reflectance and Fluorescence Products: Introduction of an Automatized Processing Chain. Remote Sensing, 11, article no. 2760. https://doi.org/10.3390/rs11232760

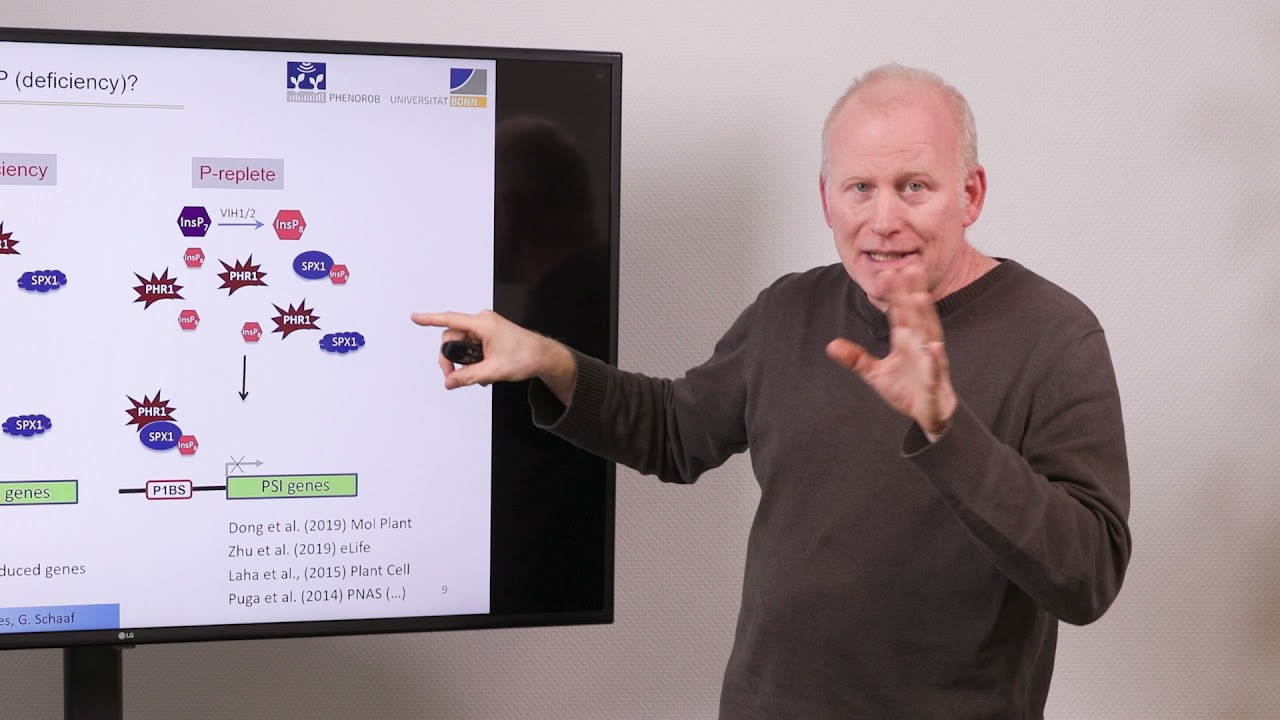

G. Schaaf: ITPK1 is an InsP6/ADP phosphotransferase that controls phosphate signaling in Arabidopsis

Prof. Dr. Gabriel Schaaf is Principal Investigator at PhenoRob and Professor and head of the Ecophysiology of Plant Nutrition Group, Institute of Crop Science and Resource Conservation (INRES), University of Bonn. ITPK1 is an InsP6/ADP phosphotransferase that controls phosphate signaling in Arabidopsis. Esther Riemer, Danye Qiu, Debabrata Laha, Robert K. Harmel, Philipp Gaugler, Verena Gaugler, Michael Frei, Mohammad-Reza Hajirezaei, Nargis Parvin Laha, Lukas Krusenbaum, Robin Schneider, Adolfo Saiardi, Dorothea Fiedler, Henning J. Jessen, Gabriel Schaaf, Ricardo F. H. Giehl. Molecular Plant, Volume 14, Issue 11, 1 November 2021, Pages 1864-1880. https://doi.org/10.1016/j.molp.2021.07.011

Crop and Weed Counting and Tracking

Example video from Crop Agnostic Monitoring Driven by Deep Learning available here: https://www.frontiersin.org/articles/10.3389/fpls.2021.786702/full?&utm_source=Email_to_authors_&utm_medium=Email&utm_content=T1_11.5e1_author&utm_campaign=Email_publication&field=&journalName=Frontiers_in_Plant_Science&id=786702 The different colours for the bounding boxes represent the species of plant. The outer box is the final prediction over the whole tracklet (most consistent class that is predicted) and the inner box is the class (species) for the current frame. In this example we have 8 classes of plants. The numbers for each plant are the visual surface area of the plant taken by using a stereo camera and the instance-based semantic segmentation. Citation: Halstead M, Ahmadi A, Smitt C, Schmittmann O and McCool C (2021) Crop Agnostic Monitoring Driven by Deep Learning. Front. Plant Sci. 12:786702. doi: 10.3389/fpls.2021.786702
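The outer-box and inner-box labels described above can be illustrated with a short sketch. It assumes that "most consistent class" means the class predicted most often across the frames of a tracklet. The function name and the class names are hypothetical, and this is not taken from the authors' code.

```python
from collections import Counter


def tracklet_class(frame_classes: list[str]) -> str:
    """Return the class predicted most often across a tracklet's frames.

    Ties go to the class that appeared first, which follows from
    Counter.most_common keeping insertion order for equal counts.
    """
    if not frame_classes:
        raise ValueError("a tracklet needs at least one frame prediction")
    return Counter(frame_classes).most_common(1)[0][0]


# Hypothetical per-frame predictions for one tracked plant.
predictions = ["crop", "weed_a", "crop", "crop", "weed_a"]

outer_box = tracklet_class(predictions)  # label for the whole tracklet
inner_box = predictions[-1]              # label for the current frame

print(outer_box)  # crop
print(inner_box)  # weed_a
```

Voting over the tracklet smooths out single-frame misclassifications, which is why the two boxes can disagree in the video.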

Talk by B. Mersch: Self-supervised Point Cloud Prediction Using 3D Spatio-temporal CNNs

B. Mersch, X. Chen, J. Behley, and C. Stachniss, “Self-supervised Point Cloud Prediction Using 3D Spatio-temporal Convolutional Networks,” in Proc. of the Conf. on Robot Learning (CoRL), 2021. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2021corl.pdf Code: https://github.com/PRBonn/point-cloud-prediction #UniBonn #StachnissLab #robotics #autonomouscars

Virtual Temporal Samples for RNNs: applied to semantic segmentation in agriculture

Normally, training a recurrent neural network (RNN) requires labeled samples from a video (temporal) sequence, which is laborious to obtain and has stymied work in this direction. By generating virtual temporal samples, we demonstrate that it is possible to train a lightweight RNN to perform semantic segmentation on two challenging agricultural datasets. Full text on arXiv: https://arxiv.org/abs/2106.10118 Check my GitHub for interesting ROS-based projects: https://github.com/alirezaahmadi
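The description does not say how the virtual temporal samples are produced. One plausible reading, shown here purely as an assumption, is to slide a crop window across a single labeled still image so that the ordered crops imitate camera motion. The function name, parameters, and toy data below are all hypothetical.

```python
def virtual_sequence(image, labels, window, step, length):
    """Cut up to `length` ordered crops from one annotated image (sketch).

    `image` and `labels` are equally sized 2D lists. Each crop is `window`
    columns wide and shifted `step` columns to the right of the previous
    one. Crops that would run past the right edge are dropped.
    """
    width = len(image[0])
    frames = []
    for t in range(length):
        x0 = t * step
        if x0 + window > width:
            break
        frame = [row[x0:x0 + window] for row in image]
        mask = [row[x0:x0 + window] for row in labels]
        frames.append((frame, mask))
    return frames


# Toy 2x6 "image" and a matching label mask (1 = plant, 0 = soil).
image = [[0, 1, 2, 3, 4, 5],
         [6, 7, 8, 9, 10, 11]]
labels = [[0, 0, 1, 1, 0, 0],
          [0, 1, 1, 1, 1, 0]]

sequence = virtual_sequence(image, labels, window=3, step=1, length=4)
print(len(sequence))   # 4
print(sequence[1][0])  # [[1, 2, 3], [7, 8, 9]]
```

Each crop keeps its pixel-aligned mask, so one annotated image yields a short labeled sequence without any additional annotation.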

Talk by X. Chen: Moving Object Segmentation in 3D LiDAR Data: A Learning Approach (IROS & RAL’21)

X. Chen, S. Li, B. Mersch, L. Wiesmann, J. Gall, J. Behley, and C. Stachniss, “Moving Object Segmentation in 3D LiDAR Data: A Learning-based Approach Exploiting Sequential Data,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 6529-6536, 2021. doi:10.1109/LRA.2021.3093567 #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by B. Mersch: Maneuver-based Trajectory Prediction for Self-driving Cars Using … (IROS’21)

B. Mersch, T. Höllen, K. Zhao, C. Stachniss, and R. Roscher, “Maneuver-based Trajectory Prediction for Self-driving Cars Using Spatio-temporal Convolutional Networks,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/mersch2021iros.pdf #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Decision Snippet Features

We show that we can compress random forest models while maintaining their predictive performance by finding Decision Snippet Features. In our presentation at the IEEE International Conference on Pattern Recognition 2021, we show what Decision Snippets are, how you can find them, and which benefits you can expect from Decision Snippets.

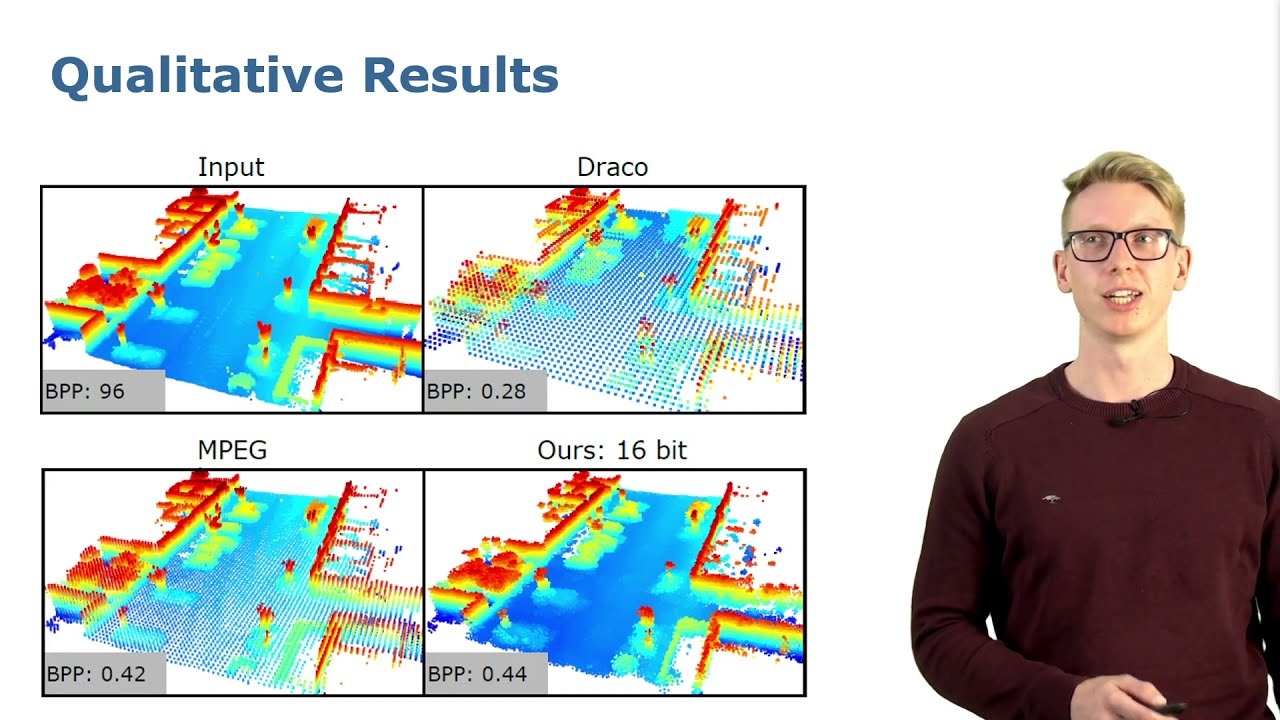

Talk by L. Wiesmann: Deep Compression for Dense Point Cloud Maps (RAL-ICRA 2021)

L. Wiesmann, A. Milioto, X. Chen, C. Stachniss, and J. Behley, “Deep Compression for Dense Point Cloud Maps,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2060-2067, 2021. doi:10.1109/LRA.2021.3059633 Code: https://github.com/PRBonn/deep-point-map-compression Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/wiesmann2021ral.pdf #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by F. Magistri: Phenotyping Exploiting Differentiable Rendering with Self-Consistency (ICRA’21)

F. Magistri, N. Chebrolu, J. Behley, and C. Stachniss, “Towards In-Field Phenotyping Exploiting Differentiable Rendering with Self-Consistency Loss,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2021icra.pdf #UniBonn #StachnissLab #robotics #PhenoRob #neuralnetworks #talk

ICRA’21: Phenotyping Exploiting Differentiable Rendering with Consistency Loss by Magistri et al.

F. Magistri, N. Chebrolu, J. Behley, and C. Stachniss, “Towards In-Field Phenotyping Exploiting Differentiable Rendering with Self-Consistency Loss,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2021icra.pdf #UniBonn #StachnissLab #robotics #PhenoRob #neuralnetworks #talk

Talk by I. Vizzo: Poisson Surface Reconstruction for LiDAR Odometry and Mapping (ICRA’21)

I. Vizzo, X. Chen, N. Chebrolu, J. Behley, and C. Stachniss, “Poisson Surface Reconstruction for LiDAR Odometry and Mapping,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/vizzo2021icra.pdf Code: https://github.com/PRBonn/puma #UniBonn #StachnissLab #robotics #autonomouscars #slam #talk

Talk by J. Weyler: Joint Plant Instance Detection and Leaf Count Estimation … (RAL+ICRA’21)

J. Weyler, A. Milioto, T. Falck, J. Behley, and C. Stachniss, “Joint Plant Instance Detection and Leaf Count Estimation for In-Field Plant Phenotyping,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 3599-3606, 2021. doi:10.1109/LRA.2021.3060712 #UniBonn #StachnissLab #robotics #PhenoRob #neuralnetworks #talk

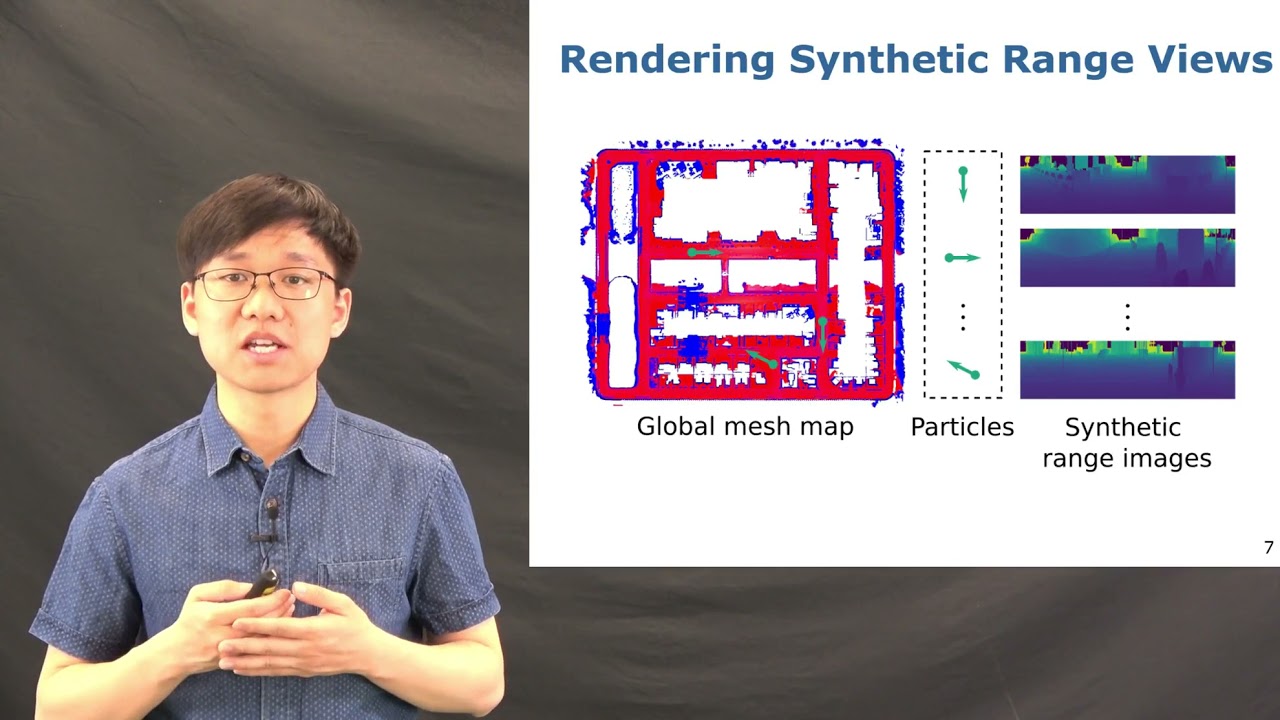

Talk by X. Chen: Range Image-based LiDAR Localization for Autonomous Vehicles (ICRA’21)

X. Chen, I. Vizzo, T. Läbe, J. Behley, and C. Stachniss, “Range Image-based LiDAR Localization for Autonomous Vehicles,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2021icra.pdf Code: https://github.com/PRBonn/range-mcl #UniBonn #StachnissLab #robotics #autonomouscars #neuralnetworks #talk

Talk by A. Reinke: Simple But Effective Redundant Odometry for Autonomous Vehicles (ICRA’21)

A. Reinke, X. Chen, and C. Stachniss, “Simple But Effective Redundant Odometry for Autonomous Vehicles,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/reinke2021icra.pdf Code: https://github.com/PRBonn/MutiverseOdometry #UniBonn #StachnissLab #robotics #autonomouscars #talk

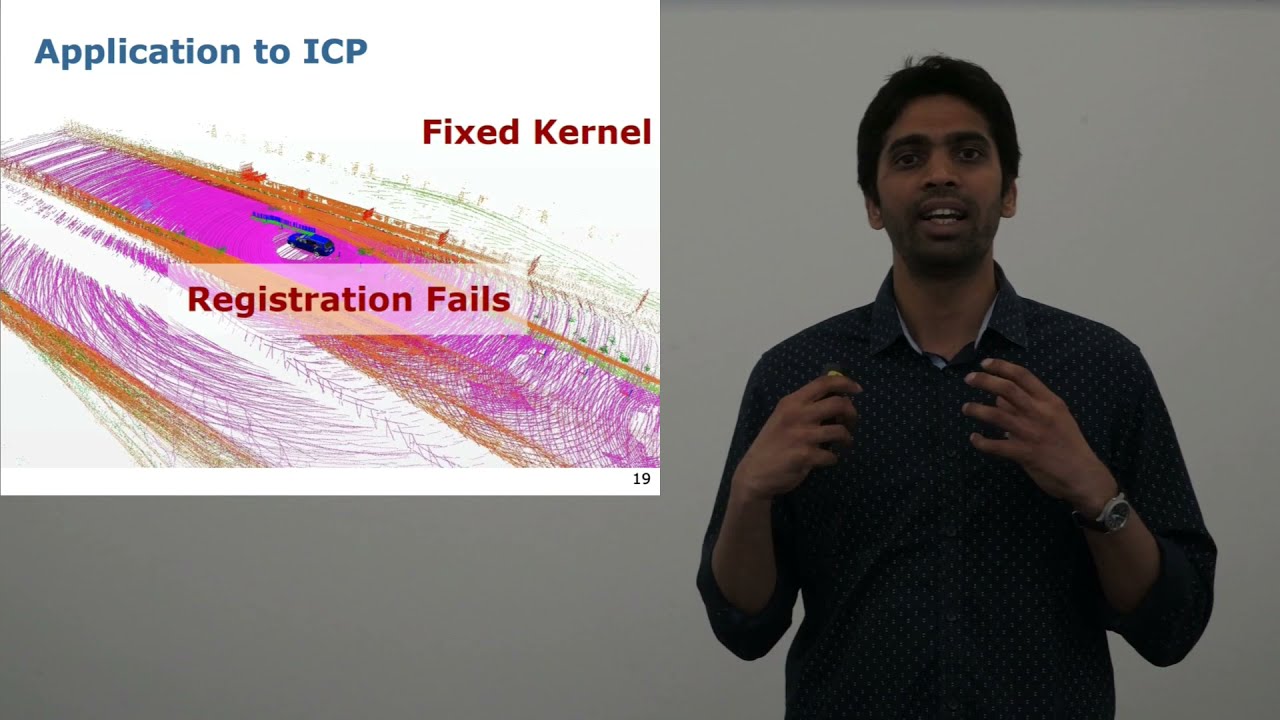

Talk by N. Chebrolu: Adaptive Robust Kernels for Non-Linear Least Squares Problems (RAL+ICRA’21)

N. Chebrolu, T. Läbe, O. Vysotska, J. Behley, and C. Stachniss, “Adaptive Robust Kernels for Non-Linear Least Squares Problems,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2240-2247, 2021. doi:10.1109/LRA.2021.3061331 Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chebrolu2021ral.pdf #UniBonn #StachnissLab #robotics #autonomouscars #slam #talk

ICRA’21: Range Image-based LiDAR Localization for Autonomous Vehicles by Chen et al.

X. Chen, I. Vizzo, T. Läbe, J. Behley, and C. Stachniss, “Range Image-based LiDAR Localization for Autonomous Vehicles,” in Proceedings of the IEEE Int. Conf. on Robotics & Automation (ICRA), 2021. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2021icra.pdf #UniBonn #StachnissLab #robotics #autonomouscars #talk

You Only Need Adversarial Supervision for Semantic Image Synthesis, ICLR 2021 (5 min overview)

We introduce OASIS, a novel GAN model for semantic image synthesis that needs only adversarial supervision to achieve state-of-the-art performance. OpenReview: https://openreview.net/forum?id=yvQKLaqNE6M Arxiv: https://arxiv.org/abs/2012.04781 Code: https://github.com/boschresearch/OASIS

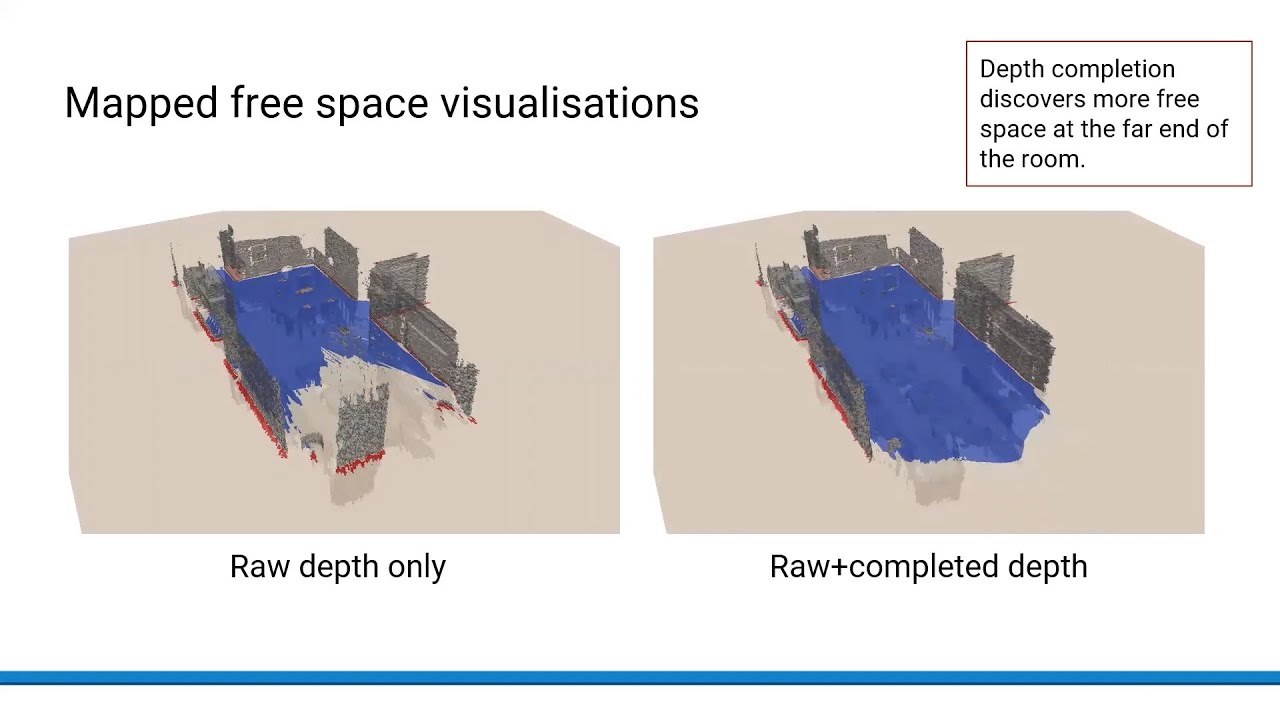

Volumetric Occupancy Mapping With Probabilistic Depth Completion for Robotic Navigation

Authors: Marija Popović, Florian Thomas, Sotiris Papatheodorou, Nils Funk, Teresa Vidal-Calleja, and Stefan Leutenegger. Abstract: In robotic applications, a key requirement for safe and efficient motion planning is the ability to map obstacle-free space in unknown, cluttered 3D environments. However, commodity-grade RGB-D cameras commonly used for sensing fail to register valid depth values on shiny, glossy, bright, or distant surfaces, leading to missing data in the map. To address this issue, we propose a framework leveraging probabilistic depth completion as an additional input for spatial mapping. We introduce a deep learning architecture providing uncertainty estimates for the depth completion of RGB-D images. Our pipeline exploits the inferred missing depth values and depth uncertainty to complement raw depth images and improve the speed and quality of free space mapping. Evaluations on synthetic data show that our approach maps significantly more correct free space with relatively low error when compared against using raw data alone in different indoor environments, thereby producing more complete maps that can be directly used for robotic navigation tasks. The performance of our framework is validated using real-world data. Paper: https://arxiv.org/abs/2012.03023
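To make the idea of using depth uncertainty during mapping more concrete, here is a minimal sketch of a log-odds occupancy update along a single camera ray, where a completed depth value with high uncertainty contributes less to the map. This is an illustration only and not the pipeline from the paper: the 1D grid, the weighting function `free_space_weight`, and the parameters `sigma_ref`, `p_free`, and `p_occ` are all assumptions chosen for the example.

```python
import math


def log_odds(p):
    """Convert a probability into log-odds form."""
    return math.log(p / (1.0 - p))


def free_space_weight(depth_std, sigma_ref=0.1):
    """Hypothetical weighting: 1.0 for a certain depth, toward 0 as the std grows."""
    return 1.0 / (1.0 + (depth_std / sigma_ref) ** 2)


def update_ray(grid, depth, depth_std, cell_size=0.1, p_free=0.4, p_occ=0.7):
    """Update a 1D list of log-odds cells along one ray (assumed layout).

    Cells in front of the measured depth are pushed toward "free" and the
    cell containing the depth toward "occupied". Both updates are scaled
    by the uncertainty weight.
    """
    weight = free_space_weight(depth_std)
    hit = int(depth / cell_size)  # index of the cell that contains the depth
    for i in range(min(hit, len(grid))):
        grid[i] += weight * log_odds(p_free)
    if hit < len(grid):
        grid[hit] += weight * log_odds(p_occ)
    return grid


if __name__ == "__main__":
    certain = update_ray([0.0] * 5, depth=0.25, depth_std=0.0)
    uncertain = update_ray([0.0] * 5, depth=0.25, depth_std=0.1)
    print(certain)
    print(uncertain)
```

In this sketch, a depth with a standard deviation equal to `sigma_ref` moves the map half as far as a fully certain one, so uncertain completed depths still fill gaps but are easier for later measurements to override.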

EasyPBR: A Lightweight Physically-Based Renderer

Presentation for the paper by Radu Alexandru Rosu and Sven Behnke: “EasyPBR: A Lightweight Physically-Based Renderer,” 16th International Conference on Computer Graphics Theory and Applications (GRAPP), 2021. Abstract: Modern rendering libraries provide unprecedented realism, producing real-time photorealistic 3D graphics on commodity hardware. Visual fidelity, however, comes at the cost of increased complexity and difficulty of usage, with many rendering parameters requiring a deep understanding of the pipeline. We propose EasyPBR as an alternative rendering library that strikes a balance between ease-of-use and visual quality. EasyPBR consists of a deferred renderer that implements recent state-of-the-art approaches in physically based rendering. It offers an easy-to-use Python and C++ interface that allows high-quality images to be created in only a few lines of code or directly through a graphical user interface. The user can choose between fully controlling the rendering pipeline or letting EasyPBR automatically infer the best parameters based on the current scene composition. The EasyPBR library can help the community to more easily leverage the power of current GPUs to create realistic images. These can then be used as synthetic data for deep learning or for creating animations for academic purposes. Paper: http://www.ais.uni-bonn.de/papers/GRAPP_2021_Rosu_EasyPBR.pdf

IROS’20: Segmentation-Based 4D Registration of Plants Point Clouds for Phenotyping by Magistri et al

F. Magistri, N. Chebrolu, and C. Stachniss, “Segmentation-Based 4D Registration of Plants Point Clouds for Phenotyping,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2020iros.pdf #UniBonn #StachnissLab #robotics #PhenoRob #talk

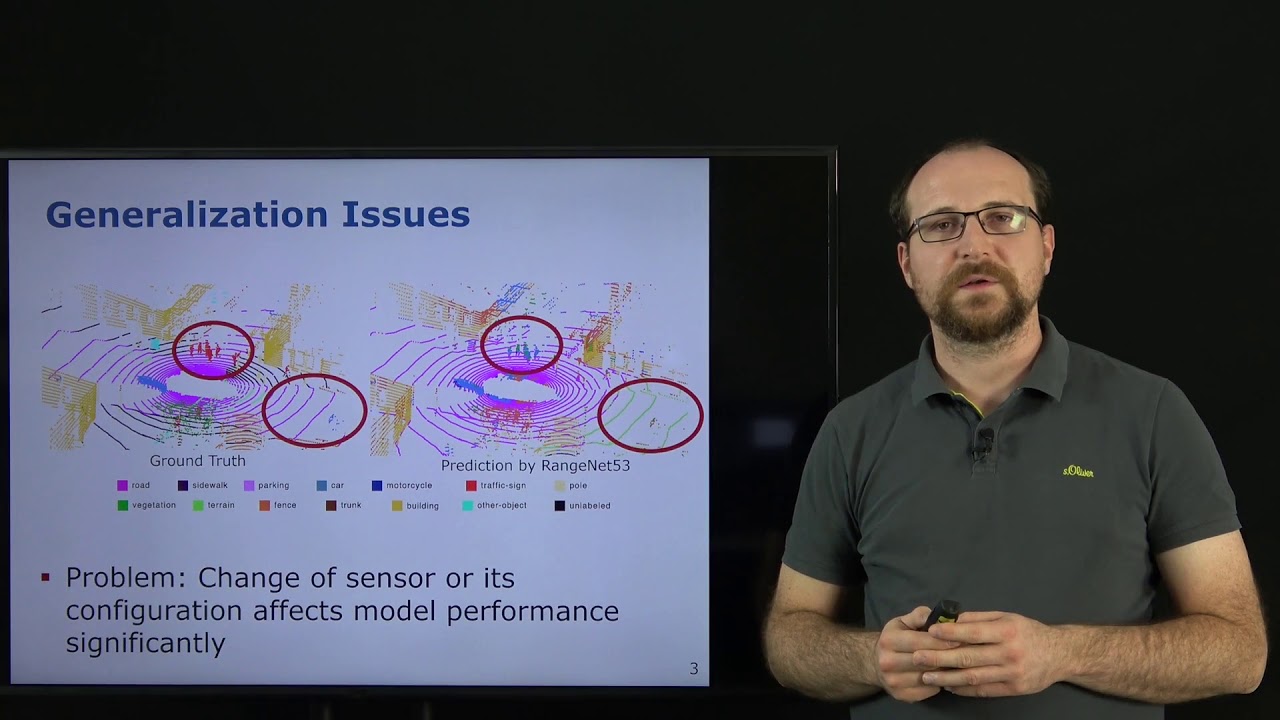

IROS’20: Domain Transfer for Semantic Segmentation of LiDAR Data using DNNs presented by J. Behley

F. Langer, A. Milioto, A. Haag, J. Behley, and C. Stachniss, “Domain Transfer for Semantic Segmentation of LiDAR Data using Deep Neural Networks,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/langer2020iros.pdf

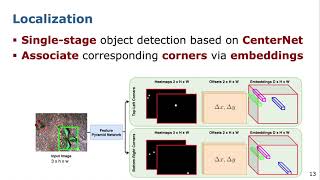

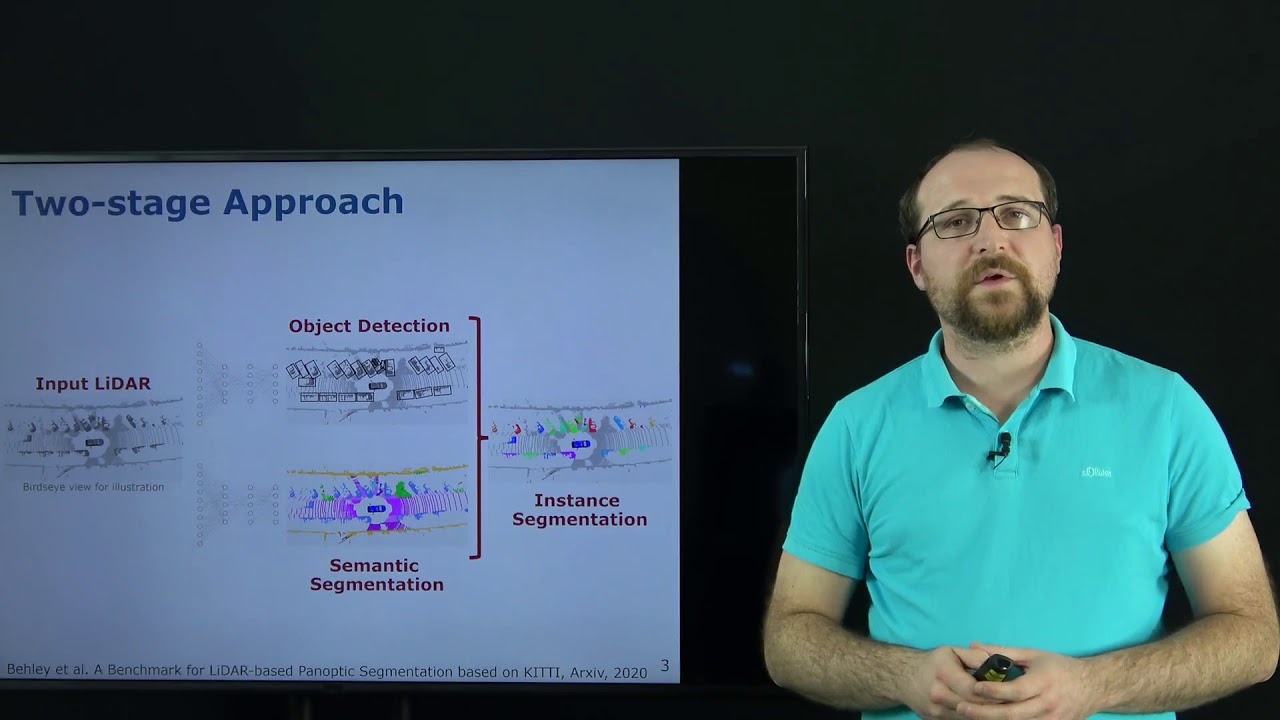

IROS’20: LiDAR Panoptic Segmentation for Autonomous Driving presented by J. Behley

Trailer video for the paper: A. Milioto, J. Behley, C. McCool, and C. Stachniss, “LiDAR Panoptic Segmentation for Autonomous Driving,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/milioto2020iros.pdf

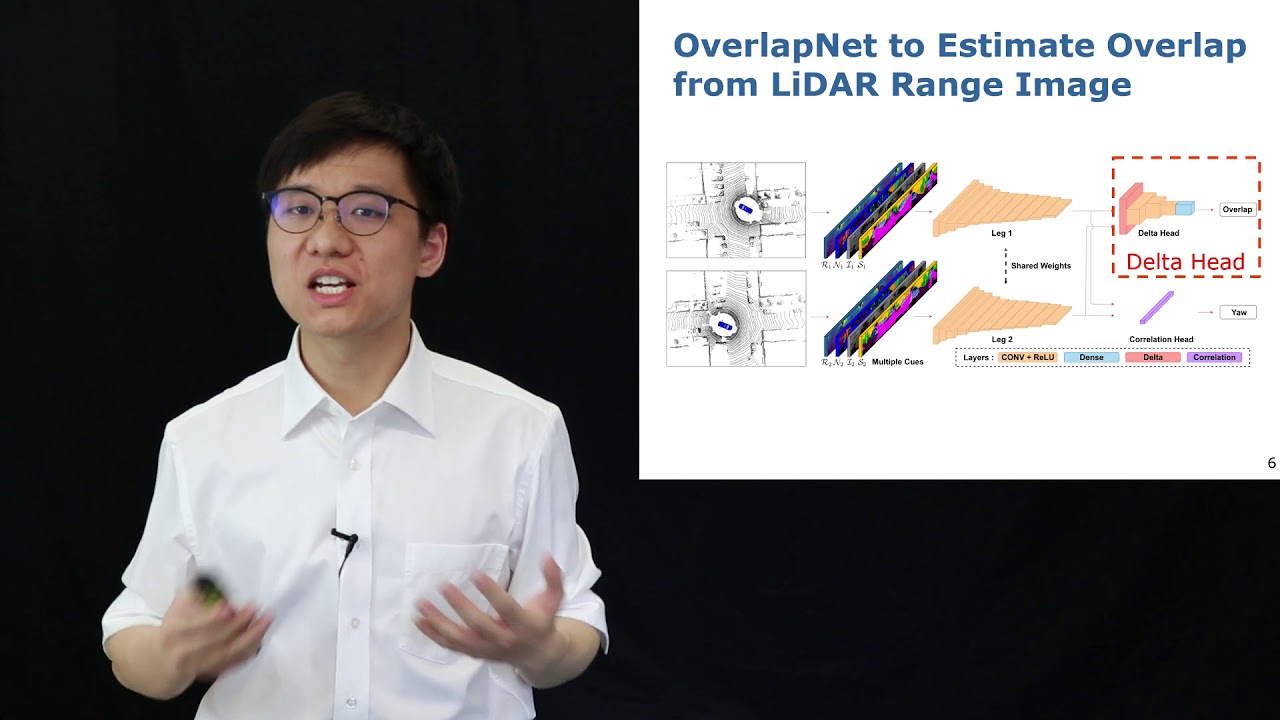

IROS’20: Learning an Overlap-based Observation Model for 3D LiDAR Localization by Chen et al.

Trailer video for the paper: X. Chen, T. Läbe, L. Nardi, J. Behley, and C. Stachniss, “Learning an Overlap-based Observation Model for 3D LiDAR Localization,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. Paper: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2020iros.pdf Code available!

Online Object-Oriented Semantic Mapping with the Toyota HSR Robot

This video demonstrates our semantic mapping framework, which performs online mapping and object updating from object detections in RGB-D data and provides various 2D and 3D representations of the mapped objects. Our mapping system is highly efficient and runs at more than 10 Hz. The paper describes the framework in detail: https://arxiv.org/abs/2011.06895

Deep Learning for Non-Invasive Diagnosis of Nutrient Deficiencies in Sugar Beet Using RGB Images

Yi, J.; Krusenbaum, L.; Unger, P.; Hüging, H.; Seidel, S.J.; Schaaf, G.; Gall, J. Deep Learning for Non-Invasive Diagnosis of Nutrient Deficiencies in Sugar Beet Using RGB Images. Sensors 2020, 20, 5893. https://www.mdpi.com/1424-8220/20/20/5893

C. Pahmeyer, T. Kuhn & W. Britz – ‘Fruchtfolge’: A crop rotation decision support system (Trailer)

Watch the full presentation: http://digicrop.de/program/fruchtfolge-a-crop-rotation-decision-support-system-for-optimizing-cropping-choices-with-big-data-and-spatially-explicit-modeling/

Lukas Drees – Temporal Prediction & Evaluation of Brassica Growth in the Field using cGANs (Trailer)

Watch the full presentation: http://digicrop.de/program/temporal-prediction-and-evaluation-of-brassica-growth-in-the-field-using-conditional-generative-adversarial-networks/

Laura Zabawa – Counting grapevine berries in images via semantic segmentation

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

J. Yi – Deep learning for non-invasive diagnosis of nutrient deficiencies in sugar beet

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Laura Zabawa – Counting grapevine berries in images via semantic segmentation (Trailer)

Watch the full presentation: http://digicrop.de/program/counting-grapevine-berries-in-images-via-semantic-segmentation/

D. Gogoll – Unsupervised Domain Adaptation for Transferring Plant Classification Systems (Trailer)

Watch the full presentation: http://digicrop.de/program/unsupervised-domain-adaptation-for-transferring-plant-classification-systems-to-new-field-environments-crops-and-robots/

Linmei Shang et al. – Adoption and diffusion of digital farming technologies (Trailer)

Watch the full presentation: http://digicrop.de/program/adoption-and-diffusion-of-digital-farming-technologies-integrating-farm-level-evidence-and-system-level-interaction/

Linmei Shang et al. – Adoption and diffusion of digital farming technologies

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Nived Chebrolu – Spatio-temporal registration of plant point clouds for phenotyping (Talk)

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Nived Chebrolu – Spatio-temporal registration of plant point clouds for phenotyping (Trailer)

Watch the full presentation: http://digicrop.de/program/spatio-temporal-registration-of-plant-point-clouds-for-phenotyping/

J. Weyler – Joint Plant Instance Detection & Leaf Count Estimation for Plant Phenotyping (Trailer)

Watch the full presentation: http://digicrop.de/program/joint-plant-instance-detection-and-leaf-count-estimation-for-in-field-plant-phenotyping/

Jan Weyler – Joint Plant Instance Detection and Leaf Count Estimation for In-Field Plant Phenotyping

International Conference on Digital Technologies for Sustainable Crop Production (DIGICROP 2020) • November 1-10, 2020 • http://digicrop.de/

Zoomless Maps: External Labeling Methods for the Interactive Exploration of Dense Point Sets at a F

Authors: Sven Gedicke, Annika Bonerath, Benjamin Niedermann, Jan-Henrik Haunert. VIS website: http://ieeevis.org/year/2020/welcome Abstract: Visualizing spatial data on small-screen devices such as smartphones and smartwatches poses new challenges in computational cartography. The current interfaces for map exploration require their users to zoom in and out frequently. Indeed, zooming and panning are tools suitable for choosing the map extent corresponding to an area of interest. They are not as suitable, however, for resolving the graphical clutter caused by a high feature density since zooming in to a large map scale leads to a loss of context. Therefore, in this paper, we present new external labeling methods that allow a user to navigate through dense sets of points of interest while keeping the current map extent fixed. We provide a unified model, in which labels are placed at the boundary of the map and visually associated with the corresponding features via connecting lines, which are called leaders. Since the screen space is limited, labeling all features at the same time is impractical. Therefore, at any time, we label a subset of the features. We offer interaction techniques to change the current selection of features systematically and, thus, give the user access to all features. We distinguish three methods, which allow the user either to slide the labels along the bottom side of the map or to browse the labels based on pages or stacks. We present a generic algorithmic framework that provides us with the possibility of expressing the different variants of interaction techniques as optimization problems in a unified way. We propose both exact algorithms and fast and simple heuristics that solve the optimization problems taking into account different criteria such as the ranking of the labels, the total leader length, and the distance between leaders. In experiments on real-world data, we evaluate these algorithms and discuss the three variants with respect to their strengths and weaknesses, demonstrating the flexibility of the presented algorithmic framework.
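The page-based browsing described in the abstract can be illustrated with a small sketch. It is a naive heuristic written for this listing, not one of the exact algorithms or heuristics from the paper: features are split into pages by rank, and on each page the labels are assigned to slots along the bottom edge in order of their x-coordinate. The `Feature` class, the equal-width slot layout, and the straight-line leader length are assumptions, and the ordering does not guarantee crossing-free leaders.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Feature:
    name: str
    x: float     # horizontal map position
    y: float     # vertical map position (0 = bottom edge of the map)
    rank: float  # higher means more important


def paginate(features, labels_per_page):
    """Split features into pages, most important features first."""
    ordered = sorted(features, key=lambda f: f.rank, reverse=True)
    return [ordered[i:i + labels_per_page]
            for i in range(0, len(ordered), labels_per_page)]


def place_page(page, map_width, labels_per_page):
    """Assign each feature on a page to a label slot on the bottom edge.

    Slots have equal width and are filled left to right in order of the
    feature x-coordinates. Returns (feature, slot_x, leader_length) tuples,
    where leader_length is the straight-line distance to the slot center.
    """
    slot_width = map_width / labels_per_page
    placed = []
    for slot, feature in enumerate(sorted(page, key=lambda f: f.x)):
        slot_x = (slot + 0.5) * slot_width
        leader_length = hypot(feature.x - slot_x, feature.y)
        placed.append((feature, slot_x, leader_length))
    return placed


if __name__ == "__main__":
    features = [
        Feature("a", 1.0, 2.0, 0.9),
        Feature("b", 8.0, 3.0, 0.5),
        Feature("c", 4.0, 1.0, 0.7),
    ]
    for number, page in enumerate(paginate(features, 2), start=1):
        for feature, slot_x, length in place_page(page, 10.0, 2):
            print(number, feature.name, slot_x, round(length, 3))
```

Summing the leader lengths per page gives a simple quality score for a placement, which is one of the criteria the abstract names alongside label ranking and the distance between leaders.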

Talk by F. Magistri: Segmentation-Based 4D Registration of Plants Point Clouds (IROS’20)

Paper: F. Magistri, N. Chebrolu, and C. Stachniss, “Segmentation-Based 4D Registration of Plants Point Clouds for Phenotyping,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/magistri2020iros.pdf

Multi-Modal Deep Learning with Sentinel-3 Observations for the Detection of Oceanic Internal Waves

Presentation given by Lukas Drees at the ISPRS Congress 2020. Paper: Drees, L., Kusche, J., & Roscher, R. (2020). Multi-Modal Deep Learning with Sentinel-3 Observations for the Detection of Oceanic Internal Waves. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2, 813-820.

Talk by J. Behley on Domain Transfer for Semantic Segmentation of LiDAR Data using DNNs… (IROS’20)

F. Langer, A. Milioto, A. Haag, J. Behley, and C. Stachniss, “Domain Transfer for Semantic Segmentation of LiDAR Data using Deep Neural Networks,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2020. PDF: https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/langer2020iros.pdf

Talk by X. Chen on Learning an Overlap-based Observation Model for 3D LiDAR Localization (IROS’20)